| Type | Member | Description |
|---|---|---|
| template<template< bool, QudaPCType, typename > class P, int nParity, bool dagger, bool xpay> void | instantiate (TuneParam &tp, const qudaStream_t &stream) | This instantiate function is used to instantiate the KernelType template required for the multi-GPU dslash kernels. |
| template<template< bool, QudaPCType, typename > class P, int nParity, bool xpay> void | instantiate (TuneParam &tp, const qudaStream_t &stream) | This instantiate function is used to instantiate the dagger template. |
| template<template< bool, QudaPCType, typename > class P, bool xpay> void | instantiate (TuneParam &tp, const qudaStream_t &stream) | This instantiate function is used to instantiate the nParity template. |
| template<template< bool, QudaPCType, typename > class P> void | instantiate (TuneParam &tp, const qudaStream_t &stream) | This instantiate function is used to instantiate the xpay template. |
| | Dslash (Arg &arg, const ColorSpinorField &out, const ColorSpinorField &in) | |
| void | setShmem (int shmem) | |
| void | setPack (bool pack, MemoryLocation location) | |
| int | Nface () const | |
| int | Dagger () const | |
| const char * | getAux (KernelType type) const | |
| void | setAux (KernelType type, const char *aux_) | |
| void | augmentAux (KernelType type, const char *extra) | |
| virtual TuneKey | tuneKey () const | |
| virtual void | preTune () | Save the output field, since the output field is both read from and written to in the exterior kernels. |
| virtual void | postTune () | Restore the output field if running an exterior kernel. |
| virtual long long | flops () const | |
| virtual long long | bytes () const | |
| | TunableVectorYZ (unsigned int vector_length_y, unsigned int vector_length_z) | |
| bool | advanceBlockDim (TuneParam &param) const | |
| void | initTuneParam (TuneParam &param) const | |
| void | defaultTuneParam (TuneParam &param) const | |
| void | resizeVector (int y, int z) const | |
| void | resizeStep (int y, int z) const | |
| | TunableVectorY (unsigned int vector_length_y) | |
| void | resizeVector (int y) const | |
| void | resizeStep (int y) const | |
| | Tunable () | |
| virtual | ~Tunable () | |
| virtual void | apply (const qudaStream_t &stream)=0 | |
| virtual std::string | paramString (const TuneParam &param) const | |
| virtual std::string | perfString (float time) const | |
| void | checkLaunchParam (TuneParam &param) | |
| CUresult | jitifyError () const | |
| CUresult & | jitifyError () | |

| Type | Member | Description |
|---|---|---|
| void | fillAuxBase () | Set the base strings used by the different dslash kernel types for autotuning. |
| void | fillAux (KernelType kernel_type, const char *kernel_str) | Specialize the auxiliary strings for each kernel type. |
| virtual bool | tuneGridDim () const | |
| virtual unsigned int | minThreads () const | |
| virtual unsigned int | minGridSize () const | |
| virtual int | gridStep () const | gridStep sets the step size when iterating the grid size in advanceGridDim. |
| void | setParam (TuneParam &tp) | |
| virtual int | tuningIter () const | |
| virtual int | blockStep () const | |
| virtual int | blockMin () const | |
| unsigned int | maxSharedBytesPerBlock () const | The maximum shared memory that a CUDA thread block can use in the autotuner. This isn't necessarily the same as maxDynamicSharedMemoryPerBlock, since that may need an explicit opt-in to enable (by calling setMaxDynamicSharedBytes for the kernel in question). If the CUDA kernel in question does this opt-in, then this function can be overridden to return maxDynamicSharedBytesPerBlock. |
| virtual bool | advanceAux (TuneParam &param) const | |
| virtual bool | advanceTuneParam (TuneParam &param) const | |
| virtual void | initTuneParam (TuneParam &param) const | |
| virtual void | defaultTuneParam (TuneParam &param) const | |
| template<template< bool, QudaPCType, typename > class P, int nParity, bool dagger, bool xpay, KernelType kernel_type> void | launch (TuneParam &tp, const qudaStream_t &stream) | This is a helper function that is used to instantiate the correct templated kernel for the dslash. It can be used for all dslash types, though in some cases we specialize to reduce compilation time. |
| virtual unsigned int | sharedBytesPerThread () const | |
| virtual unsigned int | sharedBytesPerBlock (const TuneParam &param) const | |
| virtual bool | tuneAuxDim () const | |
| virtual bool | tuneSharedBytes () const | |
| virtual bool | advanceGridDim (TuneParam &param) const | |
| virtual unsigned int | maxBlockSize (const TuneParam &param) const | |
| virtual unsigned int | maxGridSize () const | |
| virtual void | resetBlockDim (TuneParam &param) const | |
| unsigned int | maxBlocksPerSM () const | Returns the maximum number of simultaneously resident blocks per SM. This can be queried directly as of CUDA 11; previously it had to be hand coded. |
| unsigned int | maxDynamicSharedBytesPerBlock () const | Returns the maximum dynamic shared memory per block. |
| virtual bool | advanceSharedBytes (TuneParam &param) const | |
| int | writeAuxString (const char *format,...) | |
| bool | tuned () | Whether the present instance has already been tuned or not. |

template<template< int, bool, bool, KernelType, typename > class D, typename Arg>

class quda::Dslash< D, Arg >

This is the generic driver for launching Dslash kernels (the base kernel of which is defined in dslash_helper.cuh). It is templated on a template template parameter, D, which is the underlying operator wrapped in a class, and on the argument struct Arg.

- Template Parameters

| D | A class that defines the linear operator we wish to apply. This class should define an operator() method that the dslash kernel uses to apply the operator. See the wilson class in the file kernels/dslash_wilson.cuh as an example. |

| Arg | The argument struct that is used to parameterize the kernel. For the wilson class example above, the WilsonArg class defined in the same file is the corresponding argument class. |

Definition at line 32 of file dslash.h.
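The pattern described above, a driver templated on an operator template rather than on a concrete operator type, can be illustrated with a minimal self-contained C++ sketch. This is not QUDA's code: ToyArg, ToyOperator and ToyDriver are hypothetical stand-ins for Arg, D and Dslash, the two-value KernelType enum is a reduced hypothetical version, and a host loop replaces the GPU kernel.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, reduced stand-in for QUDA's KernelType enum.
enum KernelType { INTERIOR_KERNEL, EXTERIOR_KERNEL_ALL };

// Hypothetical argument struct playing the role of Arg (e.g. WilsonArg).
struct ToyArg {
  std::vector<double> in;
  std::vector<double> out;
  double a = 0.0; // scale applied when xpay is enabled: out = a * op(in) + in
};

// Hypothetical operator playing the role of D. The driver fixes the
// compile-time parameters; the operator only implements operator().
template <int nParity, bool dagger, bool xpay, KernelType kernel_type, typename Arg>
struct ToyOperator {
  Arg &arg;
  explicit ToyOperator(Arg &arg) : arg(arg) {}

  void operator()(std::size_t i) const
  {
    double v = dagger ? -arg.in[i] : arg.in[i]; // toy "dagger": just a sign flip
    arg.out[i] = xpay ? arg.a * v + arg.in[i] : v;
  }
};

// Hypothetical driver playing the role of Dslash<D, Arg>: it is templated on
// the operator template D and supplies D's template arguments at launch.
template <template <int, bool, bool, KernelType, typename> class D, typename Arg>
struct ToyDriver {
  Arg &arg;
  explicit ToyDriver(Arg &arg) : arg(arg) {}

  template <int nParity, bool dagger, bool xpay, KernelType kernel_type>
  void launch()
  {
    assert(arg.out.size() == arg.in.size());
    D<nParity, dagger, xpay, kernel_type, Arg> op(arg);
    for (std::size_t i = 0; i < arg.in.size(); i++) op(i); // host loop in place of a GPU kernel
  }
};
```

With this arrangement ToyDriver<ToyOperator, ToyArg> can select, for example, launch<1, false, true, INTERIOR_KERNEL>() at compile time, while the operator itself never needs to know how those parameters were chosen.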

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

virtual void quda::Dslash< D, Arg >::defaultTuneParam (TuneParam &param) const

Sets default values for when tuning is disabled.

Reimplemented from quda::Tunable.

Definition at line 226 of file dslash.h.
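As a rough illustration of what "default values for when tuning is disabled" can mean, the sketch below picks a fixed block size and just enough blocks to cover every thread. It is a hypothetical simplification: SimpleTuneParam, defaultLaunch and the block size of 128 are assumptions, and the actual defaultTuneParam may choose different values.

```cpp
#include <cassert>

// Hypothetical, simplified launch configuration (QUDA's TuneParam also
// carries y/z dimensions, shared memory and auxiliary data).
struct SimpleTuneParam {
  unsigned int block_x = 0;
  unsigned int grid_x = 0;
};

// Sketch of a "default" configuration used when autotuning is disabled:
// a fixed block size and enough blocks to cover every thread once.
// The block size of 128 is an assumption for illustration only.
SimpleTuneParam defaultLaunch(unsigned int min_threads, unsigned int block_x = 128)
{
  assert(block_x > 0);
  SimpleTuneParam p;
  p.block_x = block_x;
  p.grid_x = (min_threads + block_x - 1) / block_x; // ceiling division
  return p;
}
```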

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

void quda::Dslash< D, Arg >::fillAux (KernelType kernel_type, const char *kernel_str) [inline, protected]

Specialize the auxiliary strings for each kernel type.

- Parameters

| [in] | kernel_type | The kernel type we are generating the string for |

| [in] | kernel_str | String corresponding to the kernel type |

Definition at line 75 of file dslash.h.

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

void quda::Dslash< D, Arg >::fillAuxBase ()

Set the base strings used by the different dslash kernel types for autotuning.

Definition at line 55 of file dslash.h.
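A minimal sketch of the two-stage scheme described by fillAuxBase and fillAux: one base string holds the settings shared by all kernel types, and each kernel type's auxiliary string prefixes its own name onto that base, so the autotuner can keep separate entries per kernel type. The AuxStrings struct, the reduced KernelType enum and the ",commDim=...", ",xpay" and ",dagger" encodings are assumptions for illustration, not QUDA's exact format.

```cpp
#include <array>
#include <cassert>
#include <string>

// Hypothetical, reduced KernelType enum (QUDA's has more values).
enum KernelType { INTERIOR_KERNEL = 0, EXTERIOR_KERNEL_X, EXTERIOR_KERNEL_ALL, KERNEL_TYPES };

struct AuxStrings {
  std::string aux_base;                      // shared by all kernel types
  std::array<std::string, KERNEL_TYPES> aux; // one tuning string per kernel type

  // Encode the settings common to every kernel type: which of the four
  // dimensions are partitioned, plus the xpay/dagger flags.
  void fillAuxBase(const std::array<bool, 4> &comm_dim, bool xpay, bool dagger)
  {
    aux_base = ",commDim=";
    for (bool d : comm_dim) aux_base += d ? '1' : '0';
    if (xpay) aux_base += ",xpay";
    if (dagger) aux_base += ",dagger";
  }

  // Specialize: prefix the kernel-specific name onto the shared base.
  void fillAux(KernelType kernel_type, const char *kernel_str)
  {
    assert(kernel_type < KERNEL_TYPES);
    aux[kernel_type] = std::string(kernel_str) + aux_base;
  }
};
```

Keeping the shared part in one place means that a change to, say, the partitioning only has to be encoded once, while each kernel type still gets a distinct key.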

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

virtual int quda::Dslash< D, Arg >::gridStep () const

gridStep sets the step size when iterating the grid size in advanceGridDim.

- Returns

- Grid step size

Reimplemented from quda::Tunable.

Definition at line 100 of file dslash.h.
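To show what a grid step means in practice, the sketch below advances a one-dimensional grid size by a fixed step until a maximum is reached, which is the kind of iteration a gridStep value would control. The function name advanceGrid and its stopping rule are hypothetical; this page does not state which step size Dslash actually returns.

```cpp
#include <cassert>

// Hypothetical sketch: advance the grid dimension by a fixed step, as an
// autotuner might when exploring grid sizes. Returns false (leaving grid_x
// unchanged) once the next candidate would exceed max_grid.
bool advanceGrid(unsigned int &grid_x, unsigned int grid_step, unsigned int max_grid)
{
  assert(grid_step > 0);
  if (grid_x + grid_step > max_grid) return false;
  grid_x += grid_step;
  return true;
}
```

Starting from a grid of 4 with a step of 4 and a maximum of 16, the candidates visited are 4, 8, 12 and 16; a larger step would shorten the search at the cost of skipping grid sizes.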

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

template<template< bool, QudaPCType, typename > class P, int nParity, bool dagger, bool xpay>

void quda::Dslash< D, Arg >::instantiate (TuneParam &tp, const qudaStream_t &stream)

This instantiate function is used to instantiate the KernelType template required for the multi-GPU dslash kernels.

- Parameters

| [in] | tp | The tuning parameters to use for this kernel |

| [in] | stream | The qudaStream_t where the kernel will run |

Definition at line 291 of file dslash.h.
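This final instantiation stage has to turn a runtime kernel type into a compile-time template argument. A common way to do that is a switch with one case per enumerator, each calling a fully specialized launch. The sketch assumes a reduced, hypothetical KernelType enum, omits the P template template parameter and the tuning/stream arguments, and has launch return a description instead of running a kernel.

```cpp
#include <cassert>
#include <string>

// Hypothetical, reduced KernelType enum.
enum KernelType { INTERIOR_KERNEL, EXTERIOR_KERNEL_X, EXTERIOR_KERNEL_ALL };

// Hypothetical stand-in for launch<..., kernel_type>(): reports which
// compile-time instantiation was selected instead of running a kernel.
template <int nParity, bool dagger, bool xpay, KernelType kernel_type>
std::string launch()
{
  return "nParity=" + std::to_string(nParity) + (dagger ? ",dagger" : "") + (xpay ? ",xpay" : "")
    + ",kernel=" + std::to_string(static_cast<int>(kernel_type));
}

// Map the runtime kernel type onto the compile-time template argument.
template <int nParity, bool dagger, bool xpay>
std::string instantiate(KernelType kernel_type)
{
  switch (kernel_type) {
  case INTERIOR_KERNEL: return launch<nParity, dagger, xpay, INTERIOR_KERNEL>();
  case EXTERIOR_KERNEL_X: return launch<nParity, dagger, xpay, EXTERIOR_KERNEL_X>();
  case EXTERIOR_KERNEL_ALL: return launch<nParity, dagger, xpay, EXTERIOR_KERNEL_ALL>();
  }
  assert(false && "unsupported kernel type");
  return {};
}
```

Every case must be compiled, so each enumerator handled here adds one more kernel instantiation per (nParity, dagger, xpay) combination.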

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

template<template< bool, QudaPCType, typename > class P, int nParity, bool xpay>

void quda::Dslash< D, Arg >::instantiate (TuneParam &tp, const qudaStream_t &stream)

This instantiate function is used to instantiate the dagger template.

- Parameters

| [in] | tp | The tuning parameters to use for this kernel |

| [in] | stream | The qudaStream_t where the kernel will run |

Definition at line 326 of file dslash.h.

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

template<template< bool, QudaPCType, typename > class P, bool xpay>

void quda::Dslash< D, Arg >::instantiate (TuneParam &tp, const qudaStream_t &stream)

This instantiate function is used to instantiate the nParity template.

- Parameters

| [in] | tp | The tuning parameters to use for this kernel |

| [in] | stream | The qudaStream_t where the kernel will run |

Definition at line 345 of file dslash.h.

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

template<template< bool, QudaPCType, typename > class P>

void quda::Dslash< D, Arg >::instantiate (TuneParam &tp, const qudaStream_t &stream)

This instantiate function is used to instantiate the xpay template.

- Parameters

| [in] | tp | The tuning parameters to use for this kernel |

| [in] | stream | The qudaStream_t where the kernel will run |

Definition at line 365 of file dslash.h.
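Taken together, the four instantiate overloads form a chain in which each level converts one runtime value into a template argument and forwards to the next level: xpay first, then nParity, then dagger, then the kernel type. The sketch below mimics that cascade with hypothetical function names (instantiateXpay, instantiateParity, instantiateDagger, instantiateKernel) and stops short of the kernel-type stage.

```cpp
#include <cassert>
#include <string>

// Terminal level (hypothetical): in QUDA this step would go on to select the
// KernelType; here it simply reports which combination was instantiated.
template <int nParity, bool dagger, bool xpay>
std::string instantiateKernel()
{
  return std::to_string(nParity) + (dagger ? "D" : "-") + (xpay ? "X" : "-");
}

// Runtime dagger flag -> compile-time dagger template argument.
template <int nParity, bool xpay>
std::string instantiateDagger(bool dagger)
{
  return dagger ? instantiateKernel<nParity, true, xpay>() : instantiateKernel<nParity, false, xpay>();
}

// Runtime parity count (1 or 2) -> compile-time nParity.
template <bool xpay>
std::string instantiateParity(int nParity, bool dagger)
{
  switch (nParity) {
  case 1: return instantiateDagger<1, xpay>(dagger);
  case 2: return instantiateDagger<2, xpay>(dagger);
  default: assert(false && "nParity must be 1 or 2"); return {};
  }
}

// Entry point: runtime xpay flag -> compile-time xpay.
inline std::string instantiateXpay(bool xpay, int nParity, bool dagger)
{
  return xpay ? instantiateParity<true>(nParity, dagger) : instantiateParity<false>(nParity, dagger);
}
```

Each level doubles the number of instantiations, so this chain alone yields 2 × 2 × 2 = 8 combinations before the kernel type is considered, which illustrates why specializing some combinations can reduce compilation time.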

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

template<template< bool, QudaPCType, typename > class P, int nParity, bool dagger, bool xpay, KernelType kernel_type>

void quda::Dslash< D, Arg >::launch (TuneParam &tp, const qudaStream_t &stream)

This is a helper function that is used to instantiate the correct templated kernel for the dslash. It can be used for all dslash types, though in some cases we specialize to reduce compilation time.

Definition at line 248 of file dslash.h.

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

unsigned int quda::Dslash< D, Arg >::maxSharedBytesPerBlock () const [inline, protected, virtual]

The maximum shared memory that a CUDA thread block can use in the autotuner. This isn't necessarily the same as maxDynamicSharedMemoryPerBlock, since that may need an explicit opt-in to enable (by calling setMaxDynamicSharedBytes for the kernel in question). If the CUDA kernel in question does this opt-in, then this function can be overridden to return maxDynamicSharedBytesPerBlock.

- Returns

- The maximum shared-memory limit, in bytes per block, that the autotuner will use.

Reimplemented from quda::Tunable.

Definition at line 164 of file dslash.h.
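The override mechanism described above can be sketched in plain C++: a base class reports the shared-memory limit that needs no opt-in, and a class whose kernel has opted in overrides maxSharedBytesPerBlock to report the larger limit. The class names and the DeviceLimits struct are hypothetical. The 48 KiB default reflects CUDA's per-block limit for shared memory without opt-in; the opt-in limit depends on the GPU and is supplied by the caller here. In CUDA the opt-in itself is made with cudaFuncSetAttribute and cudaFuncAttributeMaxDynamicSharedMemorySize; this sketch does not call the CUDA runtime.

```cpp
#include <cassert>

// Hypothetical device limits, in bytes. 48 KiB is CUDA's per-block shared
// memory limit without opt-in; the opt-in limit is architecture dependent
// and must be set by the caller.
struct DeviceLimits {
  unsigned int static_shared_per_block = 48 * 1024;
  unsigned int opt_in_shared_per_block = 48 * 1024;
};

// Hypothetical base tunable: by default the autotuner stays within the
// limit that needs no opt-in.
class TunableSketch {
protected:
  DeviceLimits limits;

public:
  explicit TunableSketch(DeviceLimits limits) : limits(limits)
  {
    assert(limits.opt_in_shared_per_block >= limits.static_shared_per_block);
  }
  virtual ~TunableSketch() = default;
  virtual unsigned int maxSharedBytesPerBlock() const { return limits.static_shared_per_block; }
  // The autotuner only considers configurations within the reported limit.
  bool sharedBytesAllowed(unsigned int bytes) const { return bytes <= maxSharedBytesPerBlock(); }
};

// A tunable whose kernel has opted in overrides the limit.
class OptedInTunableSketch : public TunableSketch {
public:
  using TunableSketch::TunableSketch;
  unsigned int maxSharedBytesPerBlock() const override { return limits.opt_in_shared_per_block; }
};
```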

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

virtual void quda::Dslash< D, Arg >::postTune ()

Restore the output field if running an exterior kernel.

Reimplemented from quda::Tunable.

Definition at line 513 of file dslash.h.

template<template< int, bool, bool, KernelType, typename > class D, typename Arg >

virtual void quda::Dslash< D, Arg >::preTune ()

Save the output field, since the output field is both read from and written to in the exterior kernels.

Reimplemented from quda::Tunable.

Definition at line 504 of file dslash.h.
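Why preTune and postTune matter can be seen with a toy kernel that accumulates into its output: if the autotuner launched it several times while timing candidate configurations, the output would be updated once per trial. The sketch below saves the field before tuning and restores it afterwards, so only the final launch takes effect. The names exteriorAccumulate and TuneGuardSketch are hypothetical, and unlike the documented postTune, which restores only for exterior kernels, the sketch saves and restores unconditionally.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical exterior-style kernel: it reads the output field and
// accumulates into it, so running it more than once changes the result.
void exteriorAccumulate(std::vector<double> &out, const std::vector<double> &halo)
{
  assert(out.size() == halo.size());
  for (std::size_t i = 0; i < out.size(); i++) out[i] += halo[i];
}

// Sketch of the preTune/postTune pattern: back up the output field, let the
// tuner launch the kernel as many times as it needs, then restore the backup.
struct TuneGuardSketch {
  std::vector<double> &out;
  std::vector<double> backup;
  explicit TuneGuardSketch(std::vector<double> &out) : out(out) {}
  void preTune() { backup = out; }  // save before tuning launches
  void postTune() { out = backup; } // undo the tuning launches
};
```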

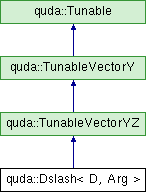

Inheritance diagram for quda::Dslash< D, Arg >: quda::Dslash< D, Arg > derives from quda::TunableVectorYZ, which derives from quda::TunableVectorY, which derives from quda::Tunable.

Inherited member groups:

- Public Member Functions inherited from quda::TunableVectorYZ
- Public Member Functions inherited from quda::TunableVectorY
- Public Member Functions inherited from quda::Tunable
- Protected Member Functions inherited from quda::TunableVectorY
- Protected Member Functions inherited from quda::Tunable
- Protected Attributes inherited from quda::TunableVectorYZ
- Protected Attributes inherited from quda::TunableVectorY
- Protected Attributes inherited from quda::Tunable