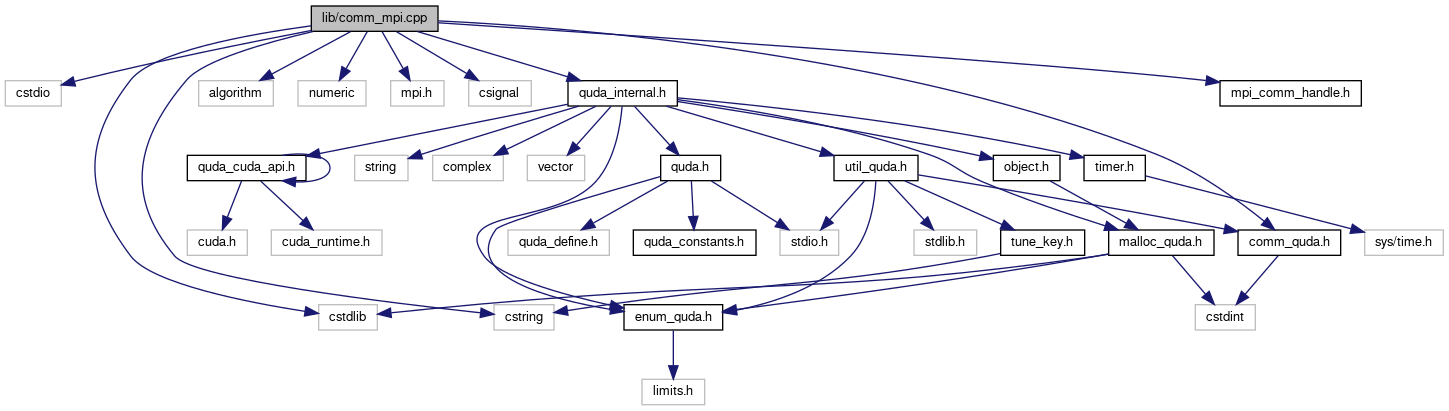

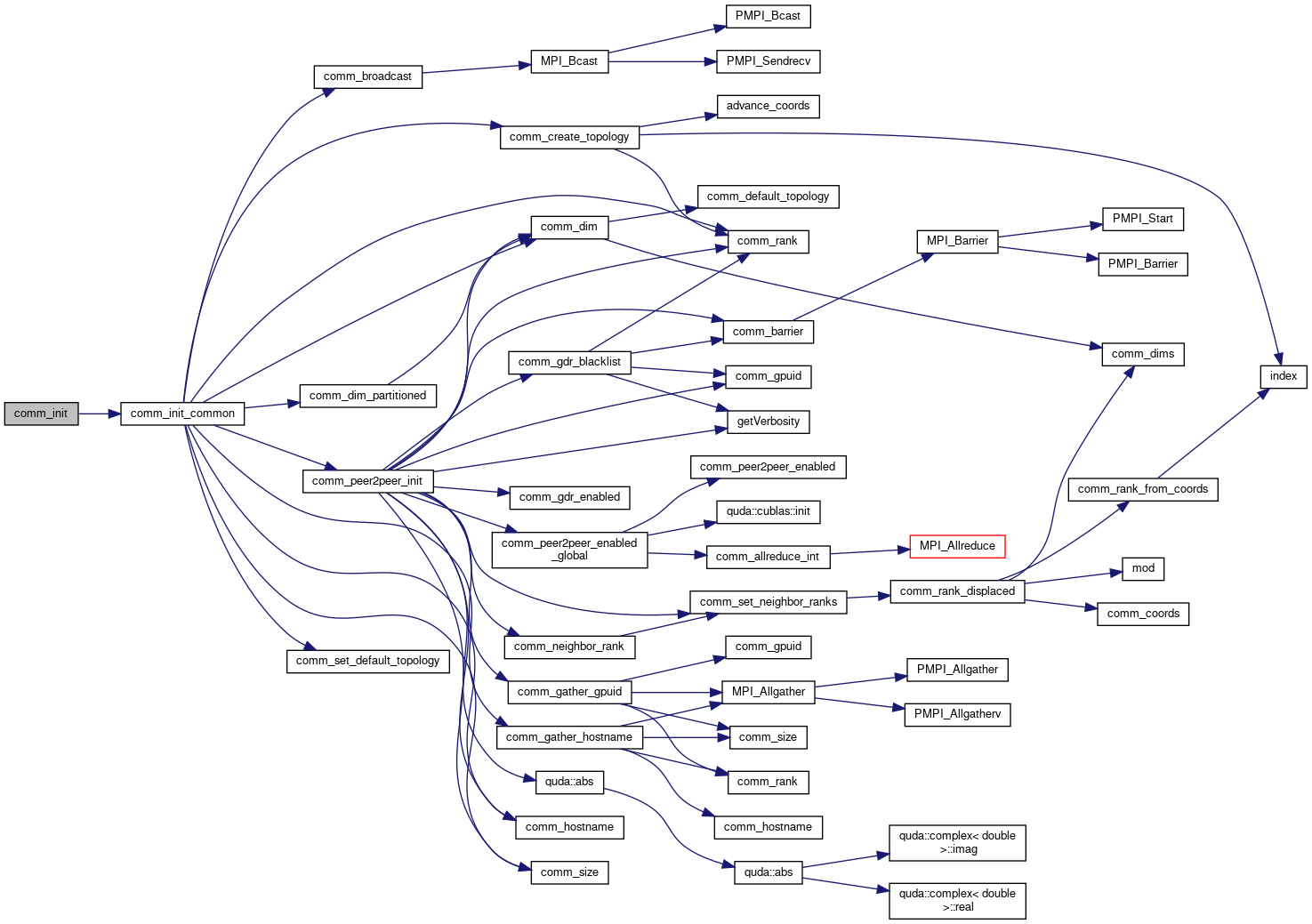

#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <algorithm>
#include <numeric>
#include <mpi.h>
#include <csignal>
#include <quda_internal.h>
#include <comm_quda.h>
#include <mpi_comm_handle.h>


Classes

| Kind | Name |
|---|---|
| struct | MsgHandle_s |

Macros

| Directive | Name |
|---|---|
| #define | MPI_CHECK(mpi_call) |

Functions

| Type | Function | Brief description |
|---|---|---|
| void | comm_gather_hostname (char *hostname_recv_buf) | Gather all hostnames. |
| void | comm_gather_gpuid (int *gpuid_recv_buf) | Gather all GPU ids. |
| void | comm_init (int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data) | Initialize the communications layer; implemented in comm_single.cpp, comm_qmp.cpp, and comm_mpi.cpp. |
| int | comm_rank (void) | |
| int | comm_size (void) | |
| static void | check_displacement (const int displacement[], int ndim) | |
| MsgHandle * | comm_declare_send_displaced (void *buffer, const int displacement[], size_t nbytes) | |
| MsgHandle * | comm_declare_receive_displaced (void *buffer, const int displacement[], size_t nbytes) | |
| MsgHandle * | comm_declare_strided_send_displaced (void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride) | |
| MsgHandle * | comm_declare_strided_receive_displaced (void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride) | |
| void | comm_free (MsgHandle *&mh) | |
| void | comm_start (MsgHandle *mh) | |
| void | comm_wait (MsgHandle *mh) | |
| int | comm_query (MsgHandle *mh) | |
| template<typename T> T | deterministic_reduce (T *array, int n) | |
| void | comm_allreduce (double *data) | |
| void | comm_allreduce_max (double *data) | |
| void | comm_allreduce_min (double *data) | |
| void | comm_allreduce_array (double *data, size_t size) | |
| void | comm_allreduce_max_array (double *data, size_t size) | |
| void | comm_allreduce_int (int *data) | |
| void | comm_allreduce_xor (uint64_t *data) | |
| void | comm_broadcast (void *data, size_t nbytes) | |
| void | comm_barrier (void) | |
| void | comm_abort (int status) | |

Variables

| Type | Declaration |
|---|---|
| static int | rank = -1 |
| static int | size = -1 |
| static const int | max_displacement = 4 |

Macro Definition Documentation

◆ MPI_CHECK

#define MPI_CHECK(mpi_call)

Definition at line 12 of file comm_mpi.cpp.

Referenced by comm_allreduce(), comm_allreduce_array(), comm_allreduce_int(), comm_allreduce_max(), comm_allreduce_max_array(), comm_allreduce_min(), comm_allreduce_xor(), comm_barrier(), comm_broadcast(), comm_declare_receive_displaced(), comm_declare_send_displaced(), comm_declare_strided_receive_displaced(), comm_declare_strided_send_displaced(), comm_free(), comm_gather_gpuid(), comm_gather_hostname(), comm_init(), comm_query(), comm_start(), and comm_wait().
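The body of MPI_CHECK is not reproduced on this page. Macros of this kind conventionally evaluate the wrapped call once, compare its return code with MPI_SUCCESS, and report an error otherwise; the sketch below shows that pattern under this assumption. So that it compiles without an MPI installation, CHECK_CALL, FAKE_SUCCESS and fake_call() are hypothetical stand-ins for MPI_CHECK, MPI_SUCCESS and an MPI routine, and failures are counted instead of being reported through errorQuda.

```cpp
#include <cassert>
#include <cstdio>

// Hypothetical stand-ins so the sketch compiles without MPI:
// FAKE_SUCCESS plays the role of MPI_SUCCESS, fake_call() that of an MPI routine.
constexpr int FAKE_SUCCESS = 0;
static int failures = 0;  // counted here instead of raising an error

int fake_call(int status) { return status; }

// Evaluate the call exactly once, compare its return code with the success
// value, and report on failure. The do { } while (0) wrapper makes the macro
// expand to a single statement.
#define CHECK_CALL(call)                                            \
  do {                                                              \
    int status_ = (call);                                           \
    if (status_ != FAKE_SUCCESS) {                                  \
      std::fprintf(stderr, "call failed with code %d\n", status_);  \
      ++failures;                                                   \
    }                                                               \
  } while (0)
```

The do { } while (0) wrapper lets the macro be used wherever a single statement is expected, for example as the body of an unbraced if/else.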

Function Documentation

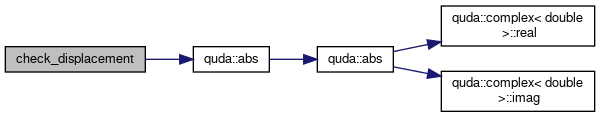

◆ check_displacement()

static void check_displacement(const int displacement[], int ndim)

Definition at line 96 of file comm_mpi.cpp.

References quda::abs(), errorQuda, max_displacement, and ndim.

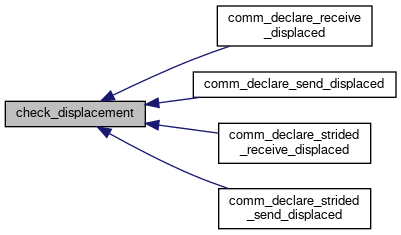

Referenced by comm_declare_receive_displaced(), comm_declare_send_displaced(), comm_declare_strided_receive_displaced(), and comm_declare_strided_send_displaced().
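check_displacement() references quda::abs(), errorQuda, max_displacement and ndim, which suggests it rejects any displacement with a component whose magnitude exceeds max_displacement. A minimal sketch of that check, assuming the bound is inclusive (|d| ≤ 4 is accepted) and returning false where the real function presumably raises errorQuda; displacement_ok is a hypothetical name:

```cpp
#include <cassert>
#include <cstdlib>

// Same value as documented for max_displacement in comm_mpi.cpp.
static const int max_displacement = 4;

// Hypothetical stand-in for check_displacement(): returns false if any of the
// first ndim components of displacement[] has magnitude above max_displacement.
bool displacement_ok(const int displacement[], int ndim)
{
  for (int i = 0; i < ndim; i++) {
    if (std::abs(displacement[i]) > max_displacement) return false;
  }
  return true;
}
```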

◆ comm_abort()

void comm_abort(int status)

Definition at line 328 of file comm_mpi.cpp.

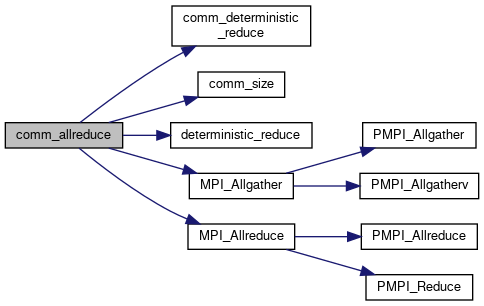

◆ comm_allreduce()

void comm_allreduce(double *data)

Definition at line 242 of file comm_mpi.cpp.

References comm_deterministic_reduce(), comm_size(), deterministic_reduce(), host_free, MPI_Allgather(), MPI_Allreduce(), MPI_CHECK, and safe_malloc.
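The References list suggests two paths: a plain MPI_Allreduce(), and a gather-based one using MPI_Allgather() followed by deterministic_reduce(), presumably selected by comm_deterministic_reduce(). The reason for the second path is that floating-point addition is not associative, and the order in which MPI_Allreduce() combines contributions is implementation-dependent. The plain C++ sketch below (no MPI; sum_in_order is a hypothetical name) shows that the same three per-rank contributions give different sums in different orders.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Sum per-rank contributions in the order supplied. A reduction that combines
// the same values in a different order can round differently, so the result
// depends on the combination order.
double sum_in_order(const std::vector<double> &contributions)
{
  return std::accumulate(contributions.begin(), contributions.end(), 0.0);
}
```

With {1.0, 1e100, -1e100} the 1.0 is absorbed by 1e100 and the sum is 0.0, while {1e100, -1e100, 1.0} yields 1.0.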

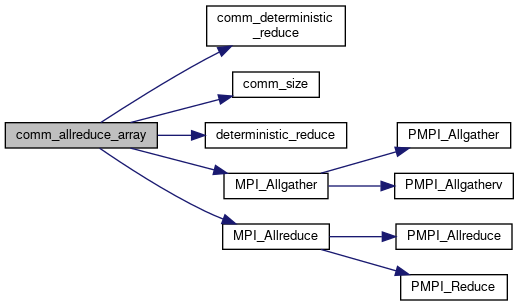

◆ comm_allreduce_array()

void comm_allreduce_array(double *data, size_t size)

Definition at line 272 of file comm_mpi.cpp.

References comm_deterministic_reduce(), comm_size(), deterministic_reduce(), MPI_Allgather(), MPI_Allreduce(), MPI_CHECK, and size.
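For comm_allreduce_array(), the references to MPI_Allgather(), deterministic_reduce() and comm_size() suggest that, in deterministic mode, every rank gathers all comm_size() × size values and reduces each array element separately. The sketch below simulates that element-wise step in plain C++, assuming a single gather of size doubles per rank, which MPI_Allgather places rank-major (rank r's array starts at entry r*size). reduce_gathered is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// gathered holds nranks * size doubles, rank-major: rank r's array starts at r * size.
// Returns the element-wise sum over ranks. Each element's nranks contributions
// are summed in sorted order, so every rank computes bit-identical results.
std::vector<double> reduce_gathered(const std::vector<double> &gathered, int nranks, std::size_t size)
{
  std::vector<double> result(size);
  std::vector<double> column(nranks);
  for (std::size_t i = 0; i < size; i++) {
    for (int r = 0; r < nranks; r++) column[r] = gathered[r * size + i];
    std::sort(column.begin(), column.end());
    result[i] = std::accumulate(column.begin(), column.end(), 0.0);
  }
  return result;
}
```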

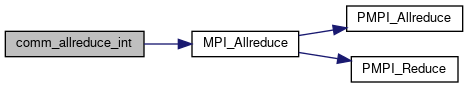

◆ comm_allreduce_int()

void comm_allreduce_int(int *data)

Definition at line 304 of file comm_mpi.cpp.

References MPI_Allreduce(), and MPI_CHECK.

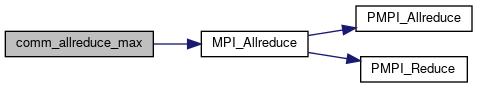

◆ comm_allreduce_max()

void comm_allreduce_max(double *data)

Definition at line 258 of file comm_mpi.cpp.

References MPI_Allreduce(), and MPI_CHECK.

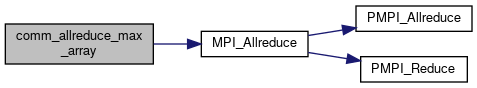

◆ comm_allreduce_max_array()

void comm_allreduce_max_array(double *data, size_t size)

Definition at line 296 of file comm_mpi.cpp.

References MPI_Allreduce(), MPI_CHECK, and size.

◆ comm_allreduce_min()

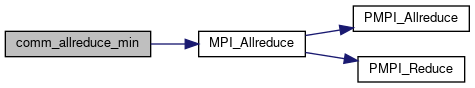

| void comm_allreduce_min | ( | double * | data | ) |

Definition at line 265 of file comm_mpi.cpp.

References MPI_Allreduce(), and MPI_CHECK.

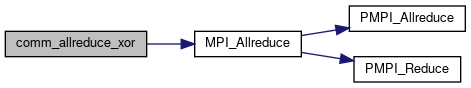

◆ comm_allreduce_xor()

void comm_allreduce_xor(uint64_t *data)

Definition at line 311 of file comm_mpi.cpp.

References errorQuda, MPI_Allreduce(), and MPI_CHECK.
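comm_allreduce_xor() combines a 64-bit word across ranks with bitwise XOR through MPI_Allreduce() (presumably with MPI_BXOR; the errorQuda reference suggests an input guard that is not sketched here). Unlike floating-point addition, XOR is exact, associative and commutative, so no deterministic path is needed. The sketch simulates the reduction over a hypothetical vector of per-rank values; xor_reduce is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Simulated XOR all-reduce: every rank would end up with the XOR of all
// per-rank values. Because XOR is exact, associative and commutative, the
// combination order cannot change the result.
std::uint64_t xor_reduce(const std::vector<std::uint64_t> &per_rank)
{
  std::uint64_t acc = 0;  // 0 is the identity element for XOR
  for (std::uint64_t v : per_rank) acc ^= v;
  return acc;
}
```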

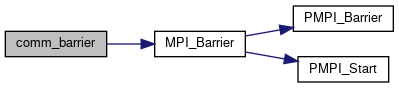

◆ comm_barrier()

void comm_barrier(void)

Definition at line 326 of file comm_mpi.cpp.

References MPI_Barrier(), and MPI_CHECK.

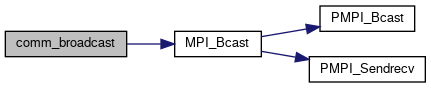

◆ comm_broadcast()

void comm_broadcast(void *data, size_t nbytes)

Broadcast nbytes bytes starting at data from rank 0 to all other ranks.

Definition at line 321 of file comm_mpi.cpp.

References MPI_Bcast(), and MPI_CHECK.

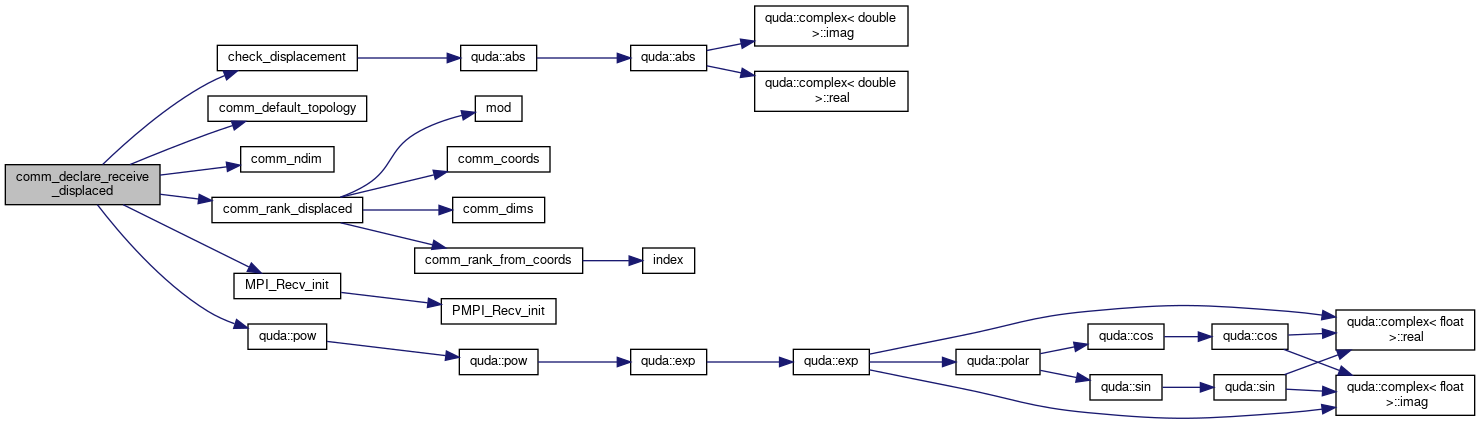

◆ comm_declare_receive_displaced()

MsgHandle* comm_declare_receive_displaced(void *buffer, const int displacement[], size_t nbytes)

Declare a message handle for receiving from the node displaced in (x,y,z,t) by "displacement".

Definition at line 130 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, max_displacement, MPI_CHECK, MPI_Recv_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.

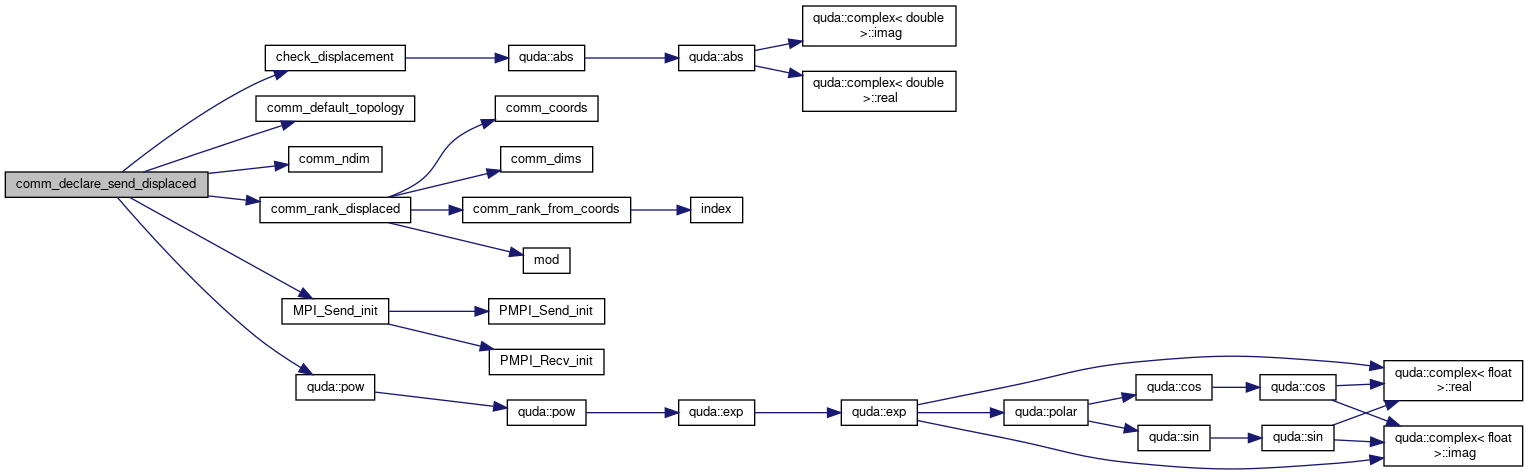

◆ comm_declare_send_displaced()

MsgHandle* comm_declare_send_displaced(void *buffer, const int displacement[], size_t nbytes)

Declare a message handle for sending to the node displaced in (x,y,z,t) by "displacement".

Definition at line 107 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, max_displacement, MPI_CHECK, MPI_Send_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.
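Both comm_declare_send_displaced() and comm_declare_receive_displaced() reference max_displacement and quda::pow(), which suggests the displacement vector is folded into an MPI message tag so that a send and its matching receive agree on it. The exact formula is not shown on this page; the sketch below is one hypothetical encoding (mixed radix with base 2*max_displacement + 1), not QUDA's implementation. Since a rank that sends with displacement d is received by a rank that declares the opposite displacement -d, the receiver encodes the negation of its own displacement. displacement_tag and receive_tag are hypothetical names.

```cpp
#include <cassert>
#include <vector>

static const int max_displacement = 4;

// Hypothetical tag encoding: shift each component from [-max, max] into
// [0, 2*max] and read the digits as a base-(2*max + 1) number, so distinct
// displacement vectors map to distinct non-negative tags.
int displacement_tag(const int displacement[], int ndim)
{
  const int base = 2 * max_displacement + 1;
  int tag = 0;
  for (int i = ndim - 1; i >= 0; i--) tag = tag * base + (displacement[i] + max_displacement);
  return tag;
}

// Tag a receiver would use: the matching send was declared with the opposite
// displacement, so encode the negated vector.
int receive_tag(const int displacement[], int ndim)
{
  std::vector<int> negated(displacement, displacement + ndim);
  for (int &x : negated) x = -x;
  return displacement_tag(negated.data(), ndim);
}
```

For ndim = 4 the largest tag in this scheme is 9^4 - 1 = 6560, below the minimum value of MPI_TAG_UB (32767) that the MPI standard guarantees.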

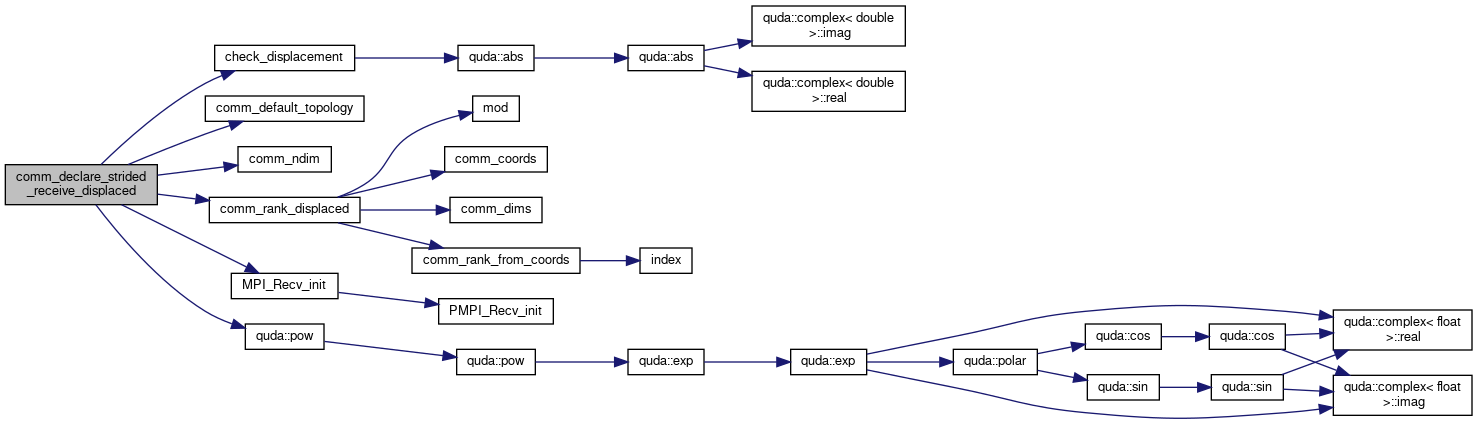

◆ comm_declare_strided_receive_displaced()

MsgHandle* comm_declare_strided_receive_displaced(void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride)

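Declare a message handle for a strided receive from the node displaced in (x,y,z,t) by "displacement". The message consists of nblocks blocks of blksize bytes, with consecutive blocks starting stride bytes apart.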

Definition at line 182 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, MsgHandle_s::datatype, max_displacement, MPI_CHECK, MPI_Recv_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.

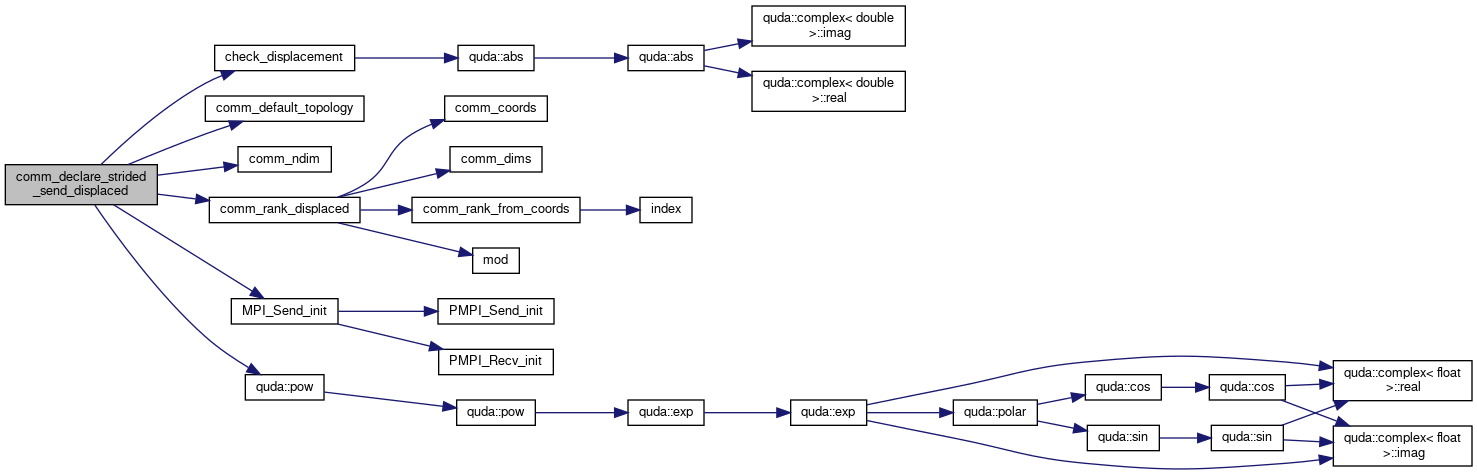

◆ comm_declare_strided_send_displaced()

MsgHandle* comm_declare_strided_send_displaced(void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride)

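Declare a message handle for a strided send to the node displaced in (x,y,z,t) by "displacement". The message consists of nblocks blocks of blksize bytes, with consecutive blocks starting stride bytes apart.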

Definition at line 153 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, MsgHandle_s::datatype, max_displacement, MPI_CHECK, MPI_Send_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.
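The strided variants take blksize, nblocks and stride instead of a byte count and reference MsgHandle_s::datatype, which suggests the message is described by a derived MPI datatype of the kind MPI_Type_vector builds. To show which bytes such a message covers, the plain C++ sketch below packs the strided region into a contiguous buffer; pack_strided is a hypothetical helper. With a derived datatype, MPI handles this gathering itself, so user code needs no explicit copy.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Copy nblocks blocks of blksize bytes, each starting stride bytes after the
// previous one, from src into a contiguous buffer of nblocks * blksize bytes.
std::vector<unsigned char> pack_strided(const unsigned char *src, std::size_t blksize, int nblocks, std::size_t stride)
{
  std::vector<unsigned char> packed(blksize * nblocks);
  for (int b = 0; b < nblocks; b++) std::memcpy(packed.data() + b * blksize, src + b * stride, blksize);
  return packed;
}
```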

◆ comm_free()

void comm_free(MsgHandle *&mh)

Definition at line 207 of file comm_mpi.cpp.

References MsgHandle_s::custom, MsgHandle_s::datatype, host_free, MPI_CHECK, and MsgHandle_s::request.

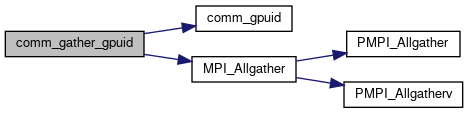

◆ comm_gather_gpuid()

void comm_gather_gpuid(int *gpuid_recv_buf)

Gather all GPU ids.

Parameters
- [out] gpuid_recv_buf: int array of length comm_size() that will be filled with the GPU ids of all processes, in rank order.

Definition at line 53 of file comm_mpi.cpp.

References comm_gpuid(), gpuid, MPI_Allgather(), and MPI_CHECK.

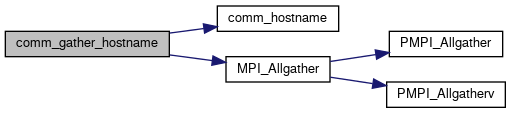

◆ comm_gather_hostname()

void comm_gather_hostname(char *hostname_recv_buf)

Gather all hostnames.

Parameters
- [out] hostname_recv_buf: char array of length 128*comm_size() that will be filled with the hostnames of all processes, in rank order, using 128 bytes per hostname.

Definition at line 47 of file comm_mpi.cpp.

References comm_hostname(), MPI_Allgather(), and MPI_CHECK.
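The receive buffers of comm_gather_hostname() and comm_gather_gpuid() are both indexed by rank: rank r's hostname starts at byte 128*r, and its GPU id is element r. As an illustration of reading them together, the sketch below checks whether two ranks on the same host report the same GPU id. The function name and this use case are hypothetical; the code is plain C++ working on already-gathered buffers.

```cpp
#include <cassert>
#include <cstring>

// Returns true if two ranks with the same hostname also report the same GPU id.
// hostnames: nranks slots of 128 bytes, in rank order (comm_gather_hostname layout);
// gpuids: nranks ints, in rank order (comm_gather_gpuid layout).
bool gpu_shared_on_host(const char *hostnames, const int *gpuids, int nranks)
{
  const int slot = 128;  // bytes per hostname, as documented
  for (int a = 0; a < nranks; a++)
    for (int b = a + 1; b < nranks; b++)
      if (std::strncmp(hostnames + a * slot, hostnames + b * slot, slot) == 0 && gpuids[a] == gpuids[b])
        return true;
  return false;
}
```

Comparing with strncmp bounded by the slot size keeps the comparison inside each 128-byte entry.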

◆ comm_init()

void comm_init(int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data)

Initialize the communications layer. Implemented in comm_single.cpp, comm_qmp.cpp, and comm_mpi.cpp; this file provides the MPI version.

Definition at line 58 of file comm_mpi.cpp.

References comm_init_common(), errorQuda, initialized, MPI_CHECK, ndim, rank, and size.

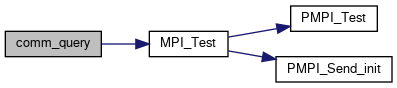

◆ comm_query()

int comm_query(MsgHandle *mh)

Definition at line 228 of file comm_mpi.cpp.

References MPI_CHECK, MPI_Test(), and MsgHandle_s::request.

◆ comm_rank()

int comm_rank(void)

◆ comm_size()

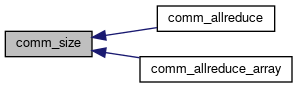

| int comm_size | ( | void | ) |

Returns
- The number of processes.

Definition at line 88 of file comm_mpi.cpp.

References size.

Referenced by comm_allreduce(), and comm_allreduce_array().

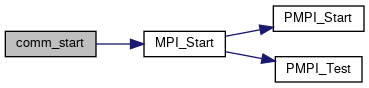

◆ comm_start()

void comm_start(MsgHandle *mh)

Definition at line 216 of file comm_mpi.cpp.

References MPI_CHECK, MPI_Start(), and MsgHandle_s::request.

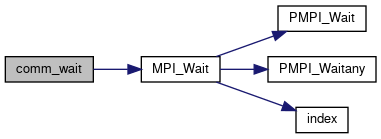

◆ comm_wait()

void comm_wait(MsgHandle *mh)

Definition at line 222 of file comm_mpi.cpp.

References MPI_CHECK, MPI_Wait(), and MsgHandle_s::request.

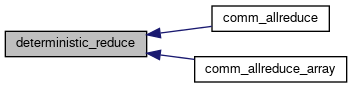

◆ deterministic_reduce()

template<typename T> T deterministic_reduce(T *array, int n)

Definition at line 236 of file comm_mpi.cpp.

Referenced by comm_allreduce(), and comm_allreduce_array().
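The body of deterministic_reduce() is not shown here. Given that the file includes <algorithm> and <numeric>, a plausible reading is that it sorts the gathered values and then accumulates them; the sketch below assumes exactly that, and deterministic_reduce_sketch is a hypothetical name. Because every rank sorts the same gathered array, all ranks perform the same additions in the same order and obtain bit-identical results.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>

// Sketch: sort the n values into ascending order, then sum left to right.
// Any permutation of the same values produces the same sequence of additions,
// hence the same rounded result. The input array is reordered in place.
template <typename T> T deterministic_reduce_sketch(T *array, int n)
{
  std::sort(array, array + n);
  return std::accumulate(array, array + n, T(0));
}
```

Sorting buys reproducibility, not accuracy: for {1.0, 1e100, -1e100} every ordering reduces to 0.0, although the exact sum is 1.0.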

Variable Documentation

◆ max_displacement

static const int max_displacement = 4

Definition at line 94 of file comm_mpi.cpp.

Referenced by check_displacement(), comm_declare_receive_displaced(), comm_declare_send_displaced(), comm_declare_strided_receive_displaced(), and comm_declare_strided_send_displaced().

◆ rank

static int rank = -1

Definition at line 44 of file comm_mpi.cpp.

Referenced by comm_coords_from_rank(), comm_create_topology(), comm_declare_receive_displaced(), comm_declare_send_displaced(), comm_declare_strided_receive_displaced(), comm_declare_strided_send_displaced(), comm_init(), comm_rank(), initRand(), kinetic_quda_(), lex_rank_from_coords(), lex_rank_from_coords_t(), lex_rank_from_coords_x(), and MPI_Init().

◆ size

static int size = -1

Definition at line 45 of file comm_mpi.cpp.

Referenced by comm_allreduce_array(), comm_allreduce_max_array(), comm_init(), and comm_size().

1.8.13
