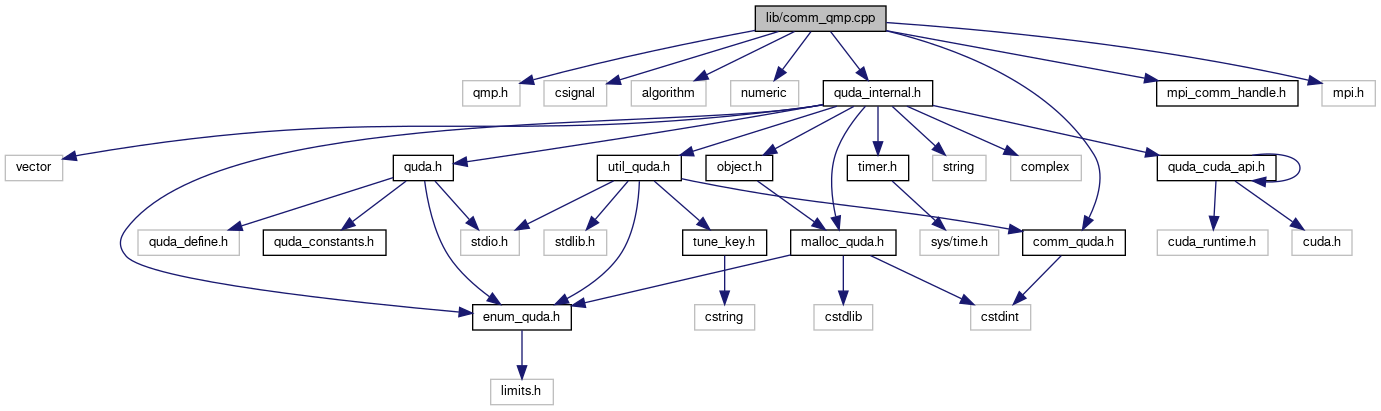

- `#include <qmp.h>`
- `#include <csignal>`
- `#include <algorithm>`
- `#include <numeric>`
- `#include <quda_internal.h>`
- `#include <comm_quda.h>`
- `#include <mpi_comm_handle.h>`
- `#include <mpi.h>`


Classes

| Type | Name |
|---|---|
| struct | MsgHandle_s |

Macros

| Directive | Name |
|---|---|
| #define | `QMP_CHECK(qmp_call)` |
| #define | `MPI_CHECK(mpi_call)` |
| #define | `USE_MPI_GATHER` |

Functions

| Return type | Function | Description |
|---|---|---|
| void | `comm_gather_hostname(char *hostname_recv_buf)` | Gather all hostnames. |
| void | `comm_gather_gpuid(int *gpuid_recv_buf)` | Gather all GPU ids. |
| void | `comm_init(int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data)` | Initialize the communications; implemented in comm_single.cpp, comm_qmp.cpp, and comm_mpi.cpp. |
| int | `comm_rank(void)` | |
| int | `comm_size(void)` | |
| MsgHandle * | `comm_declare_send_displaced(void *buffer, const int displacement[], size_t nbytes)` | |
| MsgHandle * | `comm_declare_receive_displaced(void *buffer, const int displacement[], size_t nbytes)` | |
| MsgHandle * | `comm_declare_strided_send_displaced(void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride)` | |
| MsgHandle * | `comm_declare_strided_receive_displaced(void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride)` | |
| void | `comm_free(MsgHandle *&mh)` | |
| void | `comm_start(MsgHandle *mh)` | |
| void | `comm_wait(MsgHandle *mh)` | |
| int | `comm_query(MsgHandle *mh)` | |
| `template<typename T>` T | `deterministic_reduce(T *array, int n)` | |
| void | `comm_allreduce(double *data)` | |
| void | `comm_allreduce_max(double *data)` | |
| void | `comm_allreduce_min(double *data)` | |
| void | `comm_allreduce_array(double *data, size_t size)` | |
| void | `comm_allreduce_max_array(double *data, size_t size)` | |
| void | `comm_allreduce_int(int *data)` | |
| void | `comm_allreduce_xor(uint64_t *data)` | |
| void | `comm_broadcast(void *data, size_t nbytes)` | |
| void | `comm_barrier(void)` | |
| void | `comm_abort(int status)` | |

Macro Definition Documentation

◆ MPI_CHECK

`#define MPI_CHECK(mpi_call)`

Definition at line 15 of file comm_qmp.cpp.

Referenced by comm_allreduce(), comm_allreduce_array(), comm_gather_gpuid(), and comm_gather_hostname().

◆ QMP_CHECK

`#define QMP_CHECK(qmp_call)`

Definition at line 9 of file comm_qmp.cpp.

Referenced by comm_allreduce(), comm_allreduce_array(), comm_allreduce_int(), comm_allreduce_max(), comm_allreduce_max_array(), comm_allreduce_min(), comm_allreduce_xor(), comm_barrier(), comm_broadcast(), comm_start(), and comm_wait().
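The macro bodies are not reproduced on this page. Error-checking wrappers such as QMP_CHECK and MPI_CHECK are commonly written as a `do { ... } while (0)` macro that evaluates the wrapped call once, compares the returned status with the library's success code, and reports an error otherwise. The sketch below shows that pattern only; `Status`, `STATUS_SUCCESS`, `status_string()` and `STATUS_CHECK` are hypothetical stand-ins, and throwing `std::runtime_error` stands in for QUDA's errorQuda. It is not the definition used in comm_qmp.cpp.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical status type standing in for QMP_status_t or MPI's int return codes.
enum Status { STATUS_SUCCESS = 0, STATUS_ERROR = 1 };

// Hypothetical helper that turns a status into a readable message.
inline const char *status_string(Status s) { return s == STATUS_SUCCESS ? "success" : "generic error"; }

// do { ... } while (0) makes the macro behave like a single statement,
// so it is safe inside an unbraced if/else. The call is evaluated exactly once.
#define STATUS_CHECK(call)                                                        \
  do {                                                                            \
    Status status_ = (call);                                                      \
    if (status_ != STATUS_SUCCESS)                                                \
      throw std::runtime_error(std::string("(check) ") + status_string(status_)); \
  } while (0)

// Hypothetical wrapped calls used to exercise the macro.
inline Status ok_call() { return STATUS_SUCCESS; }
inline Status failing_call() { return STATUS_ERROR; }
```

Wrapping every library call this way keeps call sites to one line while still surfacing the library's own error text.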

◆ USE_MPI_GATHER

`#define USE_MPI_GATHER`

Definition at line 35 of file comm_qmp.cpp.

Function Documentation

◆ comm_abort()

`void comm_abort(int status)`

Definition at line 306 of file comm_qmp.cpp.

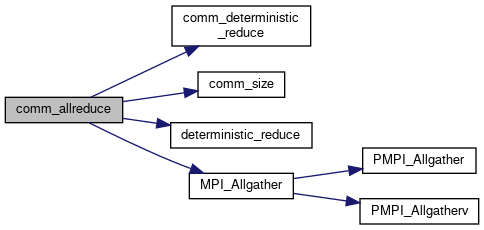

◆ comm_allreduce()

`void comm_allreduce(double *data)`

Definition at line 230 of file comm_qmp.cpp.

References comm_deterministic_reduce(), comm_size(), deterministic_reduce(), host_free, MPI_Allgather(), MPI_CHECK, QMP_CHECK, and safe_malloc.

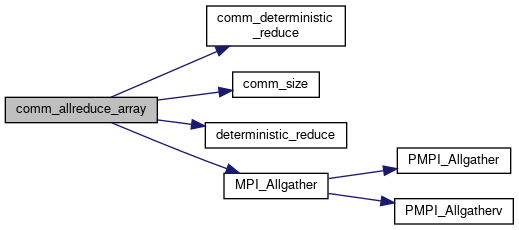

◆ comm_allreduce_array()

`void comm_allreduce_array(double *data, size_t size)`

Definition at line 256 of file comm_qmp.cpp.

References comm_deterministic_reduce(), comm_size(), deterministic_reduce(), MPI_Allgather(), MPI_CHECK, QMP_CHECK, and size.
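The references above (comm_deterministic_reduce(), MPI_Allgather(), deterministic_reduce()) suggest that, when deterministic reduction is enabled, every rank gathers all ranks' arrays and then reduces each element locally in a fixed order. The sketch below models only that local step, assuming an MPI_Allgather-style receive buffer in which rank r's `size` values occupy entries `[r*size, (r+1)*size)`. The helper name `reduce_gathered_arrays` is hypothetical, and sorting each element's contributions before summing is an assumed way of fixing the order.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: element-wise sum over nranks gathered arrays.
// gathered holds nranks * size doubles laid out rank-major, as an allgather produces:
//   gathered[r * size + j] is element j contributed by rank r.
// The reduced value of element j is written to data[j].
void reduce_gathered_arrays(const std::vector<double> &gathered, std::size_t nranks,
                            std::size_t size, double *data)
{
  std::vector<double> column(nranks);
  for (std::size_t j = 0; j < size; j++) {
    // Transpose: collect every rank's contribution to element j.
    for (std::size_t r = 0; r < nranks; r++) column[r] = gathered[r * size + j];
    // Sort so the summation order does not depend on which rank sent what.
    std::sort(column.begin(), column.end());
    data[j] = std::accumulate(column.begin(), column.end(), 0.0);
  }
}
```

Because every rank runs the same sort-then-sum on the same gathered data, all ranks obtain bit-identical results, at the cost of moving `size * comm_size()` values instead of `size`.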

◆ comm_allreduce_int()

`void comm_allreduce_int(int *data)`

Definition at line 283 of file comm_qmp.cpp.

References QMP_CHECK.

◆ comm_allreduce_max()

`void comm_allreduce_max(double *data)`

Definition at line 245 of file comm_qmp.cpp.

References QMP_CHECK.

◆ comm_allreduce_max_array()

`void comm_allreduce_max_array(double *data, size_t size)`

Definition at line 278 of file comm_qmp.cpp.

◆ comm_allreduce_min()

`void comm_allreduce_min(double *data)`

Definition at line 250 of file comm_qmp.cpp.

References QMP_CHECK.

◆ comm_allreduce_xor()

`void comm_allreduce_xor(uint64_t *data)`

Definition at line 288 of file comm_qmp.cpp.
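This page lists no references for comm_allreduce_xor(), and in particular no deterministic-reduction path like the one comm_allreduce() has. Bitwise XOR on integers is exact, associative and commutative, so it needs no such path: any combination order yields the same bits. A local model of what an XOR allreduce computes, with `values` a hypothetical stand-in for the one input per rank:

```cpp
#include <cstdint>
#include <functional>
#include <numeric>
#include <vector>

// Hypothetical local model of an XOR allreduce: every rank ends up with
// the XOR of all ranks' inputs. Integer XOR is exact, so the order in which
// the values are combined cannot change the result.
std::uint64_t xor_allreduce_model(const std::vector<std::uint64_t> &values)
{
  return std::accumulate(values.begin(), values.end(), std::uint64_t{0},
                         std::bit_xor<std::uint64_t>());
}
```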

◆ comm_barrier()

`void comm_barrier(void)`

Definition at line 300 of file comm_qmp.cpp.

References QMP_CHECK.

◆ comm_broadcast()

`void comm_broadcast(void *data, size_t nbytes)`

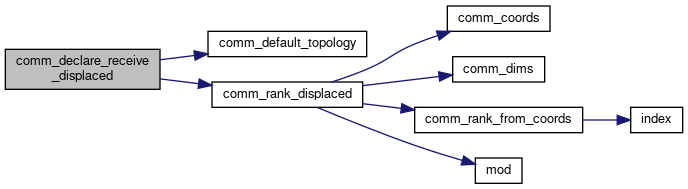

◆ comm_declare_receive_displaced()

`MsgHandle* comm_declare_receive_displaced(void *buffer, const int displacement[], size_t nbytes)`

Declare a message handle for receiving from the node displaced in (x,y,z,t) by `displacement`.

Definition at line 139 of file comm_qmp.cpp.

References comm_default_topology(), comm_rank_displaced(), errorQuda, MsgHandle_s::handle, MsgHandle_s::mem, rank, and safe_malloc.

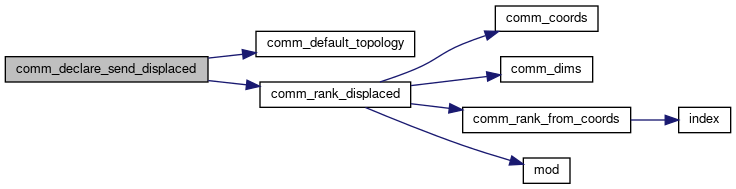

◆ comm_declare_send_displaced()

`MsgHandle* comm_declare_send_displaced(void *buffer, const int displacement[], size_t nbytes)`

Declare a message handle for sending to the node displaced in (x,y,z,t) by `displacement`.

Definition at line 120 of file comm_qmp.cpp.

References comm_default_topology(), comm_rank_displaced(), errorQuda, MsgHandle_s::handle, MsgHandle_s::mem, rank, and safe_malloc.
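Both displaced declarations locate their peer through comm_rank_displaced() and the default topology. A sketch of the coordinate arithmetic this implies, assuming a periodic (toroidal) 4-d process grid: the peer's coordinates are this rank's coordinates plus `displacement`, wrapped modulo the grid extent in each dimension. `displaced_coords` is a hypothetical helper, not the QUDA function. Under this model a send to `+d` is matched by the peer declaring a receive from `-d`.

```cpp
#include <array>

constexpr int kDims = 4;  // (x, y, z, t)
using Coords = std::array<int, kDims>;

// Hypothetical helper: coordinates of the rank displaced from `coords` by
// `displacement` on a periodic process grid of extent `dims`.
Coords displaced_coords(const Coords &coords, const Coords &displacement, const Coords &dims)
{
  Coords out{};
  for (int mu = 0; mu < kDims; mu++) {
    // C++ % can return a negative value, so add dims[mu] once more
    // to keep the result in [0, dims[mu]) for negative displacements.
    int c = (coords[mu] + displacement[mu]) % dims[mu];
    out[mu] = (c + dims[mu]) % dims[mu];
  }
  return out;
}
```

Mapping the resulting coordinates to a rank number is the job of the topology's rank_from_coords map, which this sketch leaves out.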

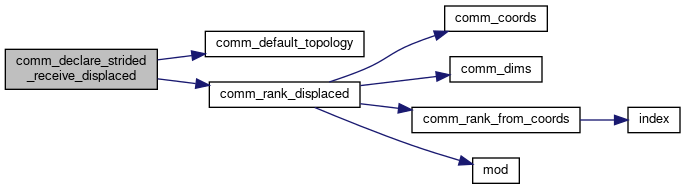

◆ comm_declare_strided_receive_displaced()

`MsgHandle* comm_declare_strided_receive_displaced(void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride)`

Declare a message handle for strided receiving from the node displaced in (x,y,z,t) by `displacement`.

Definition at line 181 of file comm_qmp.cpp.

References comm_default_topology(), comm_rank_displaced(), errorQuda, MsgHandle_s::handle, MsgHandle_s::mem, rank, and safe_malloc.

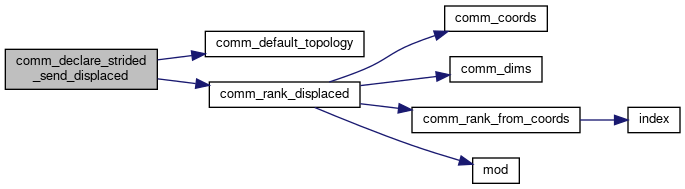

◆ comm_declare_strided_send_displaced()

`MsgHandle* comm_declare_strided_send_displaced(void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride)`

Declare a message handle for strided sending to the node displaced in (x,y,z,t) by `displacement`.

Definition at line 160 of file comm_qmp.cpp.

References comm_default_topology(), comm_rank_displaced(), errorQuda, MsgHandle_s::handle, MsgHandle_s::mem, rank, and safe_malloc.
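Judging from the parameter names, a strided message covers `nblocks` blocks of `blksize` bytes each, with consecutive blocks starting `stride` bytes apart in `buffer`, for `blksize * nblocks` bytes in total. The sketch below only makes that layout concrete by gathering such a region into a contiguous buffer; whether QMP packs the data like this or transfers the strided region directly is not stated here, and `pack_strided` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical helper: copy nblocks blocks of blksize bytes, taken every
// `stride` bytes from src, into the contiguous buffer dst
// (which must hold at least blksize * nblocks bytes).
void pack_strided(void *dst, const void *src, std::size_t blksize, int nblocks, std::size_t stride)
{
  assert(stride >= blksize);  // blocks must not overlap
  auto *out = static_cast<unsigned char *>(dst);
  const auto *in = static_cast<const unsigned char *>(src);
  for (int b = 0; b < nblocks; b++)
    std::memcpy(out + b * blksize, in + b * stride, blksize);
}
```

For example, with `blksize = 2`, `nblocks = 3` and `stride = 4`, bytes 0-1, 4-5 and 8-9 of the source form the 6-byte message.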

◆ comm_free()

`void comm_free(MsgHandle *&mh)`

Definition at line 198 of file comm_qmp.cpp.

References MsgHandle_s::handle, host_free, and MsgHandle_s::mem.
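comm_free() takes its handle as a reference to a pointer (`MsgHandle *&`), which allows it to modify the caller's own pointer, for instance resetting it to null after releasing the handle and its message memory so a stale handle cannot be reused by mistake. This page does not show whether comm_qmp.cpp does so; the sketch illustrates the idiom with a hypothetical `Handle` type and `free_handle()` function.

```cpp
#include <cstdlib>

// Hypothetical handle type standing in for MsgHandle_s.
struct Handle {
  void *mem;  // stand-in for the message memory owned by the handle
};

// Taking Handle *& (reference to pointer) lets the function null out the
// caller's variable, not just a local copy of the pointer.
void free_handle(Handle *&h)
{
  if (!h) return;  // freeing an already-freed handle is a no-op here
  std::free(h->mem);
  delete h;
  h = nullptr;
}
```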

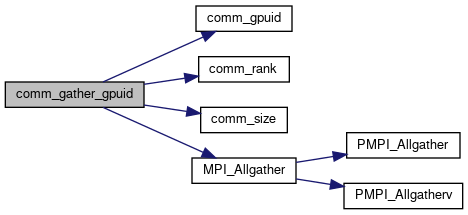

◆ comm_gather_gpuid()

`void comm_gather_gpuid(int *gpuid_recv_buf)`

Gather all GPU ids.

Parameters

- [out] gpuid_recv_buf: int array of length comm_size() that will be filled with the GPU ids of all processes, in rank order.

Definition at line 70 of file comm_qmp.cpp.

References comm_gpuid(), comm_rank(), comm_size(), gpuid, MPI_Allgather(), and MPI_CHECK.
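On return, `gpuid_recv_buf[r]` holds the GPU id reported by rank r. As an illustration of consuming the gathered array, the hypothetical helper below lists the ranks that reported a given id. GPU ids are presumably local to each node, so ranks with equal ids may still sit on different hosts.

```cpp
#include <vector>

// Hypothetical helper: ranks whose entry in the gathered GPU-id array
// (length nranks, indexed by rank) equals `gpuid`.
std::vector<int> ranks_with_gpuid(const int *gpuid_recv_buf, int nranks, int gpuid)
{
  std::vector<int> ranks;
  for (int r = 0; r < nranks; r++)
    if (gpuid_recv_buf[r] == gpuid) ranks.push_back(r);
  return ranks;
}
```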

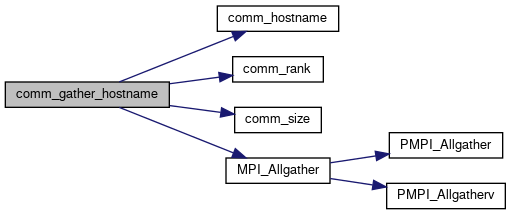

◆ comm_gather_hostname()

`void comm_gather_hostname(char *hostname_recv_buf)`

Gather all hostnames.

Parameters

- [out] hostname_recv_buf: char array of length 128 * comm_size() that will be filled with the hostnames of all processes, in rank order, with 128 bytes per hostname.

Definition at line 44 of file comm_qmp.cpp.

References comm_hostname(), comm_rank(), comm_size(), MPI_Allgather(), and MPI_CHECK.
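Since each rank's hostname occupies a fixed 128-byte slot, rank r's entry starts at `hostname_recv_buf + 128 * r`. The sketch below, with hypothetical helper names, reads one slot without running past its end (it assumes, without this page confirming it, that shorter names are NUL-terminated within the slot) and compares the hosts of two ranks.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>

constexpr std::size_t kHostnameSlot = 128;  // bytes per rank, per the parameter description

// Hypothetical helper: hostname of `rank` from the gathered buffer.
// Stops at the first NUL or at the end of the 128-byte slot, whichever comes first.
std::string hostname_of(const char *hostname_recv_buf, int rank)
{
  const char *slot = hostname_recv_buf + kHostnameSlot * rank;
  const char *end = std::find(slot, slot + kHostnameSlot, '\0');
  return std::string(slot, end);
}

// Hypothetical helper: true if two ranks reported the same hostname.
bool same_host(const char *hostname_recv_buf, int rank_a, int rank_b)
{
  return hostname_of(hostname_recv_buf, rank_a) == hostname_of(hostname_recv_buf, rank_b);
}
```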

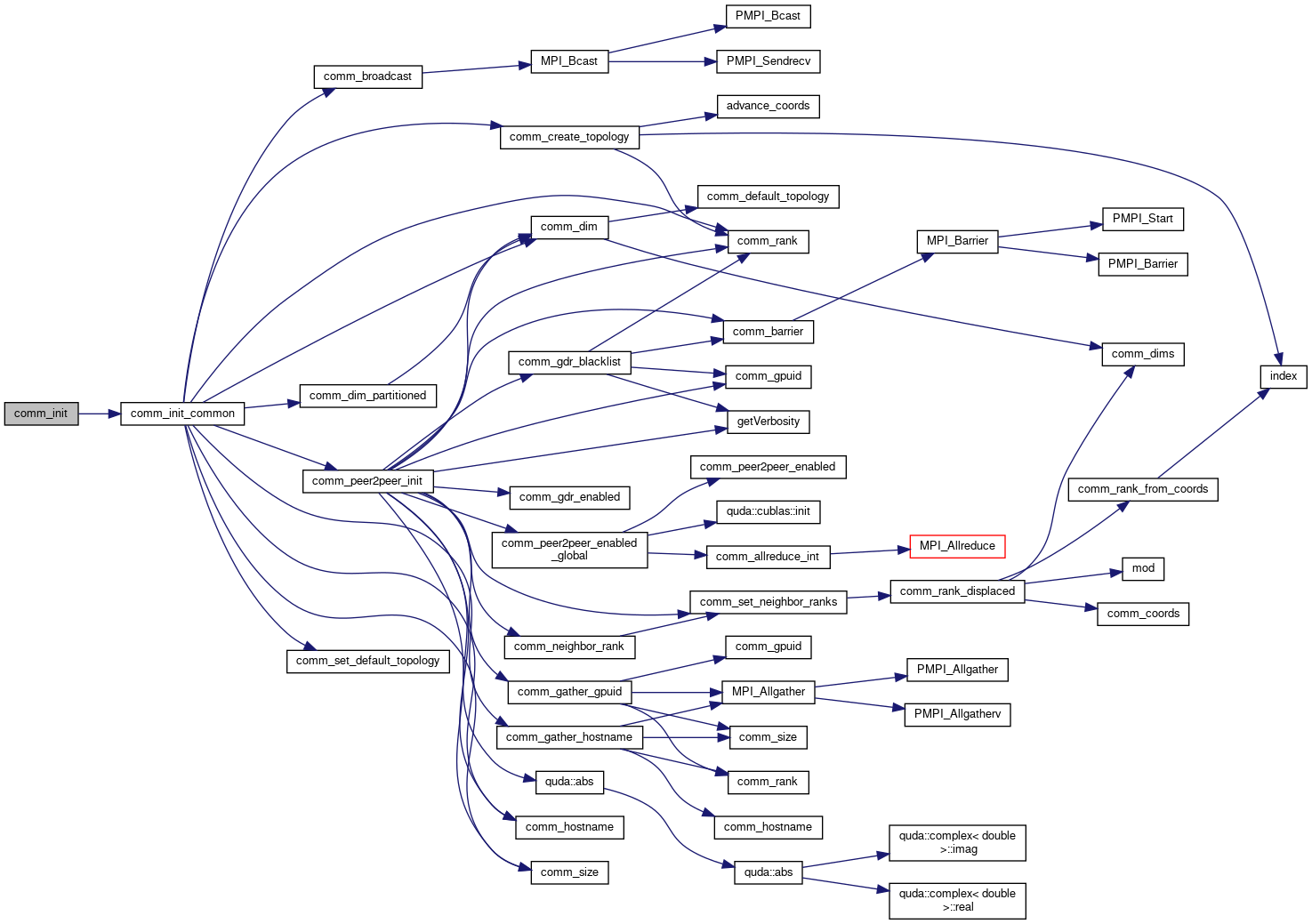

◆ comm_init()

`void comm_init(int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data)`

Initialize the communications. This function is implemented separately in comm_single.cpp, comm_qmp.cpp, and comm_mpi.cpp.

Definition at line 87 of file comm_qmp.cpp.

References comm_init_common(), errorQuda, and ndim.
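comm_init() takes a `rank_from_coords` callback together with an opaque `map_data` pointer. The sketch assumes that QudaCommsMap has the signature `int (*)(const int *coords, void *fdata)` (redeclared locally as `CommsMap` so the example compiles on its own) and shows a hypothetical lexicographic map in which x varies fastest, with the grid extents passed through the data pointer.

```cpp
// Local stand-in for QudaCommsMap (assumed signature).
typedef int (*CommsMap)(const int *coords, void *fdata);

// Hypothetical lexicographic map for a 4-d grid with x varying fastest:
//   rank = ((t * Z + z) * Y + y) * X + x
// fdata points to the grid extents dims[4] = {X, Y, Z, T}.
static int lex_rank_x_fastest(const int *coords, void *fdata)
{
  const int *dims = static_cast<const int *>(fdata);
  int rank = coords[3];
  for (int mu = 2; mu >= 0; mu--) rank = rank * dims[mu] + coords[mu];
  return rank;
}
```

Under these assumptions, such a map would be passed as `comm_init(4, dims, lex_rank_x_fastest, dims)`, with `dims` presumably forwarded to the callback as `fdata`.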

◆ comm_query()

`int comm_query(MsgHandle *mh)`

Definition at line 219 of file comm_qmp.cpp.

References MsgHandle_s::handle.

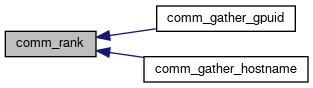

◆ comm_rank()

`int comm_rank(void)`

Returns: the rank id of this process.

Definition at line 105 of file comm_qmp.cpp.

Referenced by comm_gather_gpuid(), and comm_gather_hostname().

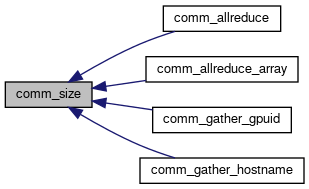

◆ comm_size()

`int comm_size(void)`

Returns: the number of processes.

Definition at line 111 of file comm_qmp.cpp.

Referenced by comm_allreduce(), comm_allreduce_array(), comm_gather_gpuid(), and comm_gather_hostname().

◆ comm_start()

`void comm_start(MsgHandle *mh)`

Definition at line 207 of file comm_qmp.cpp.

References MsgHandle_s::handle, and QMP_CHECK.

◆ comm_wait()

`void comm_wait(MsgHandle *mh)`

Definition at line 213 of file comm_qmp.cpp.

References MsgHandle_s::handle, and QMP_CHECK.

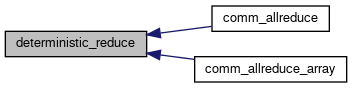

◆ deterministic_reduce()

`template<typename T> T deterministic_reduce(T *array, int n)`

Definition at line 224 of file comm_qmp.cpp.

Referenced by comm_allreduce(), and comm_allreduce_array().
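The file's `<algorithm>` and `<numeric>` includes and the template signature are consistent with a reduction that first puts the inputs into a canonical order and then sums them. A minimal sketch under that assumption (sort ascending, then `std::accumulate`): because floating-point addition is not associative, fixing the order makes the result independent of how the inputs happened to be arranged, for example by rank. Note that this sketch reorders `array` in place.

```cpp
#include <algorithm>
#include <numeric>

// Sketch: sort the n values into ascending order, then sum left to right.
// Any permutation of the same inputs gives a bit-identical result.
// Note: reorders `array` in place.
template <typename T> T deterministic_reduce(T *array, int n)
{
  std::sort(array, array + n);
  return std::accumulate(array, array + n, T(0));
}
```

The price of determinism here is an O(n log n) sort on every call, which is cheap when n is the number of ranks.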

Generated by Doxygen 1.8.13
