#include <cstdint>


Macros

| #define | comm_declare_send_relative(buffer, dim, dir, nbytes) comm_declare_send_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, nbytes) |

| #define | comm_declare_receive_relative(buffer, dim, dir, nbytes) comm_declare_receive_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, nbytes) |

| #define | comm_declare_strided_send_relative(buffer, dim, dir, blksize, nblocks, stride) comm_declare_strided_send_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, blksize, nblocks, stride) |

| #define | comm_declare_strided_receive_relative(buffer, dim, dir, blksize, nblocks, stride) comm_declare_strided_receive_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, blksize, nblocks, stride) |

Typedefs

| typedef struct MsgHandle_s | MsgHandle |

| typedef struct Topology_s | Topology |

| typedef int(* | QudaCommsMap) (const int *coords, void *fdata) |

Functions

| char * | comm_hostname (void) |

| double | comm_drand (void) |

| Topology * | comm_create_topology (int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data) |

| void | comm_destroy_topology (Topology *topo) |

| int | comm_ndim (const Topology *topo) |

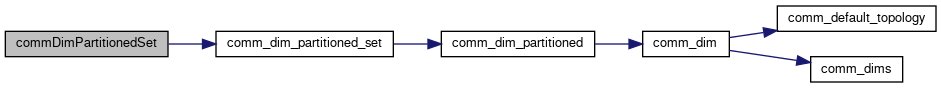

| const int * | comm_dims (const Topology *topo) |

| const int * | comm_coords (const Topology *topo) |

| const int * | comm_coords_from_rank (const Topology *topo, int rank) |

| int | comm_rank_from_coords (const Topology *topo, const int *coords) |

| int | comm_rank_displaced (const Topology *topo, const int displacement[]) |

| void | comm_set_default_topology (Topology *topo) |

| Topology * | comm_default_topology (void) |

| void | comm_set_neighbor_ranks (Topology *topo=NULL) |

| int | comm_neighbor_rank (int dir, int dim) |

| int | comm_dim (int dim) |

| int | comm_coord (int dim) |

| MsgHandle * | comm_declare_send_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t nbytes) |

| MsgHandle * | comm_declare_receive_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t nbytes) |

| MsgHandle * | comm_declare_strided_send_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t blksize, int nblocks, size_t stride) |

| MsgHandle * | comm_declare_strided_receive_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t blksize, int nblocks, size_t stride) |

| void | comm_finalize (void) |

| void | comm_dim_partitioned_set (int dim) |

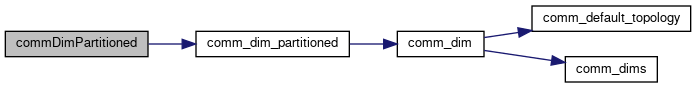

| int | comm_dim_partitioned (int dim) |

| int | comm_partitioned () |

| Loop over comm_dim_partitioned(dim) for all comms dimensions. | |

| void | comm_set_tunekey_string () |

| Create the topology and partition strings that are used in tuneKeys. | |

| const char * | comm_dim_partitioned_string (const int *comm_dim_override=0) |

| Return a string that defines the comm partitioning (used as a tuneKey). | |

| const char * | comm_dim_topology_string () |

| Return a string that defines the comm topology (for use as a tuneKey). | |

| const char * | comm_config_string () |

| Return a string that defines the P2P/GDR environment variable configuration (for use as a tuneKey to enable unique policies). | |

| void | comm_init (int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data) |

| Initialize the communications, implemented in comm_single.cpp, comm_qmp.cpp, and comm_mpi.cpp. | |

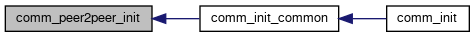

| void | comm_init_common (int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data) |

| Initialize the communications common to all communications abstractions. | |

| int | comm_rank (void) |

| int | comm_size (void) |

| int | comm_gpuid (void) |

| bool | comm_deterministic_reduce () |

| void | comm_gather_hostname (char *hostname_recv_buf) |

| Gather all hostnames. | |

| void | comm_gather_gpuid (int *gpuid_recv_buf) |

| Gather all GPU ids. | |

| void | comm_peer2peer_init (const char *hostname_recv_buf) |

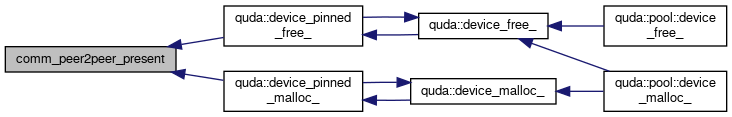

| bool | comm_peer2peer_present () |

| Returns true if any peer-to-peer capability is present on this system (regardless of whether it has been disabled or not). We use this, for example, to determine if we need to allocate pinned device memory or not. | |

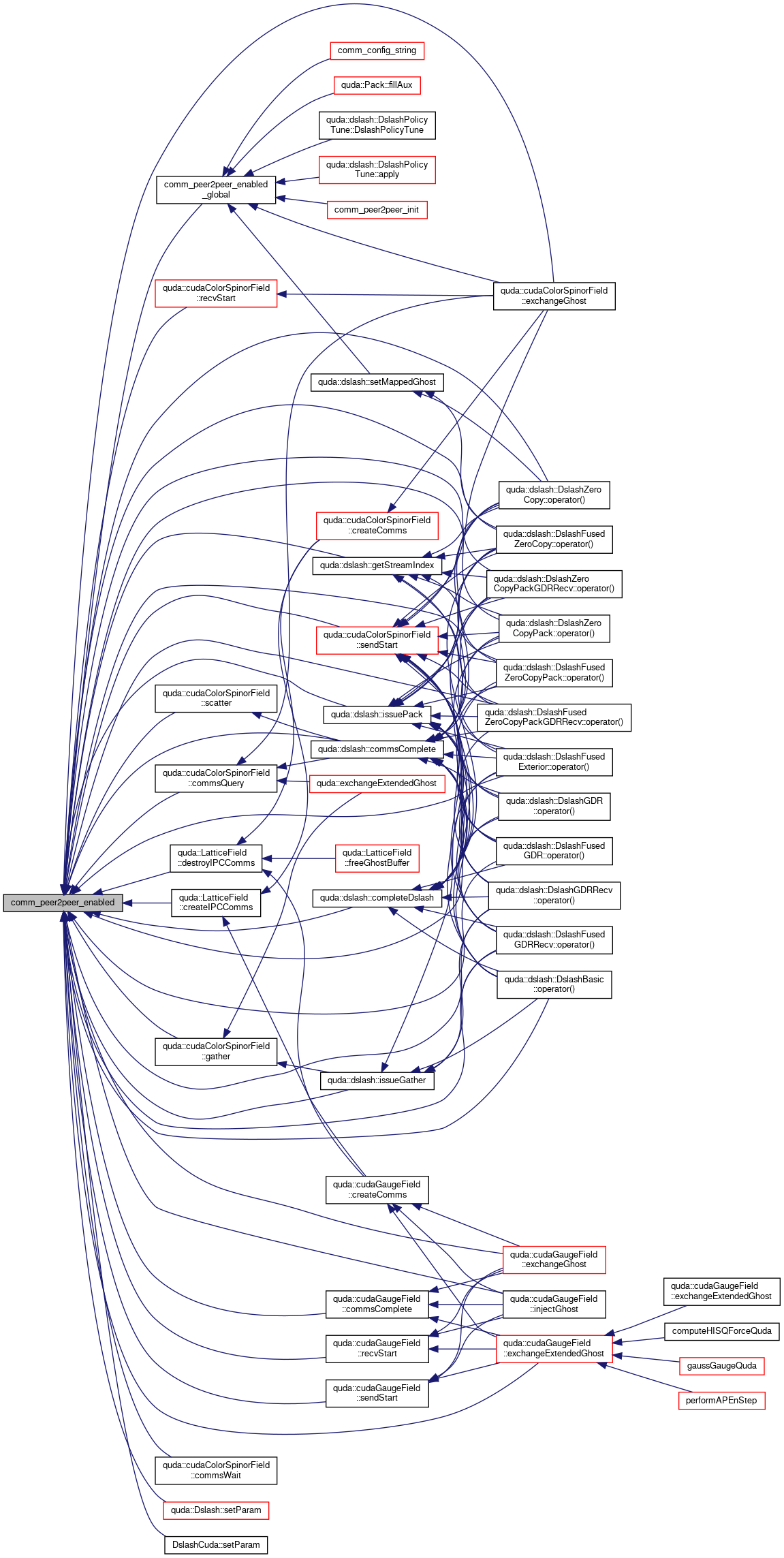

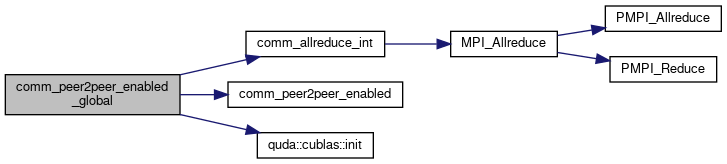

| int | comm_peer2peer_enabled_global () |

| bool | comm_peer2peer_enabled (int dir, int dim) |

| void | comm_enable_peer2peer (bool enable) |

| Enable / disable peer-to-peer communication: used for dslash policies that do not presently support peer-to-peer communication. | |

| bool | comm_intranode_enabled (int dir, int dim) |

| void | comm_enable_intranode (bool enable) |

| Enable / disable intra-node (non-peer-to-peer) communication. | |

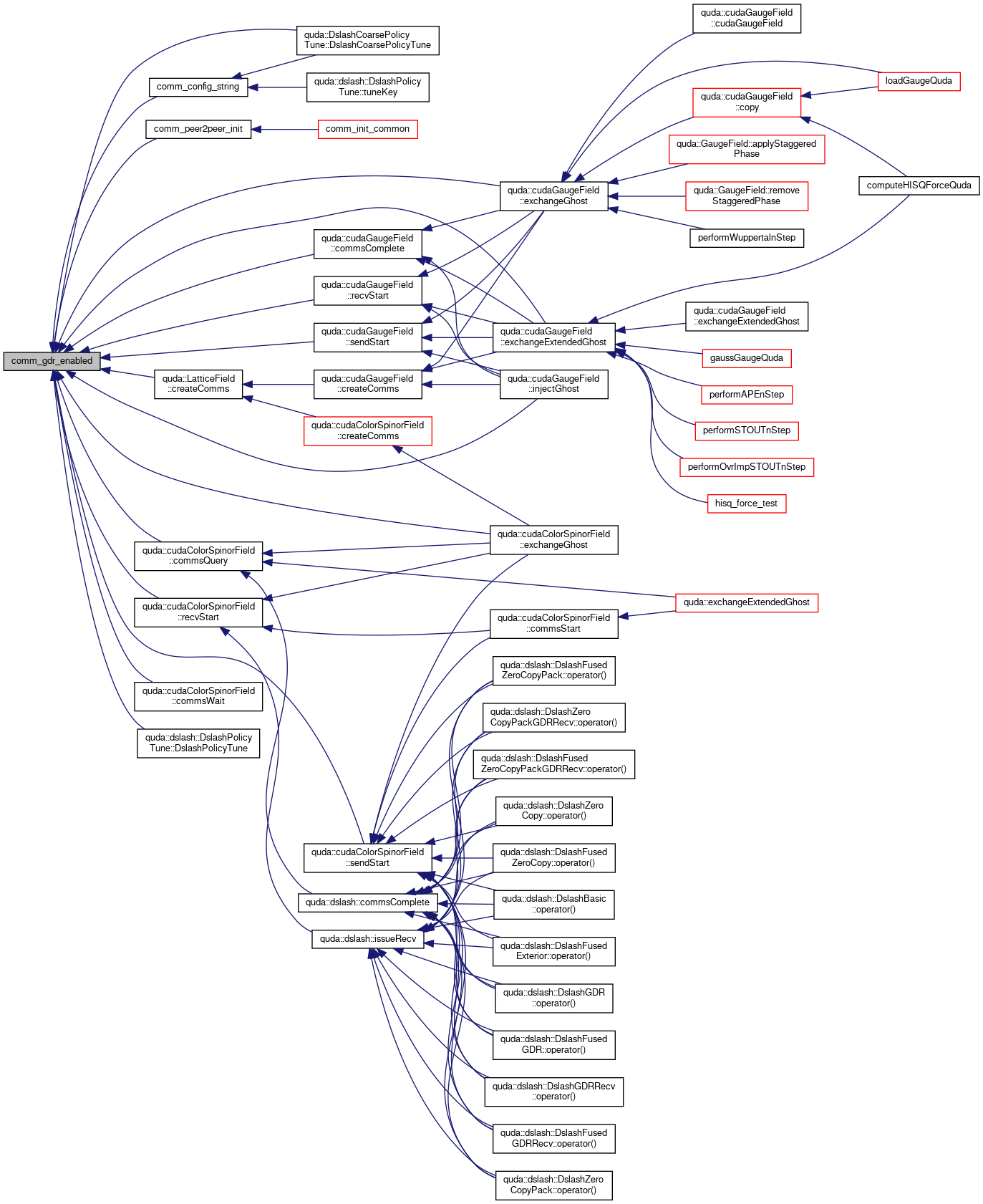

| bool | comm_gdr_enabled () |

| Query if GPU Direct RDMA communication is enabled (global setting). | |

| bool | comm_gdr_blacklist () |

| Query if GPU Direct RDMA communication is blacklisted for this GPU. | |

| MsgHandle * | comm_declare_send_displaced (void *buffer, const int displacement[], size_t nbytes) |

| MsgHandle * | comm_declare_receive_displaced (void *buffer, const int displacement[], size_t nbytes) |

| MsgHandle * | comm_declare_strided_send_displaced (void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride) |

| MsgHandle * | comm_declare_strided_receive_displaced (void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride) |

| void | comm_free (MsgHandle *&mh) |

| void | comm_start (MsgHandle *mh) |

| void | comm_wait (MsgHandle *mh) |

| int | comm_query (MsgHandle *mh) |

| void | comm_allreduce (double *data) |

| void | comm_allreduce_max (double *data) |

| void | comm_allreduce_min (double *data) |

| void | comm_allreduce_array (double *data, size_t size) |

| void | comm_allreduce_max_array (double *data, size_t size) |

| void | comm_allreduce_int (int *data) |

| void | comm_allreduce_xor (uint64_t *data) |

| void | comm_broadcast (void *data, size_t nbytes) |

| void | comm_barrier (void) |

| void | comm_abort (int status) |

| void | reduceMaxDouble (double &) |

| void | reduceDouble (double &) |

| void | reduceDoubleArray (double *, const int len) |

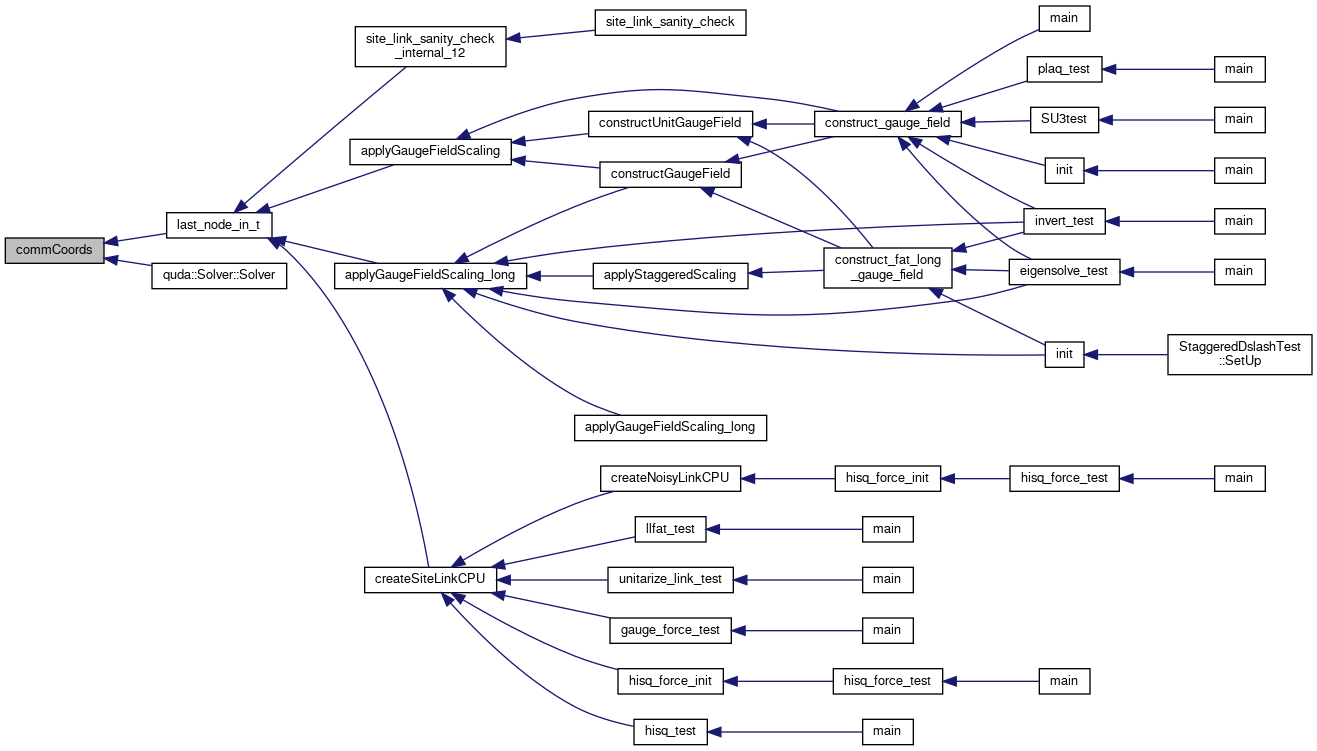

| int | commDim (int) |

| int | commCoords (int) |

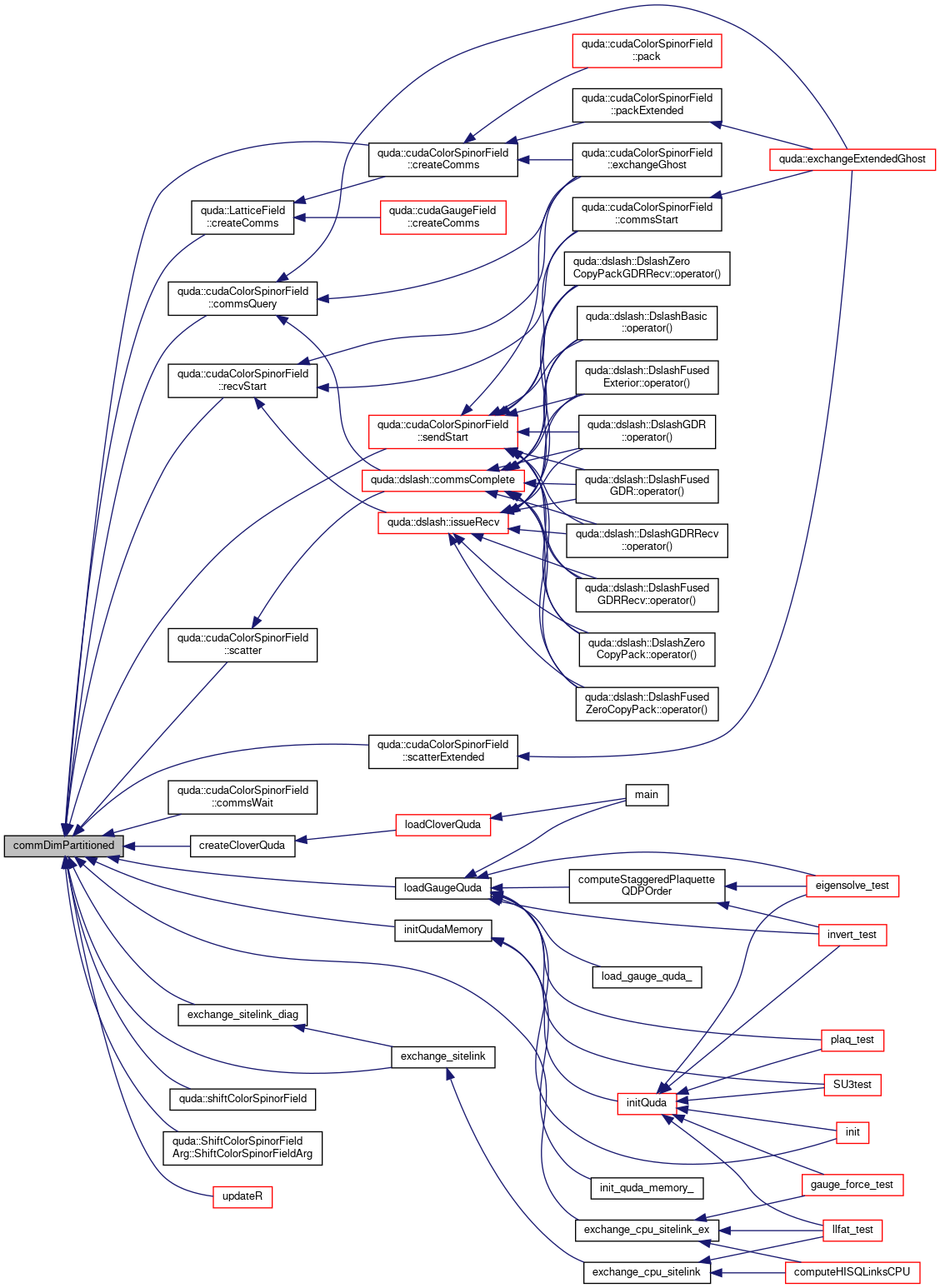

| int | commDimPartitioned (int dir) |

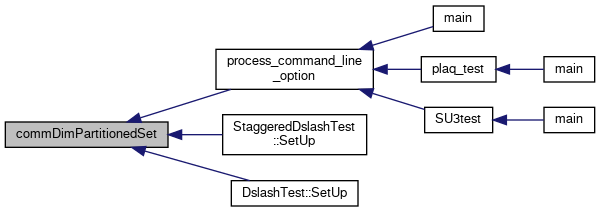

| void | commDimPartitionedSet (int dir) |

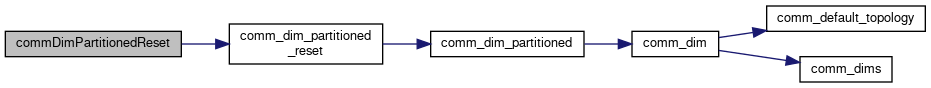

| void | commDimPartitionedReset () |

| Reset the comm dim partitioned array to zero. | |

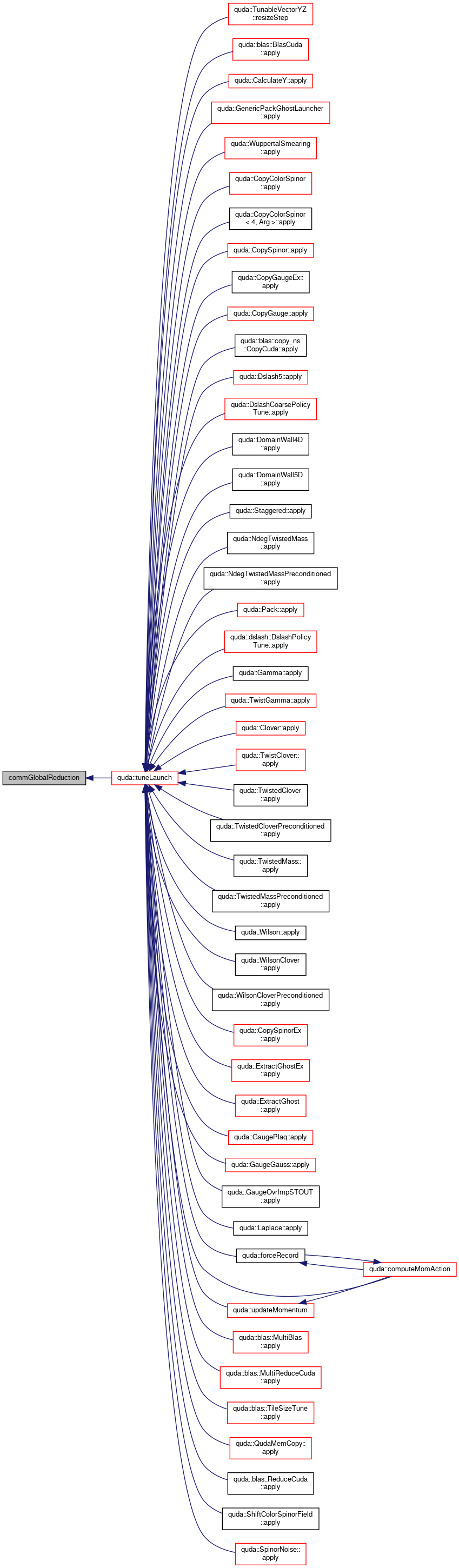

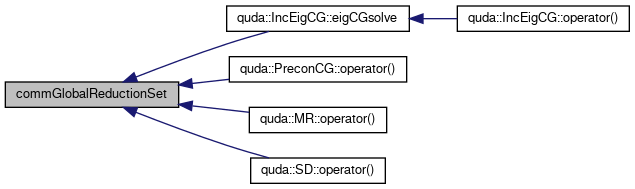

| bool | commGlobalReduction () |

| void | commGlobalReductionSet (bool global_reduce) |

| bool | commAsyncReduction () |

| void | commAsyncReductionSet (bool global_reduce) |

Macro Definition Documentation

◆ comm_declare_receive_relative

| #define | comm_declare_receive_relative(buffer, dim, dir, nbytes) comm_declare_receive_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, nbytes) |

Definition at line 74 of file comm_quda.h.

Referenced by quda::LatticeField::createComms(), quda::LatticeField::createIPCComms(), do_exchange_cpu_staple(), quda::GaugeField::exchange(), quda::ColorSpinorField::exchange(), exchange_cpu_sitelink_ex(), exchange_sitelink(), and quda::cpuGaugeField::exchangeExtendedGhost().

◆ comm_declare_send_relative

| #define | comm_declare_send_relative(buffer, dim, dir, nbytes) comm_declare_send_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, nbytes) |

Definition at line 59 of file comm_quda.h.

Referenced by quda::LatticeField::createComms(), quda::LatticeField::createIPCComms(), do_exchange_cpu_staple(), quda::GaugeField::exchange(), quda::ColorSpinorField::exchange(), exchange_cpu_sitelink_ex(), exchange_sitelink(), and quda::cpuGaugeField::exchangeExtendedGhost().

◆ comm_declare_strided_receive_relative

| #define | comm_declare_strided_receive_relative(buffer, dim, dir, blksize, nblocks, stride) comm_declare_strided_receive_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, blksize, nblocks, stride) |

Definition at line 110 of file comm_quda.h.

◆ comm_declare_strided_send_relative

| #define | comm_declare_strided_send_relative(buffer, dim, dir, blksize, nblocks, stride) comm_declare_strided_send_relative_(__func__, __FILE__, __LINE__, buffer, dim, dir, blksize, nblocks, stride) |

Definition at line 92 of file comm_quda.h.
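The four helper macros above share one pattern: each forwards its arguments to the underscore-suffixed function and prepends `__func__`, `__FILE__`, and `__LINE__`, so the callee knows where the handle was declared. The following is a minimal, self-contained sketch of that pattern. `declare_send_`, `DECLARE_SEND`, and `exchange_halo` are hypothetical stand-ins, not QUDA functions, and the returned string only illustrates what the callee can see.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical stand-in for an underscore-suffixed function such as
// comm_declare_send_relative_(): it receives the call-site information
// explicitly and, in this sketch, simply formats it into a string.
std::string declare_send_(const char *func, const char *file, int line,
                          void *buffer, int dim, int dir, std::size_t nbytes)
{
  assert(buffer != nullptr);
  assert(dir == 0 || dir == 1); // 0 = backwards, 1 = forwards
  return std::string(func) + " (" + file + ":" + std::to_string(line) + ")" +
         " dim=" + std::to_string(dim) + " dir=" + std::to_string(dir) +
         " nbytes=" + std::to_string(nbytes);
}

// Hypothetical helper macro in the style of comm_declare_send_relative():
// __func__, __FILE__ and __LINE__ are expanded at the call site.
#define DECLARE_SEND(buffer, dim, dir, nbytes) \
  declare_send_(__func__, __FILE__, __LINE__, buffer, dim, dir, nbytes)

// Example caller: the returned string begins with this function's name.
std::string exchange_halo()
{
  static char halo[64];
  return DECLARE_SEND(halo, 3, 1, sizeof(halo));
}
```

Because the expansion happens in the caller, any diagnostic produced by the callee can name the caller's function, file, and line rather than its own.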

Typedef Documentation

◆ MsgHandle

| typedef struct MsgHandle_s MsgHandle |

Definition at line 8 of file comm_quda.h.

◆ QudaCommsMap

| typedef int(* QudaCommsMap) (const int *coords, void *fdata) |

Definition at line 12 of file comm_quda.h.
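A `QudaCommsMap` is a callback that turns process-grid coordinates into a rank, with `fdata` carrying whatever context the callback needs. The sketch below shows one possible callback, assuming a lexicographic ordering in which the last coordinate runs fastest. The `GridDims` struct and `lex_rank_from_coords` are hypothetical names introduced for illustration, and the ordering is an assumption rather than QUDA's documented default.

```cpp
#include <cassert>

// Same signature as the QudaCommsMap typedef documented above.
typedef int (*QudaCommsMap)(const int *coords, void *fdata);

// Hypothetical layout descriptor passed through the opaque fdata pointer.
struct GridDims {
  int ndim;
  int dims[4];
};

// Hypothetical map: lexicographic rank with the last coordinate running fastest.
int lex_rank_from_coords(const int *coords, void *fdata)
{
  const GridDims *grid = static_cast<const GridDims *>(fdata);
  int rank = 0;
  for (int i = 0; i < grid->ndim; i++) {
    assert(coords[i] >= 0 && coords[i] < grid->dims[i]);
    rank = rank * grid->dims[i] + coords[i];
  }
  return rank;
}
```

A function of this shape could be passed as `rank_from_coords`, with a pointer to the descriptor as `map_data`, to comm_init() or comm_create_topology().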

◆ Topology

| typedef struct Topology_s Topology |

Definition at line 9 of file comm_quda.h.

Function Documentation

◆ comm_abort()

| void comm_abort | ( | int | status | ) |

Definition at line 328 of file comm_mpi.cpp.

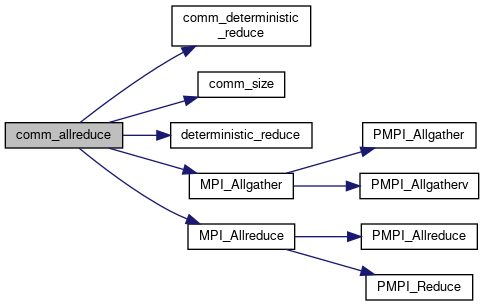

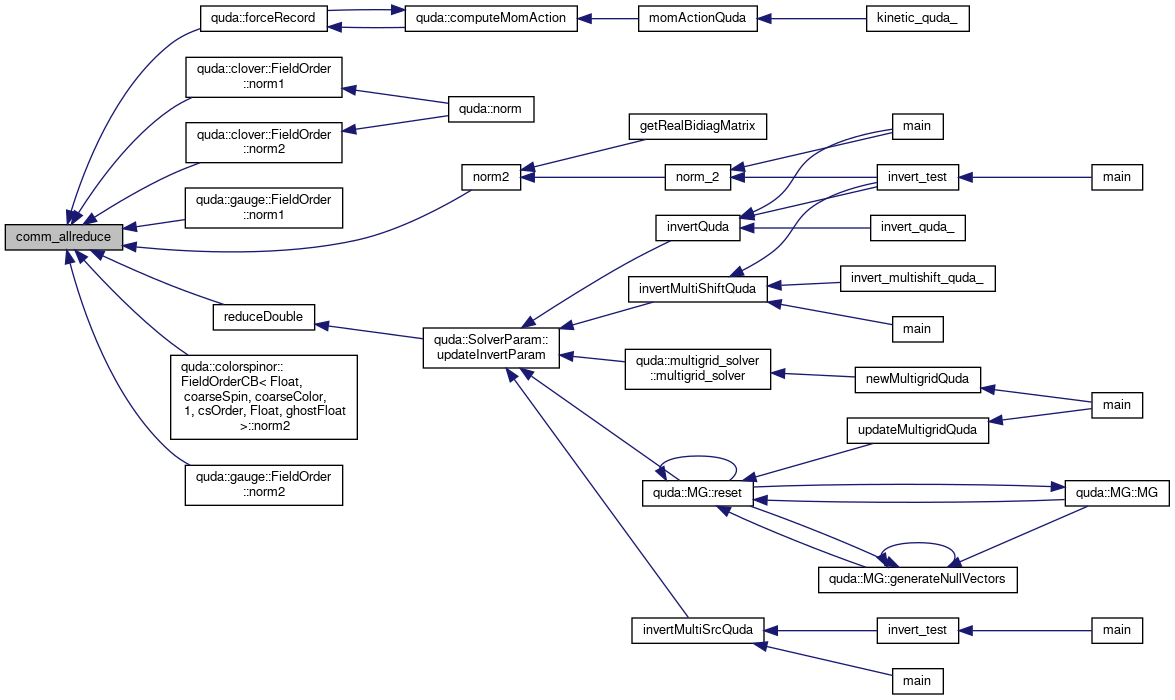

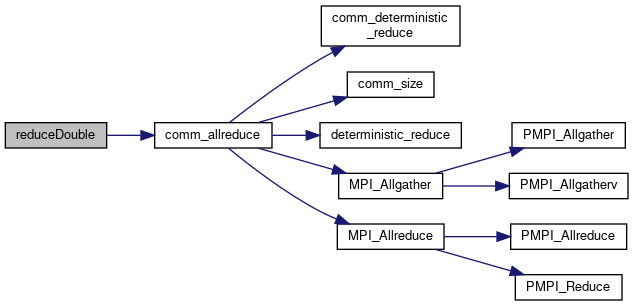

◆ comm_allreduce()

| void comm_allreduce | ( | double * | data | ) |

Definition at line 242 of file comm_mpi.cpp.

References comm_deterministic_reduce(), comm_size(), deterministic_reduce(), host_free, MPI_Allgather(), MPI_Allreduce(), MPI_CHECK, QMP_CHECK, and safe_malloc.

Referenced by quda::forceRecord(), quda::clover::FieldOrder< Float, nColor, nSpin, order >::norm1(), quda::gauge::FieldOrder< Float, nColor, nSpinCoarse, order, native_ghost, storeFloat, use_tex >::norm1(), norm2(), quda::clover::FieldOrder< Float, nColor, nSpin, order >::norm2(), quda::colorspinor::FieldOrderCB< Float, coarseSpin, coarseColor, 1, csOrder, Float, ghostFloat >::norm2(), quda::gauge::FieldOrder< Float, nColor, nSpinCoarse, order, native_ghost, storeFloat, use_tex >::norm2(), and reduceDouble().

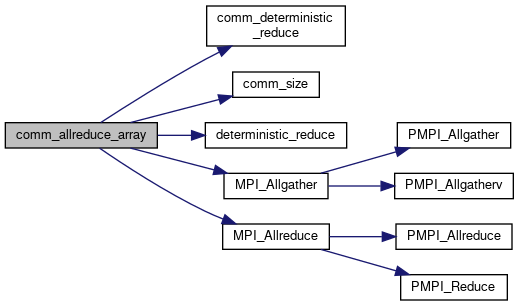

◆ comm_allreduce_array()

| void | comm_allreduce_array (double *data, size_t size) |

Definition at line 272 of file comm_mpi.cpp.

References comm_deterministic_reduce(), comm_size(), deterministic_reduce(), MPI_Allgather(), MPI_Allreduce(), MPI_CHECK, QMP_CHECK, and size.

Referenced by quda::plaquette(), and reduceDoubleArray().

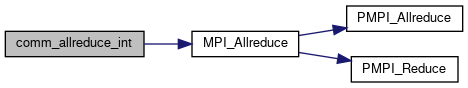

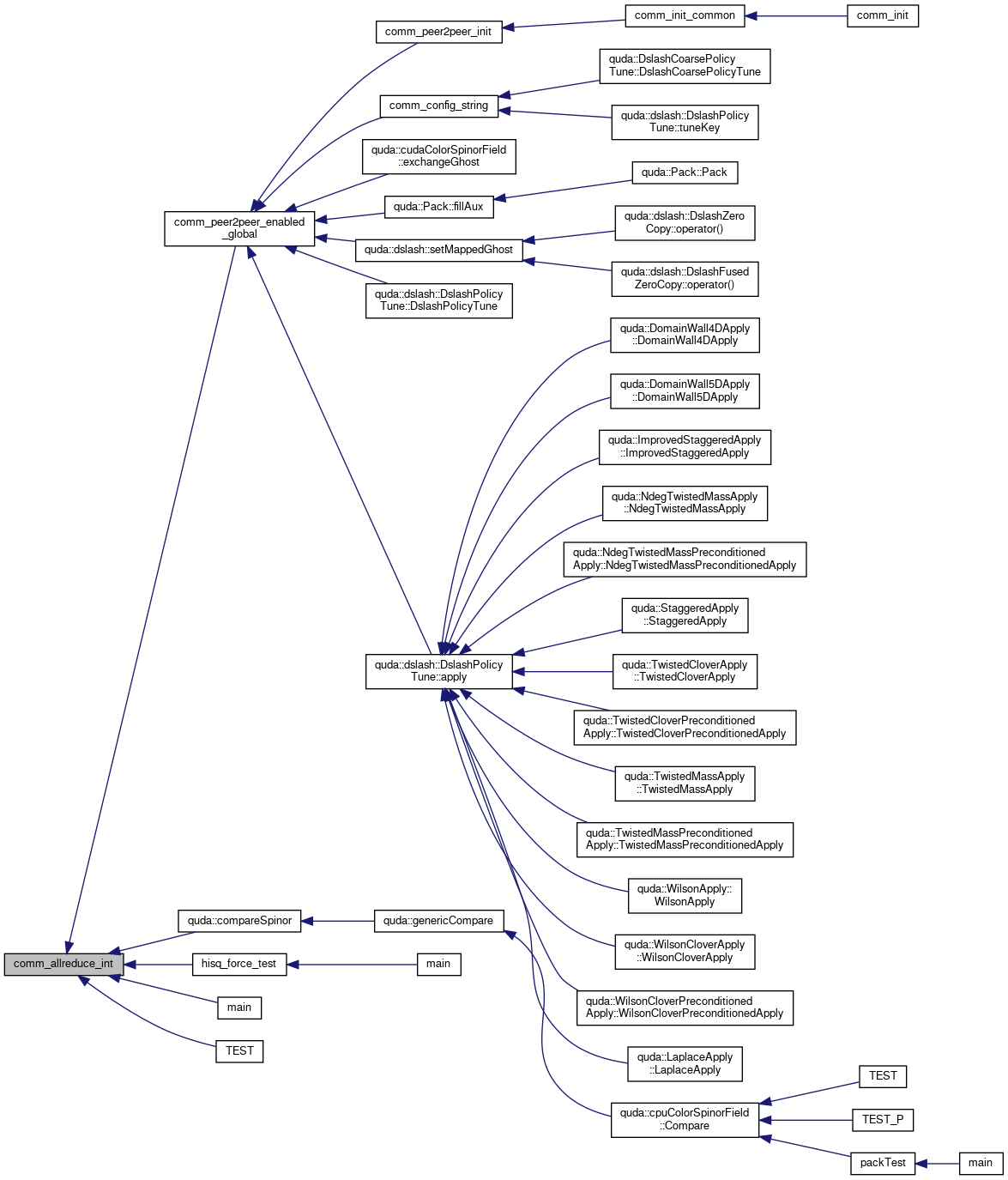

◆ comm_allreduce_int()

| void comm_allreduce_int | ( | int * | data | ) |

Definition at line 304 of file comm_mpi.cpp.

References MPI_Allreduce(), MPI_CHECK, and QMP_CHECK.

Referenced by comm_peer2peer_enabled_global(), quda::compareSpinor(), hisq_force_test(), main(), and TEST().

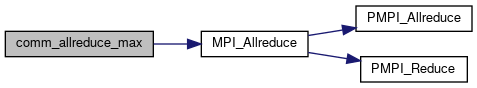

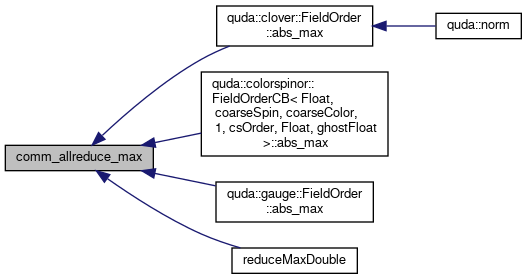

◆ comm_allreduce_max()

| void comm_allreduce_max | ( | double * | data | ) |

Definition at line 258 of file comm_mpi.cpp.

References MPI_Allreduce(), MPI_CHECK, and QMP_CHECK.

Referenced by quda::clover::FieldOrder< Float, nColor, nSpin, order >::abs_max(), quda::colorspinor::FieldOrderCB< Float, coarseSpin, coarseColor, 1, csOrder, Float, ghostFloat >::abs_max(), quda::gauge::FieldOrder< Float, nColor, nSpinCoarse, order, native_ghost, storeFloat, use_tex >::abs_max(), and reduceMaxDouble().

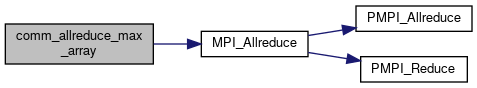

◆ comm_allreduce_max_array()

| void | comm_allreduce_max_array (double *data, size_t size) |

Definition at line 296 of file comm_mpi.cpp.

References MPI_Allreduce(), MPI_CHECK, QMP_CHECK, and size.

Referenced by quda::forceRecord().

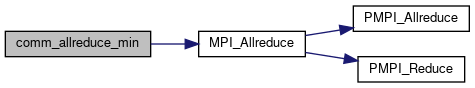

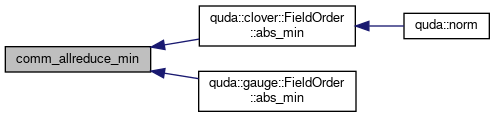

◆ comm_allreduce_min()

| void comm_allreduce_min | ( | double * | data | ) |

Definition at line 265 of file comm_mpi.cpp.

References MPI_Allreduce(), MPI_CHECK, and QMP_CHECK.

Referenced by quda::clover::FieldOrder< Float, nColor, nSpin, order >::abs_min(), and quda::gauge::FieldOrder< Float, nColor, nSpinCoarse, order, native_ghost, storeFloat, use_tex >::abs_min().

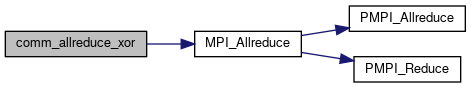

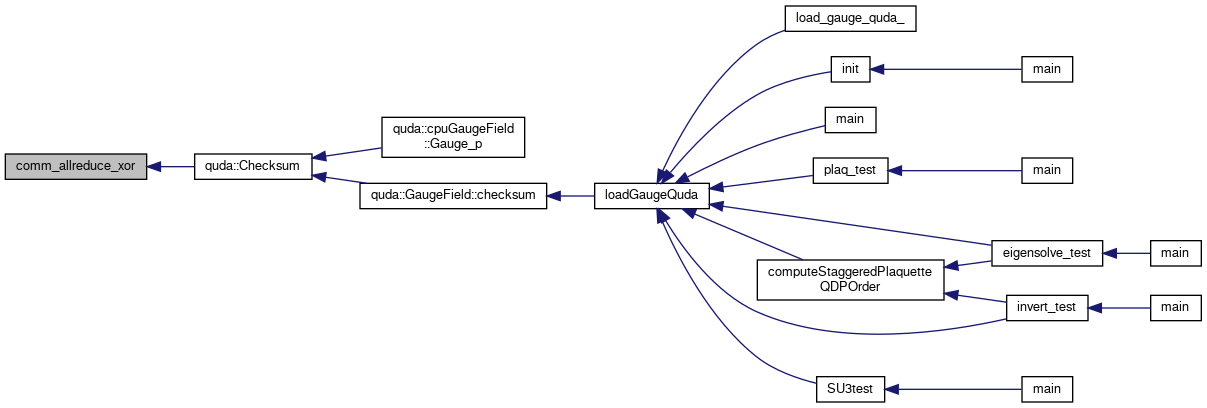

◆ comm_allreduce_xor()

| void comm_allreduce_xor | ( | uint64_t * | data | ) |

Definition at line 311 of file comm_mpi.cpp.

References errorQuda, MPI_Allreduce(), MPI_CHECK, and QMP_CHECK.

Referenced by quda::Checksum().

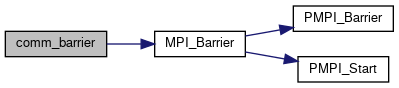

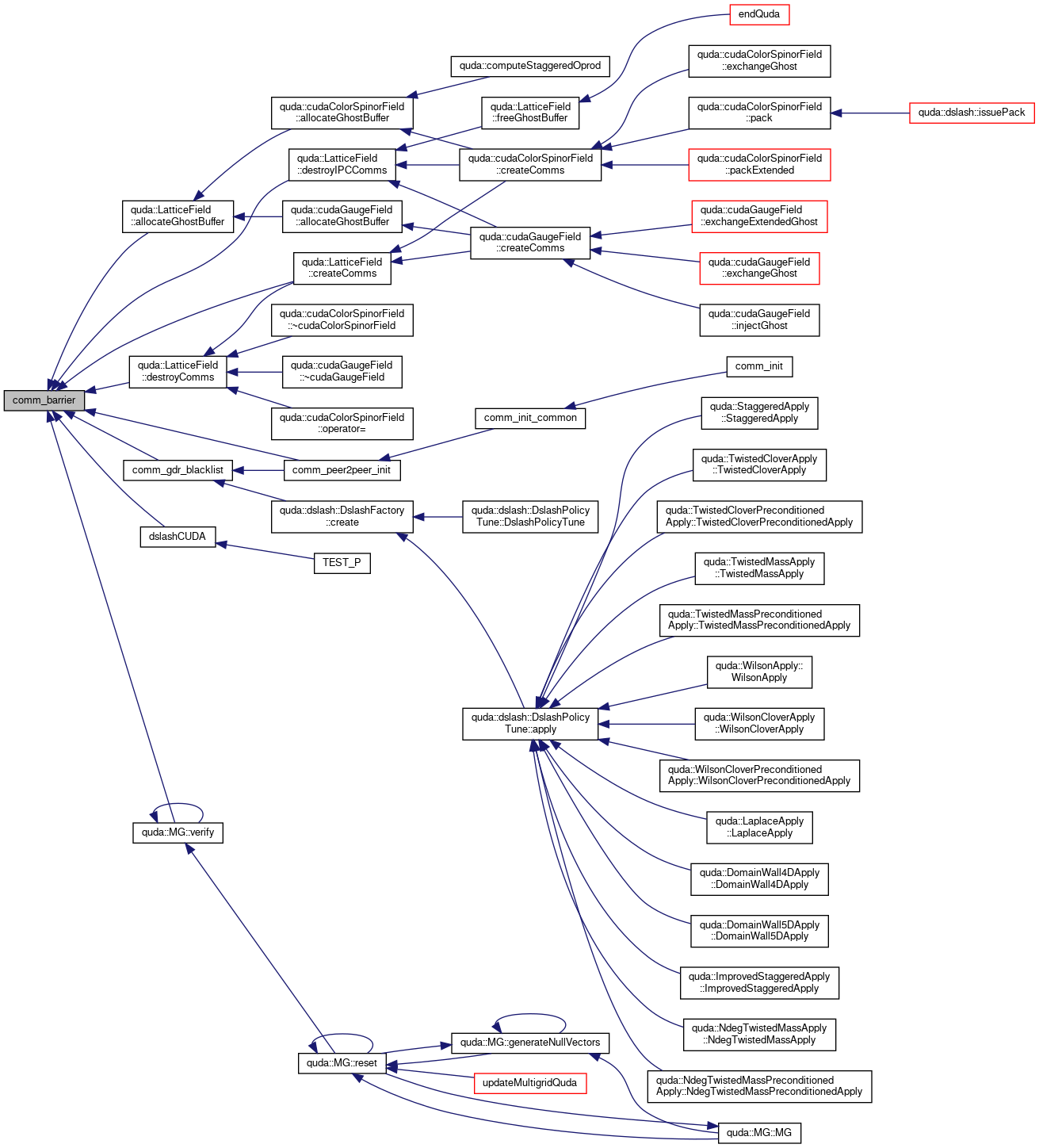

◆ comm_barrier()

| void comm_barrier | ( | void | ) |

Definition at line 326 of file comm_mpi.cpp.

References MPI_Barrier(), MPI_CHECK, and QMP_CHECK.

Referenced by quda::LatticeField::allocateGhostBuffer(), comm_gdr_blacklist(), comm_peer2peer_init(), quda::LatticeField::createComms(), quda::LatticeField::destroyComms(), quda::LatticeField::destroyIPCComms(), dslashCUDA(), and quda::MG::verify().

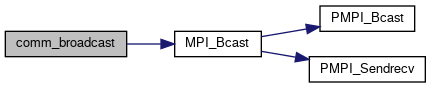

◆ comm_broadcast()

| void | comm_broadcast (void *data, size_t nbytes) |

Broadcast from rank 0.

Definition at line 321 of file comm_mpi.cpp.

References MPI_Bcast(), MPI_CHECK, and QMP_CHECK.

Referenced by quda::broadcastTuneCache(), and comm_init_common().

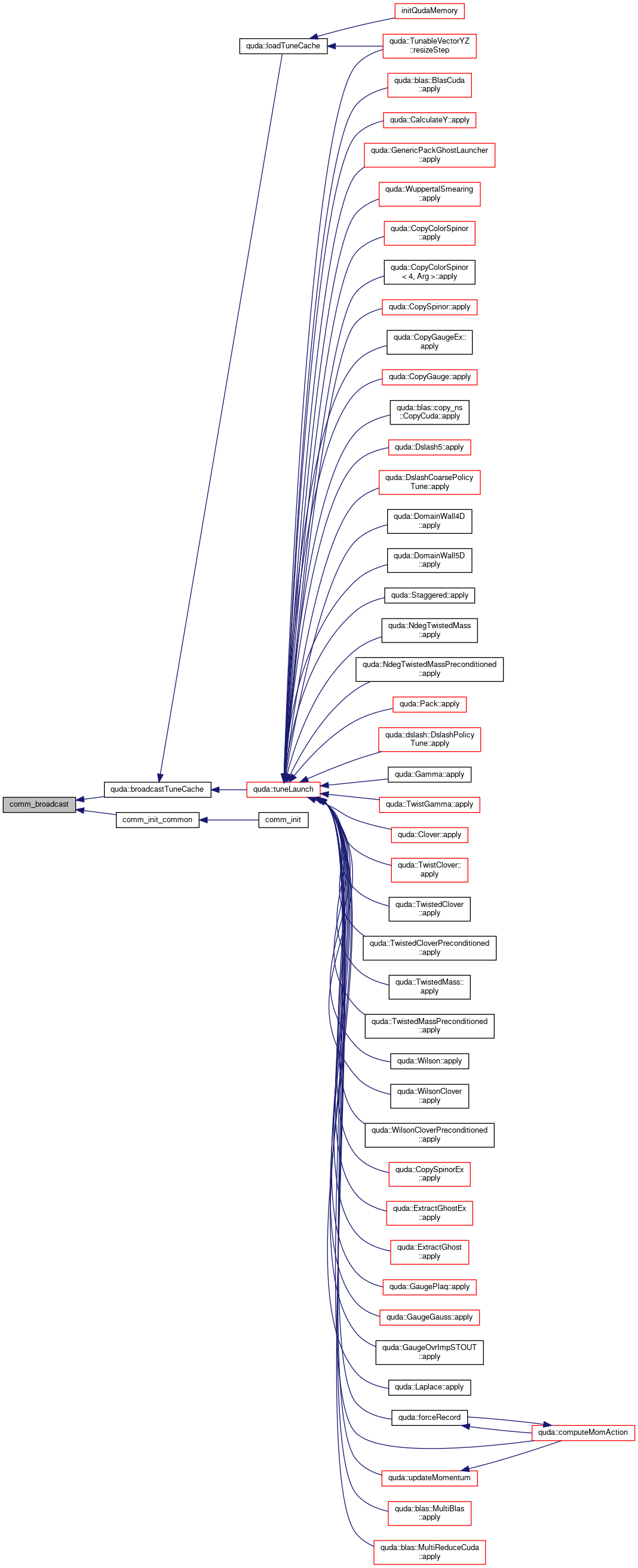

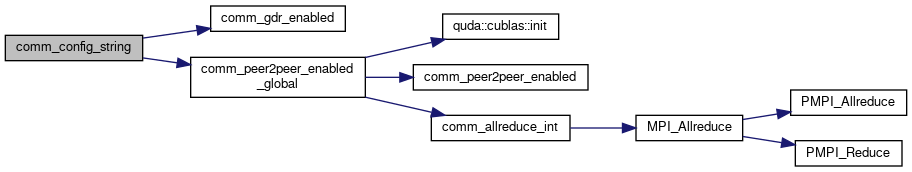

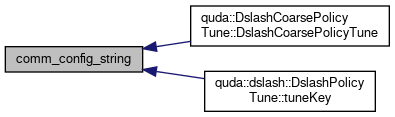

◆ comm_config_string()

| const char* comm_config_string | ( | ) |

Return a string that defines the P2P/GDR environment variable configuration (for use as a tuneKey to enable unique policies).

- Returns

- String specifying comm config

Definition at line 766 of file comm_common.cpp.

References comm_gdr_enabled(), and comm_peer2peer_enabled_global().

Referenced by quda::DslashCoarsePolicyTune::DslashCoarsePolicyTune(), and quda::dslash::DslashPolicyTune< Dslash >::tuneKey().

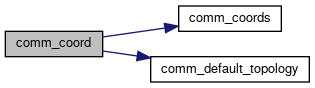

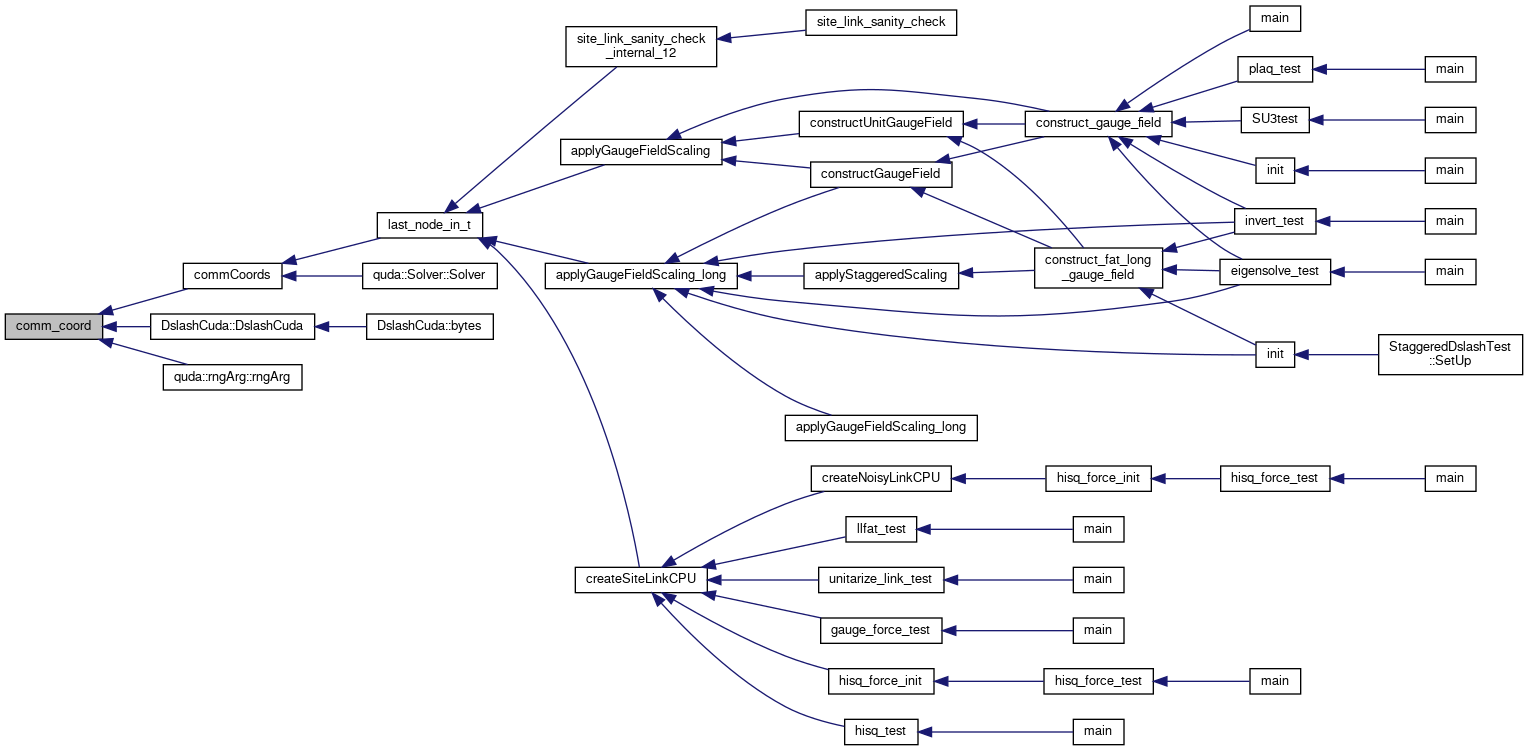

◆ comm_coord()

| int comm_coord | ( | int | dim | ) |

Return the coordinate of this process in the dimension dim.

- Parameters

- dim: Dimension which we are querying

- Returns

- Coordinate of this process

Definition at line 431 of file comm_common.cpp.

References comm_coords(), and comm_default_topology().

Referenced by commCoords(), DslashCuda::DslashCuda(), and quda::rngArg::rngArg().

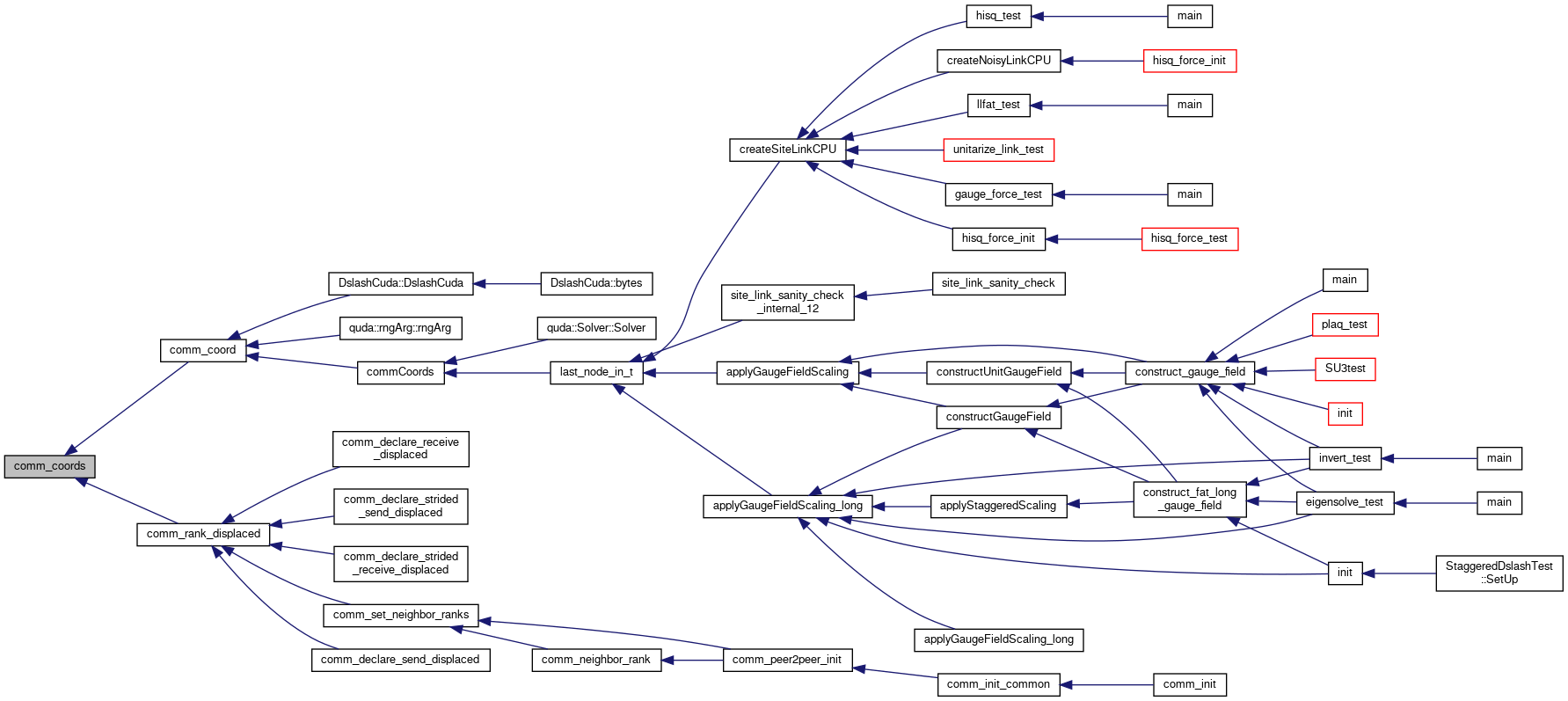

◆ comm_coords()

| const int* comm_coords | ( | const Topology * | topo | ) |

Definition at line 334 of file comm_common.cpp.

References Topology_s::my_coords.

Referenced by comm_coord(), and comm_rank_displaced().

◆ comm_coords_from_rank()

| const int * | comm_coords_from_rank (const Topology *topo, int rank) |

Definition at line 340 of file comm_common.cpp.

References Topology_s::coords, and rank.

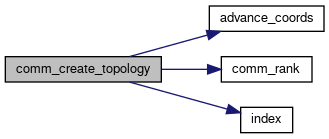

◆ comm_create_topology()

| Topology * | comm_create_topology (int ndim, const int *dims, QudaCommsMap rank_from_coords, void *map_data) |

Definition at line 94 of file comm_common.cpp.

References advance_coords(), comm_rank(), Topology_s::coords, Topology_s::dims, errorQuda, index(), Topology_s::my_coords, Topology_s::my_rank, Topology_s::ndim, QUDA_MAX_DIM, rand_seed, rank, Topology_s::ranks, and safe_malloc.

Referenced by comm_init_common().

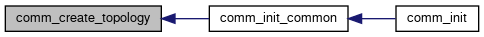

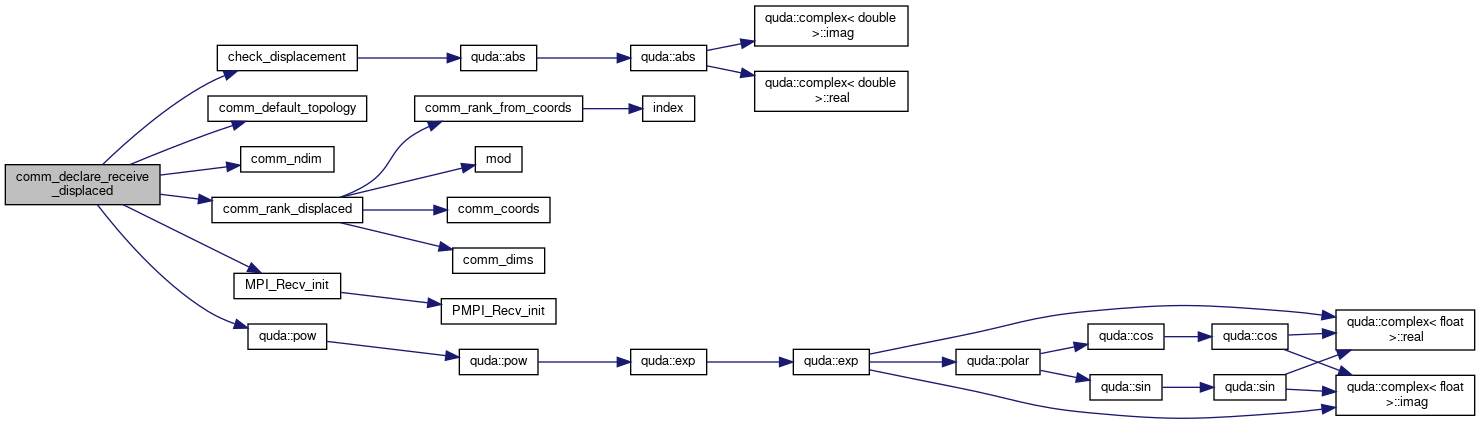

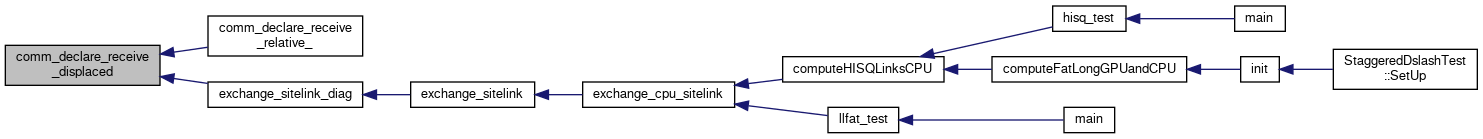

◆ comm_declare_receive_displaced()

| MsgHandle * | comm_declare_receive_displaced (void *buffer, const int displacement[], size_t nbytes) |

Create a persistent message handler for a displaced receive.

- Parameters

- buffer: Buffer into which message will be received

- displacement: Array of offsets specifying the relative node from which we are receiving

- nbytes: Size of message in bytes

Declare a message handle for receiving from a node displaced in (x,y,z,t) according to "displacement".

Definition at line 130 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, errorQuda, MsgHandle_s::handle, max_displacement, MsgHandle_s::mem, MPI_CHECK, MPI_Recv_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.

Referenced by comm_declare_receive_relative_(), and exchange_sitelink_diag().

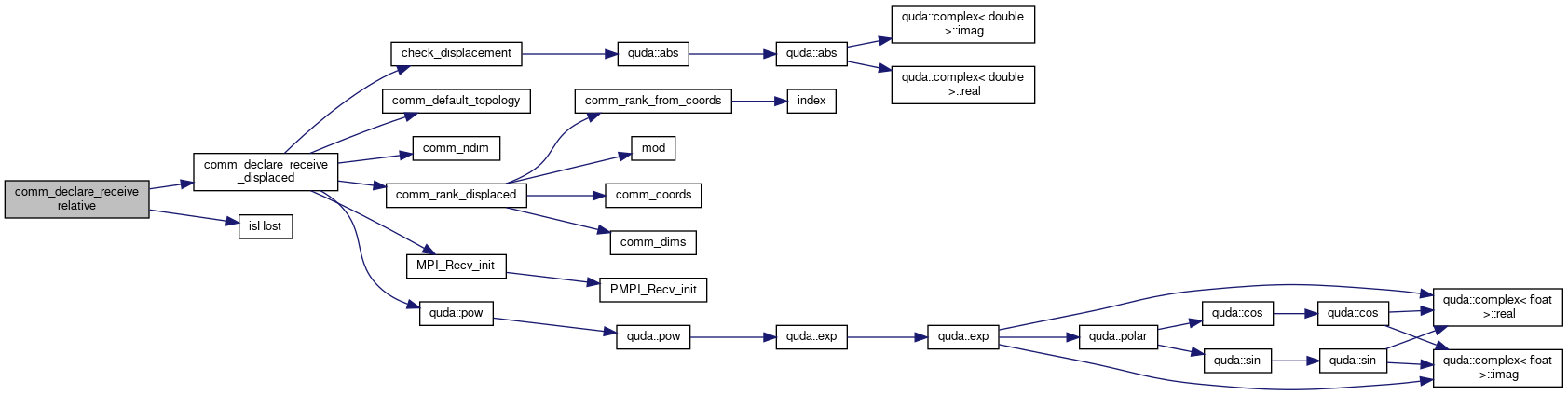

◆ comm_declare_receive_relative_()

| MsgHandle * | comm_declare_receive_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t nbytes) |

Create a persistent message handler for a relative receive. Do not call this function directly; use the helper macro (without the trailing underscore) instead.

- Parameters

- buffer: Buffer into which message will be received

- dim: Dimension from which message will be received

- dir: Direction from which message will be received (0 - backwards, 1 - forwards)

- nbytes: Size of message in bytes

Receive from the "dir" direction in the "dim" dimension.

Definition at line 499 of file comm_common.cpp.

References checkCudaError, comm_declare_receive_displaced(), errorQuda, isHost(), printfQuda, and QUDA_MAX_DIM.

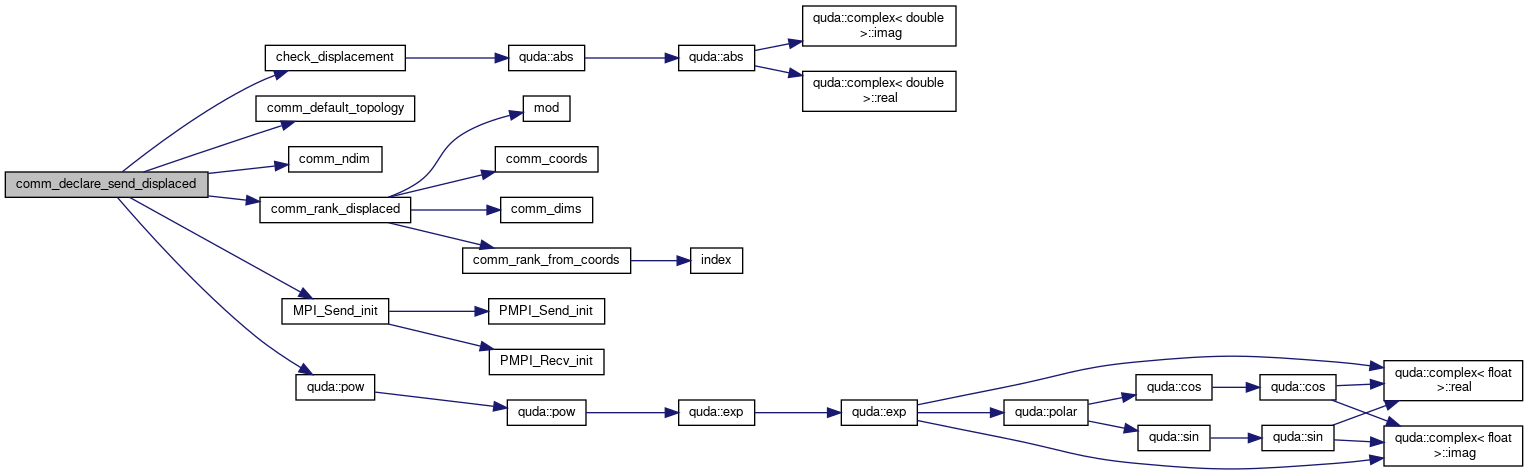

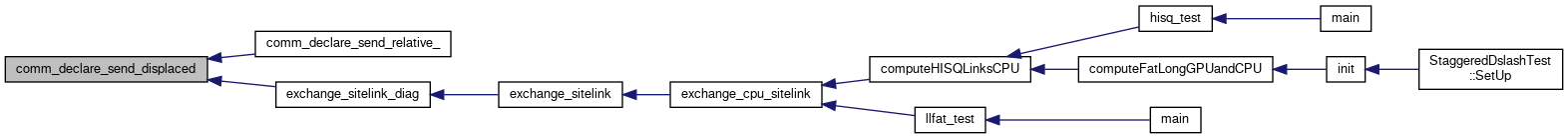

◆ comm_declare_send_displaced()

| MsgHandle * | comm_declare_send_displaced (void *buffer, const int displacement[], size_t nbytes) |

Create a persistent message handler for a displaced send.

- Parameters

- buffer: Buffer from which message will be sent

- displacement: Array of offsets specifying the relative node to which we are sending

- nbytes: Size of message in bytes

Declare a message handle for sending to a node displaced in (x,y,z,t) according to "displacement".

Definition at line 107 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, errorQuda, MsgHandle_s::handle, max_displacement, MsgHandle_s::mem, MPI_CHECK, MPI_Send_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.

Referenced by comm_declare_send_relative_(), and exchange_sitelink_diag().

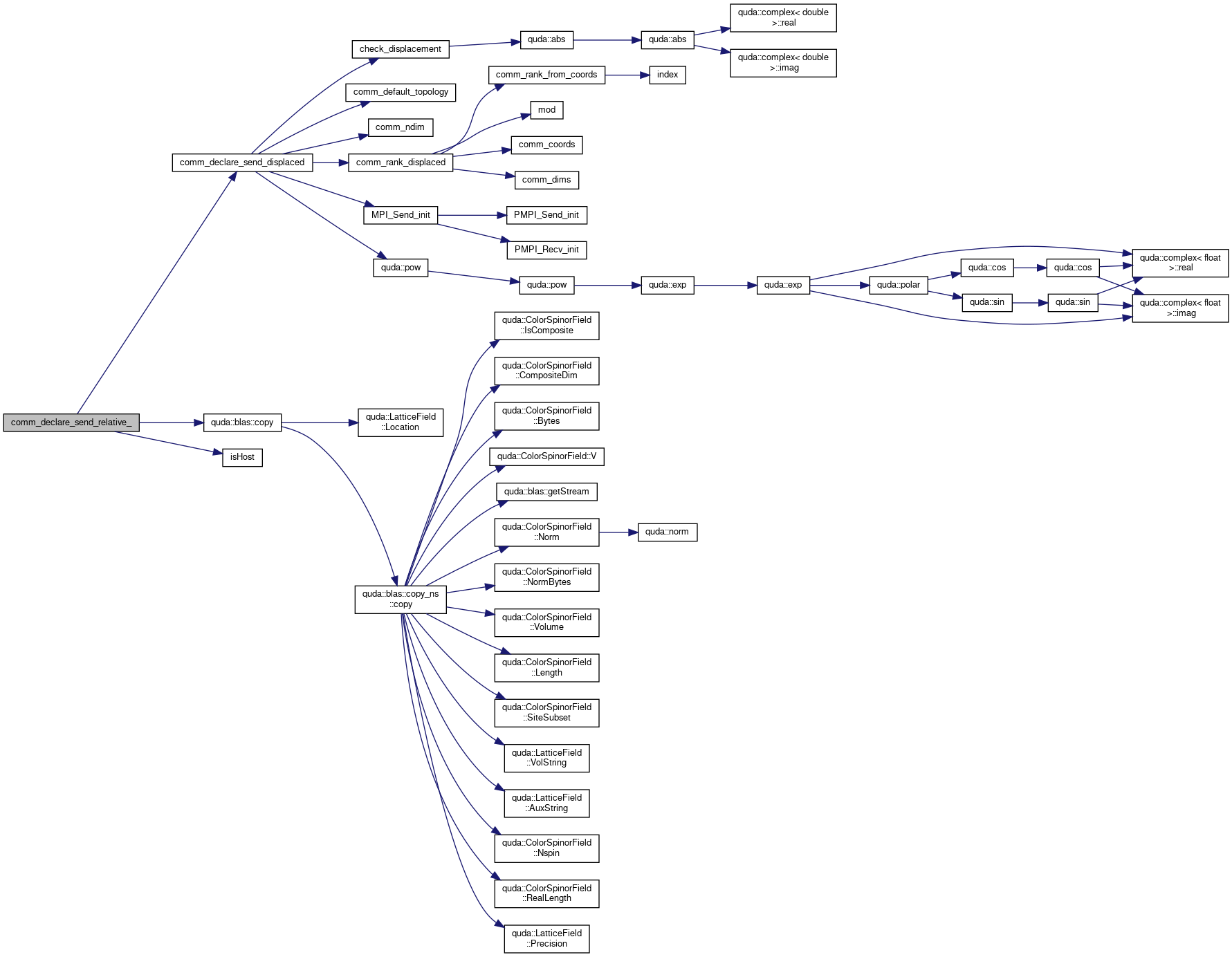

◆ comm_declare_send_relative_()

| MsgHandle * | comm_declare_send_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t nbytes) |

Create a persistent message handler for a relative send. Do not call this function directly; use the helper macro (without the trailing underscore) instead.

- Parameters

- buffer: Buffer from which message will be sent

- dim: Dimension in which message will be sent

- dir: Direction in which message will be sent (0 - backwards, 1 - forwards)

- nbytes: Size of message in bytes

Send to the "dir" direction in the "dim" dimension.

Definition at line 462 of file comm_common.cpp.

References checkCudaError, comm_declare_send_displaced(), quda::blas::copy(), device_free, device_malloc, errorQuda, host_free, isHost(), printfQuda, QUDA_MAX_DIM, safe_malloc, and tmp.
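The reference list shows that the relative send is built on comm_declare_send_displaced(), so a (dim, dir) pair has to be turned into a displacement array at some point. The sketch below shows one plausible translation, assuming a four-dimensional grid, a unit step to the nearest neighbor, and periodic wraparound. `kMaxDim`, `relative_to_displacement`, and `displaced_coord` are hypothetical names, and this is not taken from the QUDA implementation.

```cpp
#include <array>
#include <cassert>

constexpr int kMaxDim = 4; // assumed number of dimensions (x, y, z, t)

// Hypothetical helper: build a displacement array for a nearest-neighbor
// transfer in dimension `dim`, with dir 0 = backwards and dir 1 = forwards.
std::array<int, kMaxDim> relative_to_displacement(int dim, int dir)
{
  assert(dim >= 0 && dim < kMaxDim);
  assert(dir == 0 || dir == 1);
  std::array<int, kMaxDim> disp = {0, 0, 0, 0};
  disp[dim] = (dir == 0) ? -1 : +1;
  return disp;
}

// Hypothetical helper: coordinate of the displaced neighbor on a periodic
// process grid of extent `extent` in this dimension.
int displaced_coord(int coord, int disp, int extent)
{
  assert(extent > 0);
  // Add extent after the first modulo so negative displacements wrap correctly.
  return ((coord + disp) % extent + extent) % extent;
}
```

With a grid extent of 4, a backwards step from coordinate 0 lands on coordinate 3, which is the wraparound a periodic process grid requires.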

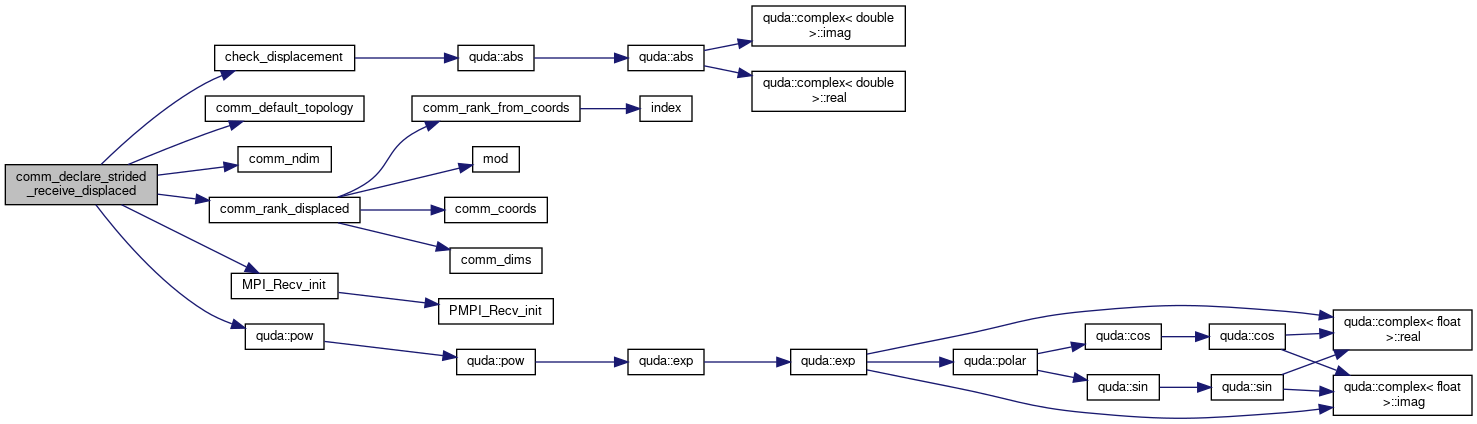

◆ comm_declare_strided_receive_displaced()

| MsgHandle * | comm_declare_strided_receive_displaced (void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride) |

Create a persistent strided message handler for a displaced receive.

- Parameters

- buffer: Buffer into which message will be received

- displacement: Array of offsets specifying the relative node from which we are receiving

- blksize: Size of block in bytes

- nblocks: Number of blocks

- stride: Stride between blocks in bytes

Declare a message handle for strided receiving from a node displaced in (x,y,z,t) according to "displacement".

Definition at line 182 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, MsgHandle_s::datatype, errorQuda, MsgHandle_s::handle, max_displacement, MsgHandle_s::mem, MPI_CHECK, MPI_Recv_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.

Referenced by comm_declare_strided_receive_relative_().

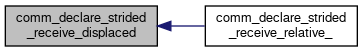

◆ comm_declare_strided_receive_relative_()

| MsgHandle * | comm_declare_strided_receive_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t blksize, int nblocks, size_t stride) |

Create a persistent strided message handler for a relative receive. Do not call this function directly; use the helper macro (without the trailing underscore) instead.

- Parameters

- buffer: Buffer into which message will be received

- dim: Dimension from which message will be received

- dir: Direction from which message will be received (0 - backwards, 1 - forwards)

- blksize: Size of block in bytes

- nblocks: Number of blocks

- stride: Stride between blocks in bytes

Strided receive from the "dir" direction in the "dim" dimension.

Definition at line 573 of file comm_common.cpp.

References checkCudaError, comm_declare_strided_receive_displaced(), errorQuda, isHost(), printfQuda, and QUDA_MAX_DIM.

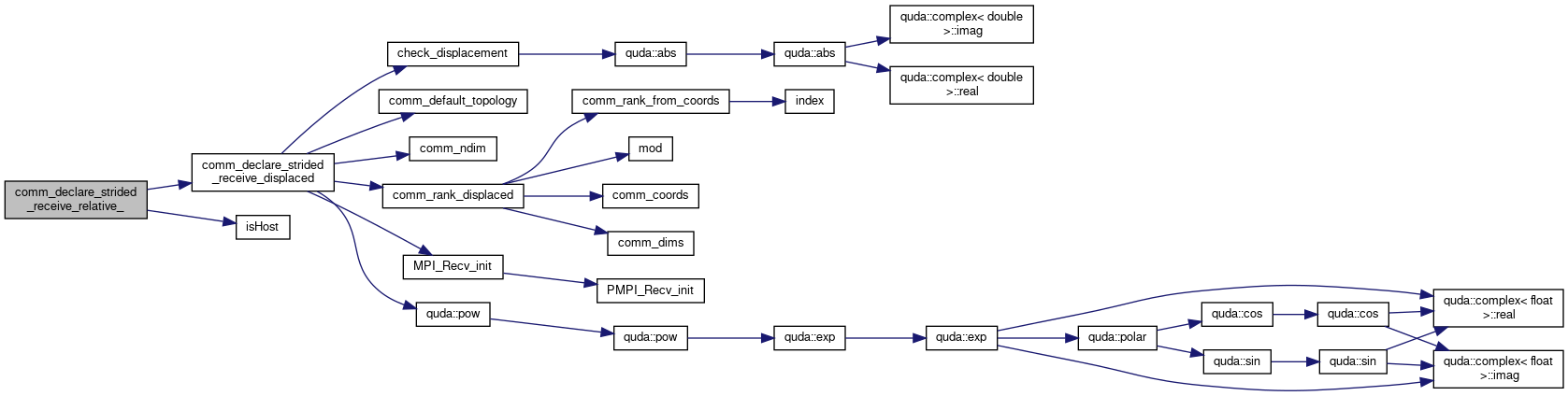

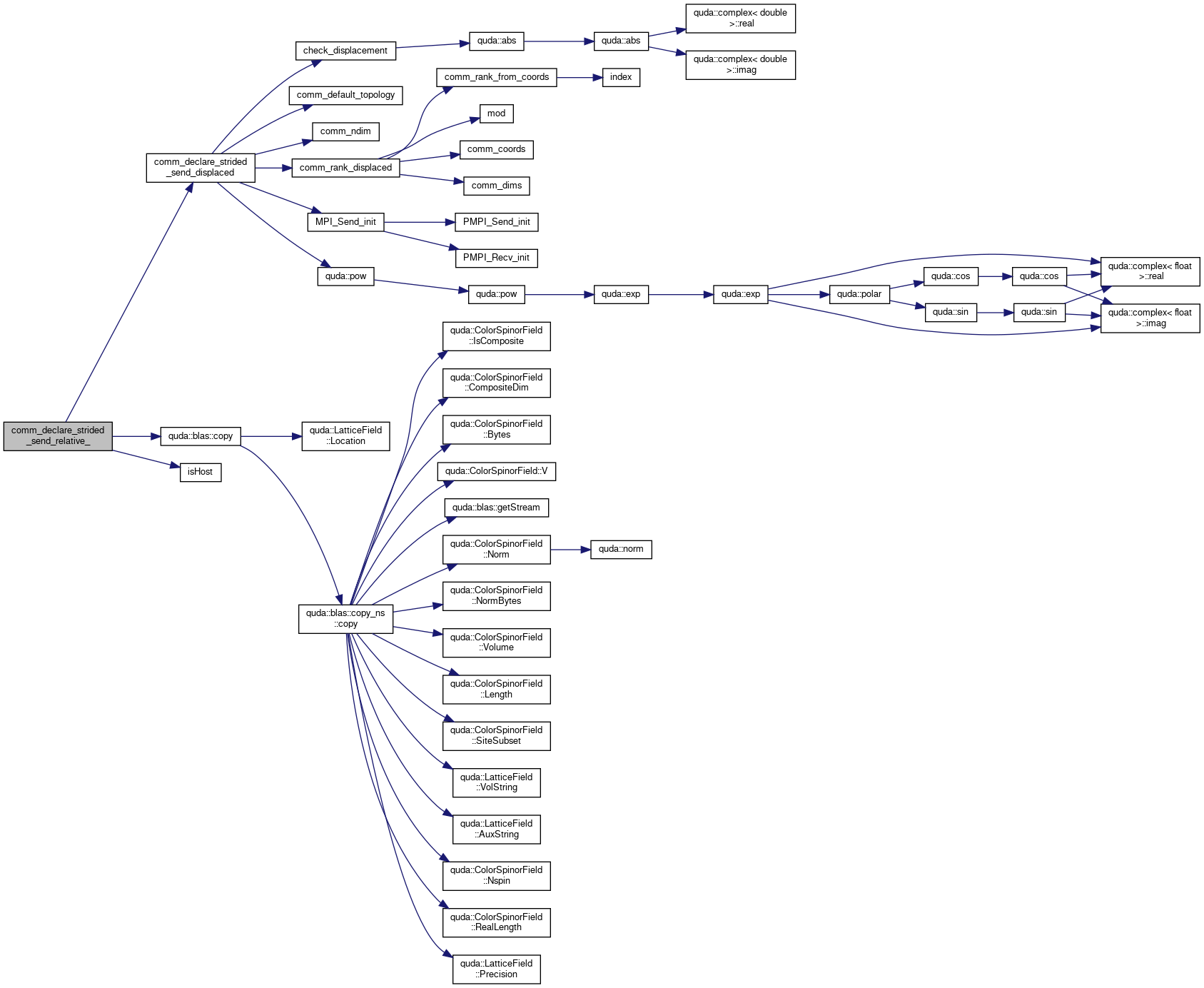

◆ comm_declare_strided_send_displaced()

| MsgHandle * | comm_declare_strided_send_displaced (void *buffer, const int displacement[], size_t blksize, int nblocks, size_t stride) |

Create a persistent strided message handler for a displaced send.

- Parameters

- buffer: Buffer from which message will be sent

- displacement: Array of offsets specifying the relative node to which we are sending

- blksize: Size of block in bytes

- nblocks: Number of blocks

- stride: Stride between blocks in bytes

Declare a message handle for strided sending to a node displaced in (x,y,z,t) according to "displacement".

Definition at line 153 of file comm_mpi.cpp.

References check_displacement(), comm_default_topology(), comm_ndim(), comm_rank_displaced(), MsgHandle_s::custom, MsgHandle_s::datatype, errorQuda, MsgHandle_s::handle, max_displacement, MsgHandle_s::mem, MPI_CHECK, MPI_Send_init(), ndim, quda::pow(), rank, MsgHandle_s::request, and safe_malloc.

Referenced by comm_declare_strided_send_relative_().
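The three strided parameters describe a regular pattern inside the buffer: `nblocks` blocks of `blksize` bytes whose starting addresses are `stride` bytes apart. The sketch below makes that layout concrete by gathering the selected bytes into a contiguous vector. `pack_strided` is a hypothetical helper for illustration only; it assumes non-overlapping blocks, and it is not how the library moves the data (a backend may describe the layout to the communication library instead of copying).

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical helper: gather `nblocks` blocks of `blksize` bytes, whose
// starting addresses are `stride` bytes apart, into one contiguous vector.
std::vector<char> pack_strided(const void *buffer, std::size_t blksize,
                               int nblocks, std::size_t stride)
{
  assert(buffer != nullptr && nblocks >= 0);
  assert(blksize > 0);
  assert(stride >= blksize); // assumed: blocks do not overlap
  const char *src = static_cast<const char *>(buffer);
  std::vector<char> packed(blksize * static_cast<std::size_t>(nblocks));
  for (int b = 0; b < nblocks; b++) {
    // Block b starts b * stride bytes into the source buffer.
    std::memcpy(packed.data() + b * blksize, src + b * stride, blksize);
  }
  return packed;
}
```

For a 12-byte buffer with `blksize = 2`, `nblocks = 3`, and `stride = 4`, the message covers bytes 0-1, 4-5, and 8-9, and the bytes in between are skipped.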

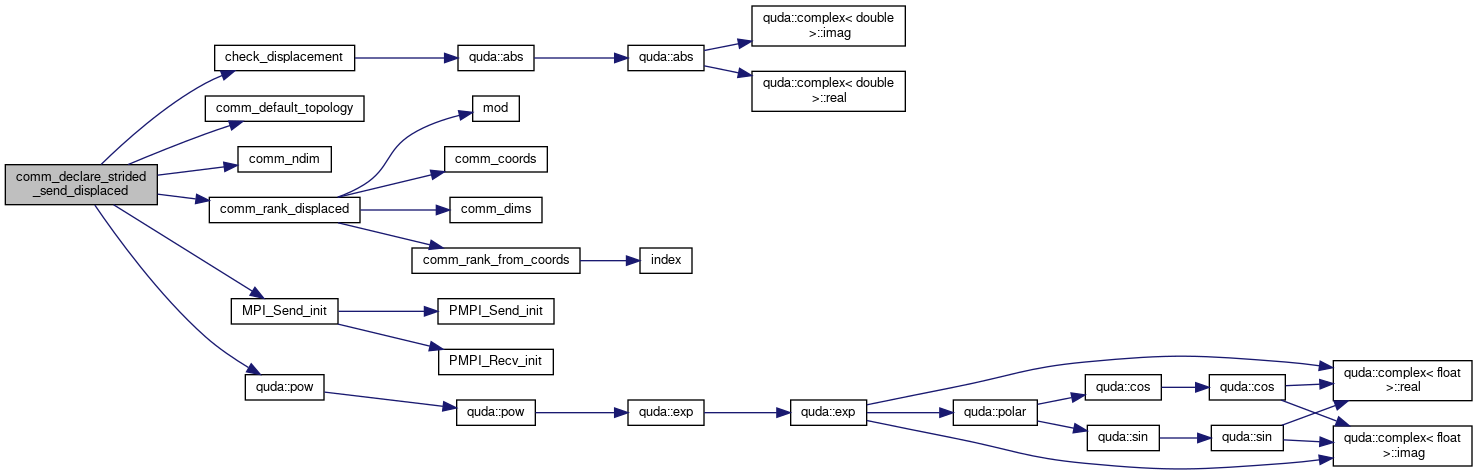

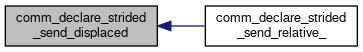

◆ comm_declare_strided_send_relative_()

| MsgHandle * | comm_declare_strided_send_relative_ (const char *func, const char *file, int line, void *buffer, int dim, int dir, size_t blksize, int nblocks, size_t stride) |

Create a persistent strided message handler for a relative send. Do not call this function directly; use the helper macro (without the trailing underscore) instead.

- Parameters

- buffer: Buffer from which message will be sent

- dim: Dimension in which message will be sent

- dir: Direction in which message will be sent (0 - backwards, 1 - forwards)

- blksize: Size of block in bytes

- nblocks: Number of blocks

- stride: Stride between blocks in bytes

Strided send to the "dir" direction in the "dim" dimension.

Definition at line 532 of file comm_common.cpp.

References checkCudaError, comm_declare_strided_send_displaced(), quda::blas::copy(), device_free, device_malloc, errorQuda, host_free, isHost(), printfQuda, QUDA_MAX_DIM, safe_malloc, and tmp.

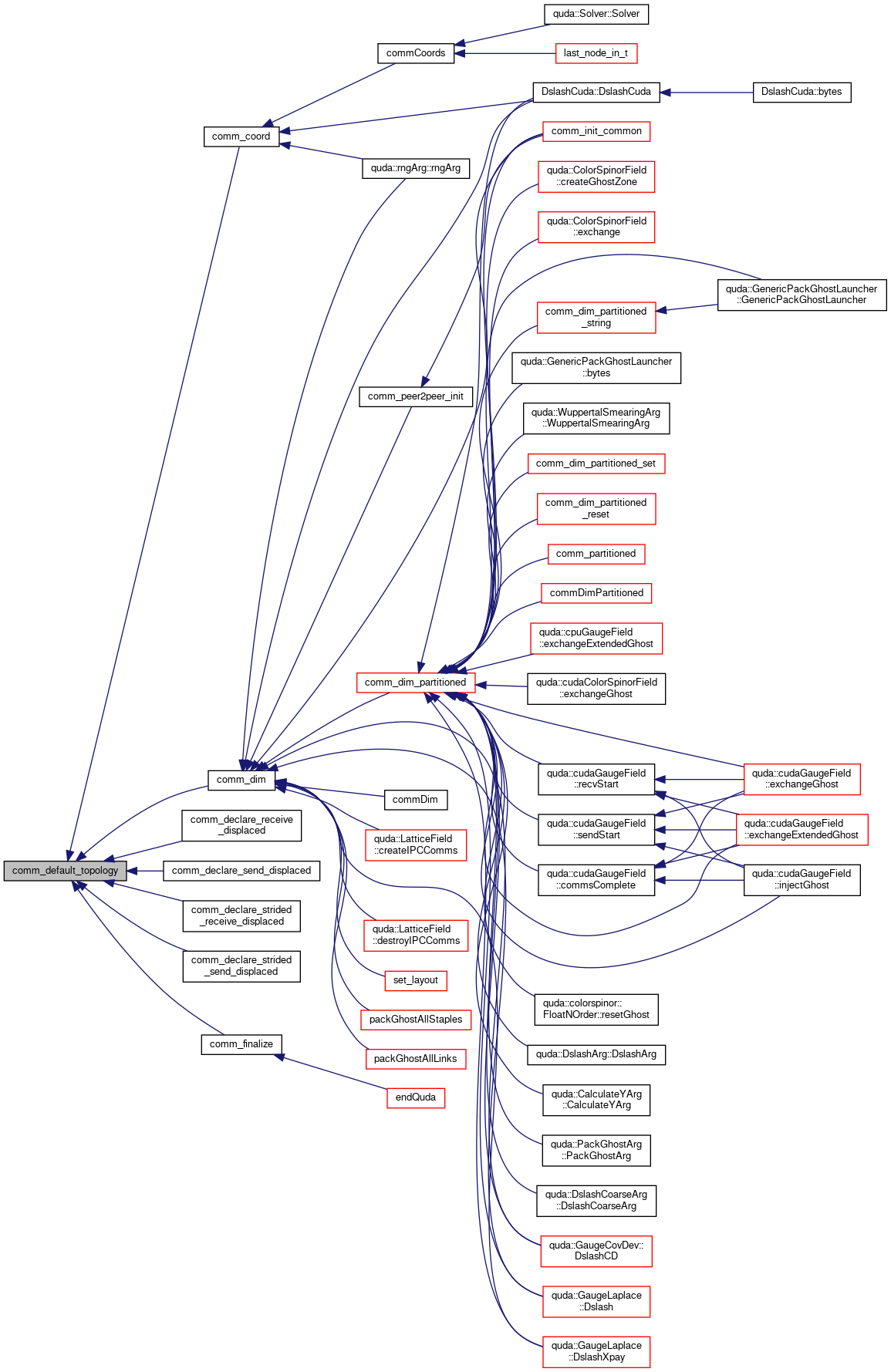

◆ comm_default_topology()

| Topology* comm_default_topology | ( | void | ) |

Definition at line 381 of file comm_common.cpp.

References default_topo, and errorQuda.

Referenced by comm_coord(), comm_declare_receive_displaced(), comm_declare_send_displaced(), comm_declare_strided_receive_displaced(), comm_declare_strided_send_displaced(), comm_dim(), and comm_finalize().

◆ comm_destroy_topology()

| void comm_destroy_topology | ( | Topology * | topo | ) |

Definition at line 137 of file comm_common.cpp.

References Topology_s::coords, host_free, and Topology_s::ranks.

Referenced by comm_finalize().

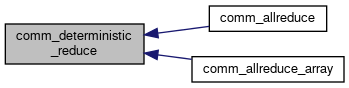

◆ comm_deterministic_reduce()

| bool comm_deterministic_reduce | ( | ) |

- Returns

- Whether we are doing deterministic multi-process reductions or not

Definition at line 799 of file comm_common.cpp.

References deterministic_reduce.

Referenced by comm_allreduce(), and comm_allreduce_array().
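Floating-point addition is not associative, so a sum over per-process contributions can change with the order in which they are combined. The reference lists for comm_allreduce() and comm_allreduce_array() include MPI_Allgather() and deterministic_reduce(), which suggests that the deterministic path gathers every contribution and then adds them in a fixed order. The sketch below illustrates that idea under the assumption that the fixed order is obtained by sorting. `ordered_sum` is a hypothetical name and this is not the library's code.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical sketch of an order-independent sum: sorting the gathered
// per-process values fixes the sequence of additions, so the result does
// not depend on how the contributions were originally arranged.
double ordered_sum(std::vector<double> values)
{
  assert(!values.empty());
  std::sort(values.begin(), values.end());
  double sum = 0.0;
  for (double v : values) sum += v;
  return sum;
}
```

Fixing the order makes the result reproducible from run to run; it does not by itself make the sum more accurate.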

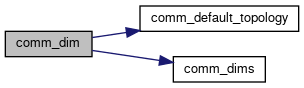

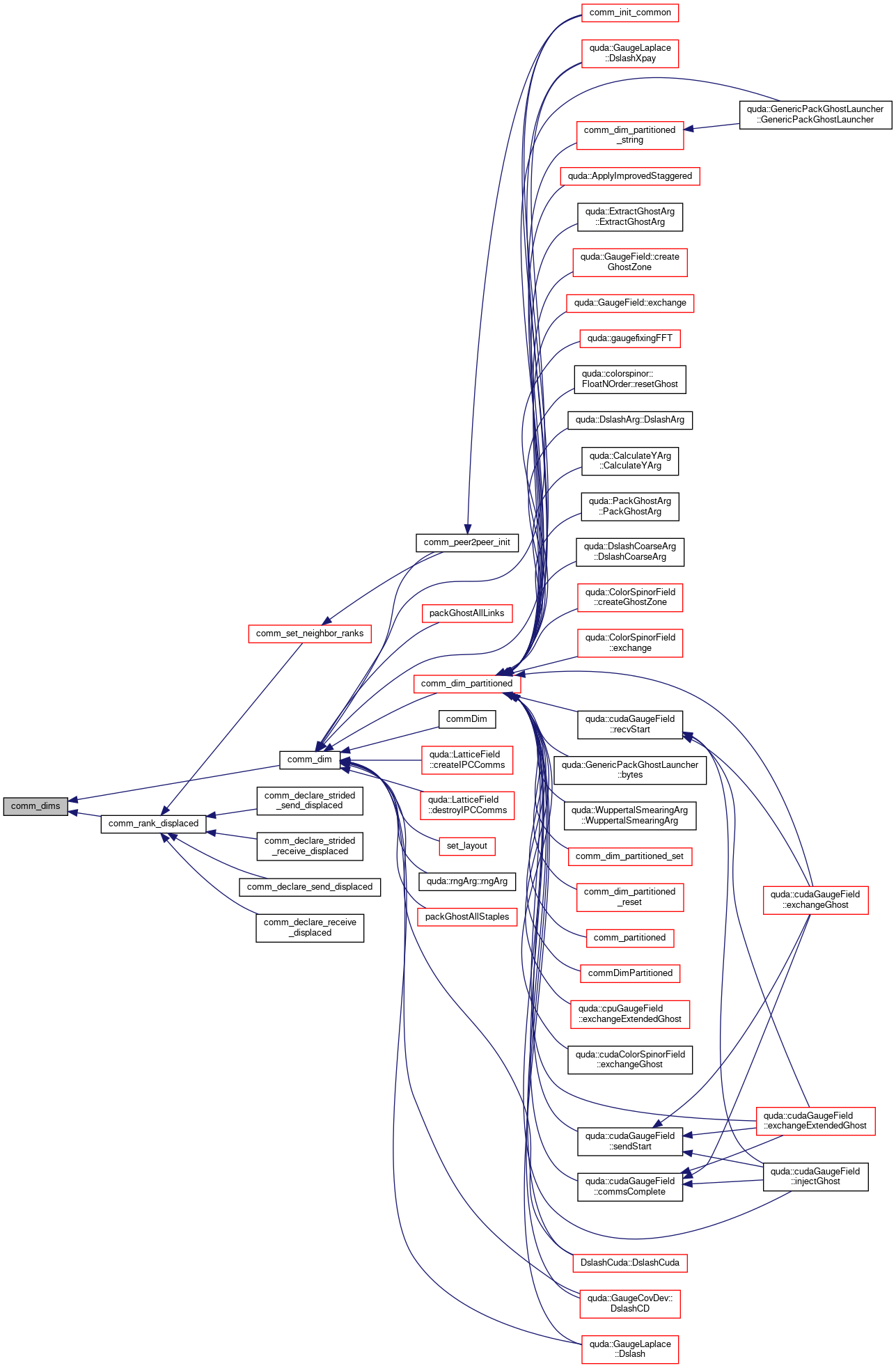

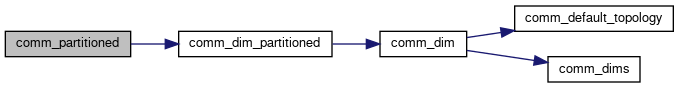

◆ comm_dim()

| int comm_dim | ( | int | dim | ) |

Return the number of processes in the dimension dim.

- Parameters

- dim: Dimension which we are querying

- Returns

- Length of process dimensions

Definition at line 424 of file comm_common.cpp.

References comm_default_topology(), and comm_dims().

Referenced by comm_dim_partitioned(), comm_init_common(), comm_peer2peer_init(), commDim(), quda::LatticeField::createIPCComms(), quda::LatticeField::destroyIPCComms(), quda::GaugeLaplace::Dslash(), quda::GaugeCovDev::DslashCD(), DslashCuda::DslashCuda(), quda::GaugeLaplace::DslashXpay(), packGhostAllLinks(), packGhostAllStaples(), quda::rngArg::rngArg(), and set_layout().

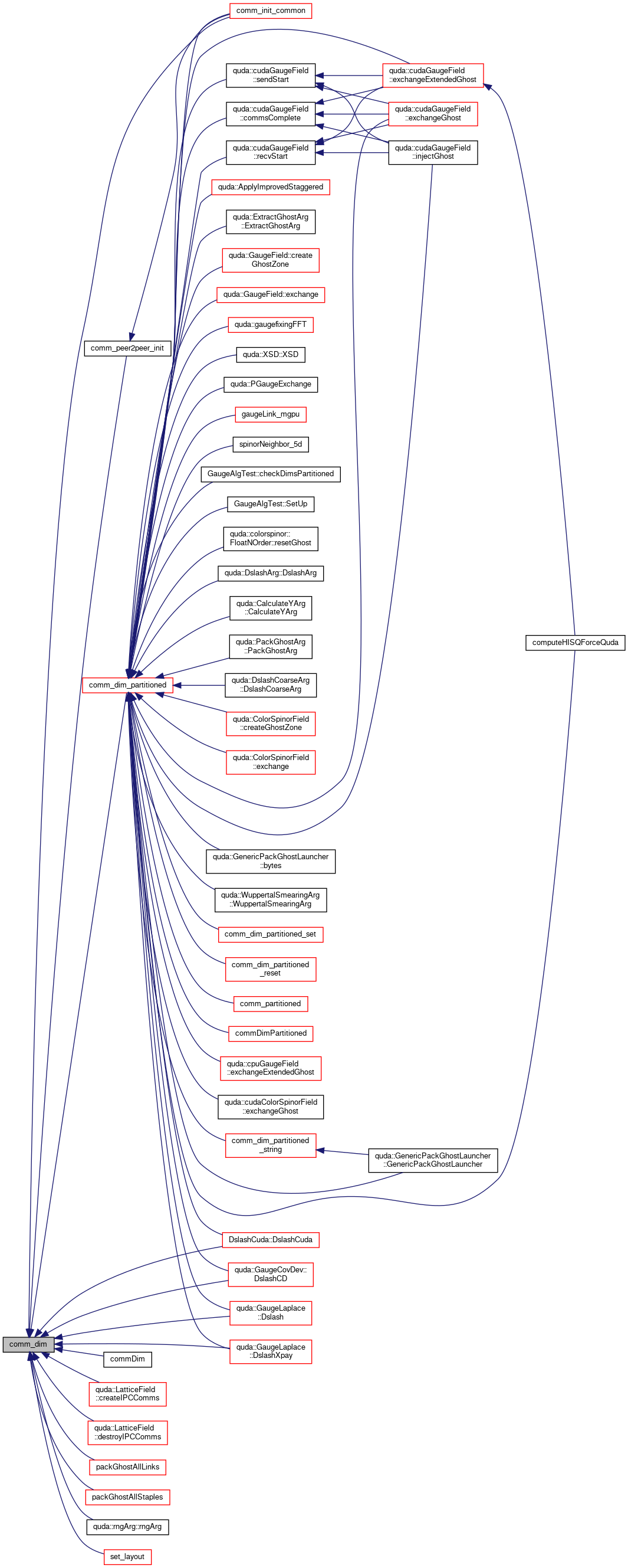

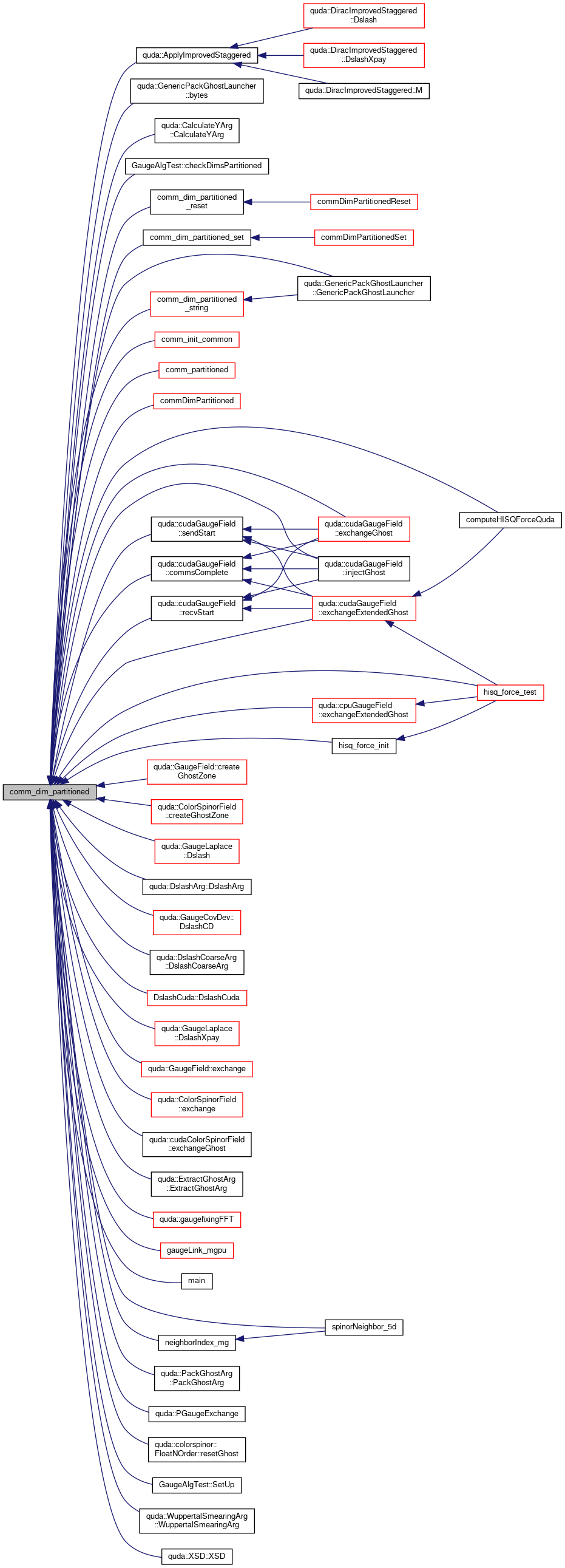

◆ comm_dim_partitioned()

| int comm_dim_partitioned | ( | int | dim | ) |

Definition at line 635 of file comm_common.cpp.

References comm_dim(), and manual_set_partition.

Referenced by quda::ApplyImprovedStaggered(), quda::GenericPackGhostLauncher< Float, block_float, Ns, Ms, Nc, Mc, Arg >::bytes(), quda::CalculateYArg< Float, fineSpin, coarseSpin, fineColor, coarseColor, coarseGauge, coarseGaugeAtomic, fineGauge, fineSpinor, fineSpinorTmp, fineSpinorV, fineClover >::CalculateYArg(), GaugeAlgTest::checkDimsPartitioned(), comm_dim_partitioned_reset(), comm_dim_partitioned_set(), comm_dim_partitioned_string(), comm_init_common(), comm_partitioned(), commDimPartitioned(), quda::cudaGaugeField::commsComplete(), computeHISQForceQuda(), quda::GaugeField::createGhostZone(), quda::ColorSpinorField::createGhostZone(), quda::GaugeLaplace::Dslash(), quda::DslashArg< Float >::DslashArg(), quda::GaugeCovDev::DslashCD(), quda::DslashCoarseArg< Float, yFloat, ghostFloat, coarseSpin, coarseColor, csOrder, gOrder >::DslashCoarseArg(), DslashCuda::DslashCuda(), quda::GaugeLaplace::DslashXpay(), quda::GaugeField::exchange(), quda::ColorSpinorField::exchange(), quda::cudaGaugeField::exchangeExtendedGhost(), quda::cpuGaugeField::exchangeExtendedGhost(), quda::cudaGaugeField::exchangeGhost(), quda::cudaColorSpinorField::exchangeGhost(), quda::ExtractGhostArg< Float, nColor_, Order, nDim >::ExtractGhostArg(), quda::gaugefixingFFT(), gaugeLink_mgpu(), quda::GenericPackGhostLauncher< Float, block_float, Ns, Ms, Nc, Mc, Arg >::GenericPackGhostLauncher(), hisq_force_init(), hisq_force_test(), quda::cudaGaugeField::injectGhost(), main(), neighborIndex_mg(), quda::PackGhostArg< Field >::PackGhostArg(), quda::PGaugeExchange(), quda::cudaGaugeField::recvStart(), quda::colorspinor::FloatNOrder< Float, Ns, Nc, N, spin_project, huge_alloc >::resetGhost(), quda::cudaGaugeField::sendStart(), GaugeAlgTest::SetUp(), spinorNeighbor_5d(), quda::WuppertalSmearingArg< Float, Ns, Nc, gRecon >::WuppertalSmearingArg(), and quda::XSD::XSD().

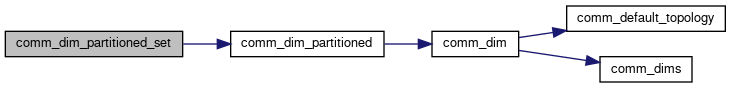

◆ comm_dim_partitioned_set()

| void comm_dim_partitioned_set | ( | int | dim | ) |

Definition at line 618 of file comm_common.cpp.

References comm_dim_partitioned(), manual_set_partition, and partition_string.

Referenced by commDimPartitionedSet().

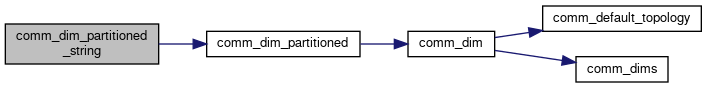

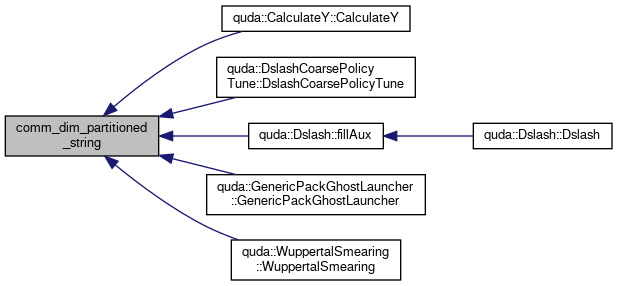

◆ comm_dim_partitioned_string()

| const char* comm_dim_partitioned_string | ( | const int * | comm_dim_override = 0 | ) |

Return a string that defines the comm partitioning (used as a tuneKey)

- Parameters

- comm_dim_override: Optional override for partitioning

- Returns

- String specifying comm partitioning

Definition at line 782 of file comm_common.cpp.

References comm_dim_partitioned(), partition_override_string, and partition_string.

Referenced by quda::CalculateY< from_coarse, Float, fineSpin, fineColor, coarseSpin, coarseColor, Arg >::CalculateY(), quda::DslashCoarsePolicyTune::DslashCoarsePolicyTune(), quda::Dslash< Float >::fillAux(), quda::GenericPackGhostLauncher< Float, block_float, Ns, Ms, Nc, Mc, Arg >::GenericPackGhostLauncher(), and quda::WuppertalSmearing< Float, Ns, Nc, Arg >::WuppertalSmearing().

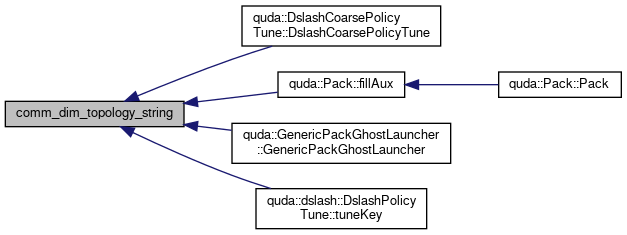

◆ comm_dim_topology_string()

| const char* comm_dim_topology_string | ( | ) |

Return a string that defines the comm topology (for use as a tuneKey)

- Returns

- String specifying comm topology

Definition at line 797 of file comm_common.cpp.

References topology_string.

Referenced by quda::DslashCoarsePolicyTune::DslashCoarsePolicyTune(), quda::Pack< Float, nColor, spin_project >::fillAux(), quda::GenericPackGhostLauncher< Float, block_float, Ns, Ms, Nc, Mc, Arg >::GenericPackGhostLauncher(), and quda::dslash::DslashPolicyTune< Dslash >::tuneKey().

◆ comm_dims()

| const int* comm_dims | ( | const Topology * | topo | ) |

Definition at line 328 of file comm_common.cpp.

References Topology_s::dims.

Referenced by comm_dim(), and comm_rank_displaced().

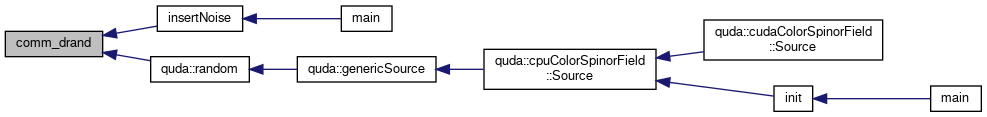

◆ comm_drand()

| double comm_drand | ( | void | ) |

We provide our own random number generator to avoid re-seeding rand(), which might also be used by the calling application. This is a clone of drand48(), provided by stdlib.h on UNIX.

- Returns

- a random double in the interval [0,1)

Definition at line 82 of file comm_common.cpp.

References rand_seed.

Referenced by insertNoise(), and quda::random().
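For readers unfamiliar with the 48-bit generator family in stdlib.h, the sketch below shows the linear congruential recurrence that drand48() is specified to use, with the state scaled into [0,1). The multiplier and increment are the standard drand48 constants. `lcg_state` and `lcg_drand` are hypothetical names, the initial state is arbitrary, and whether comm_drand() matches this line for line is an assumption.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical generator state; the initial value here is arbitrary.
static std::uint64_t lcg_state = 137;

// Sketch of a 48-bit linear congruential generator using the drand48()
// recurrence: state = (a * state + c) mod 2^48, returned as state / 2^48.
double lcg_drand()
{
  const std::uint64_t a = 0x5DEECE66DULL;      // drand48 multiplier
  const std::uint64_t c = 0xBULL;              // drand48 increment
  const std::uint64_t mask = (1ULL << 48) - 1; // keep the low 48 bits
  // Unsigned overflow wraps modulo 2^64, which leaves the low 48 bits exact.
  lcg_state = (a * lcg_state + c) & mask;
  assert(lcg_state <= mask);
  return static_cast<double>(lcg_state) / static_cast<double>(1ULL << 48);
}
```

Keeping the state in a private variable is what lets a library draw random numbers without disturbing the sequence an application gets from rand().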

◆ comm_enable_intranode()

| void comm_enable_intranode | ( | bool | enable | ) |

Enable / disable intra-node (non-peer-to-peer) communication.

- Parameters

- [in] enable: Boolean flag to enable / disable intra-node (non-peer-to-peer) communication

Definition at line 318 of file comm_common.cpp.

References enable_intranode.

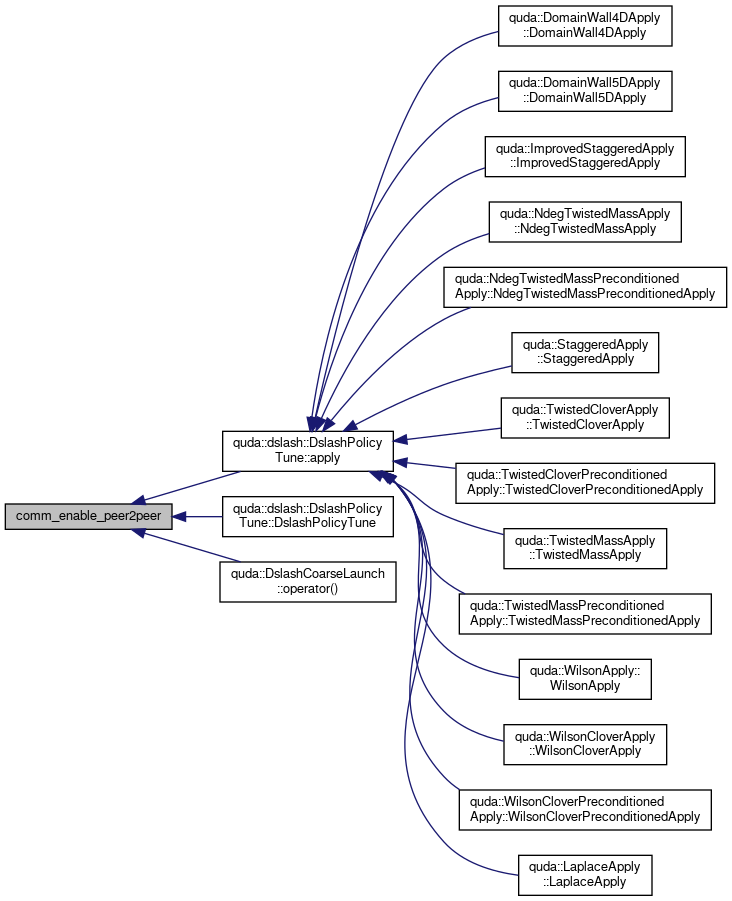

◆ comm_enable_peer2peer()

| void comm_enable_peer2peer | ( | bool | enable | ) |

Enable / disable peer-to-peer communication: used for dslash policies that do not presently support peer-to-peer communication.

- Parameters

- [in] enable: Boolean flag to enable / disable peer-to-peer communication

Definition at line 308 of file comm_common.cpp.

References enable_p2p.

Referenced by quda::dslash::DslashPolicyTune< Dslash >::apply(), quda::dslash::DslashPolicyTune< Dslash >::DslashPolicyTune(), and quda::DslashCoarseLaunch::operator()().

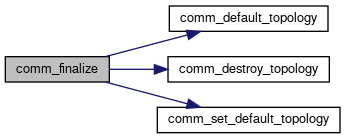

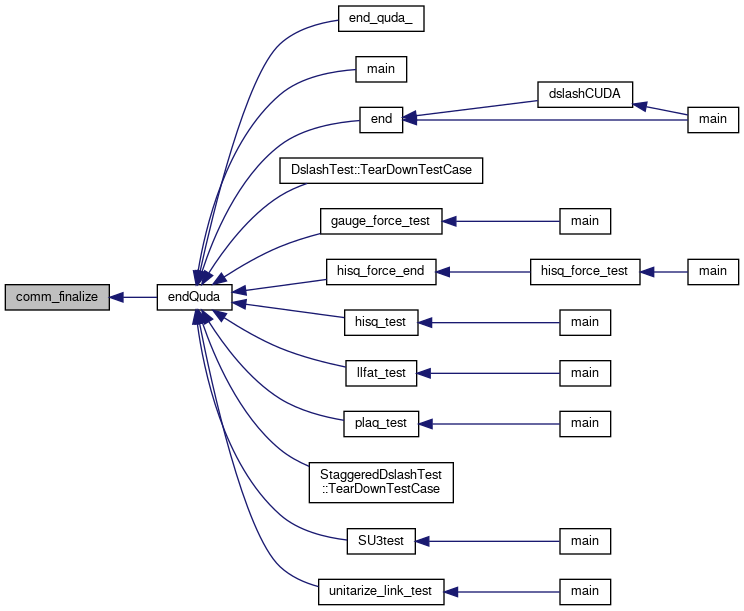

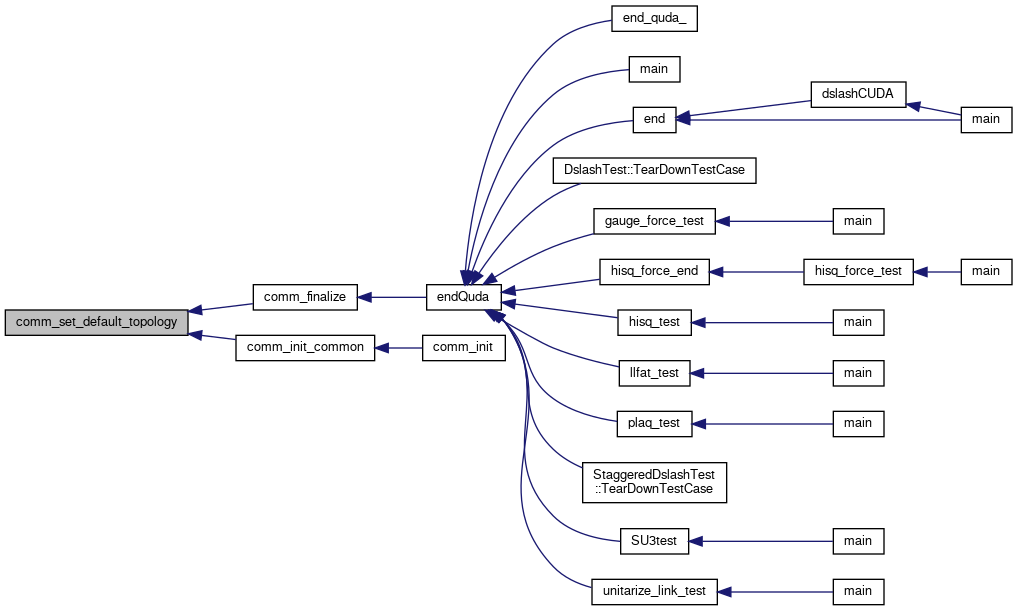

◆ comm_finalize()

| void comm_finalize | ( | void | ) |

Definition at line 606 of file comm_common.cpp.

References comm_default_topology(), comm_destroy_topology(), and comm_set_default_topology().

Referenced by endQuda().

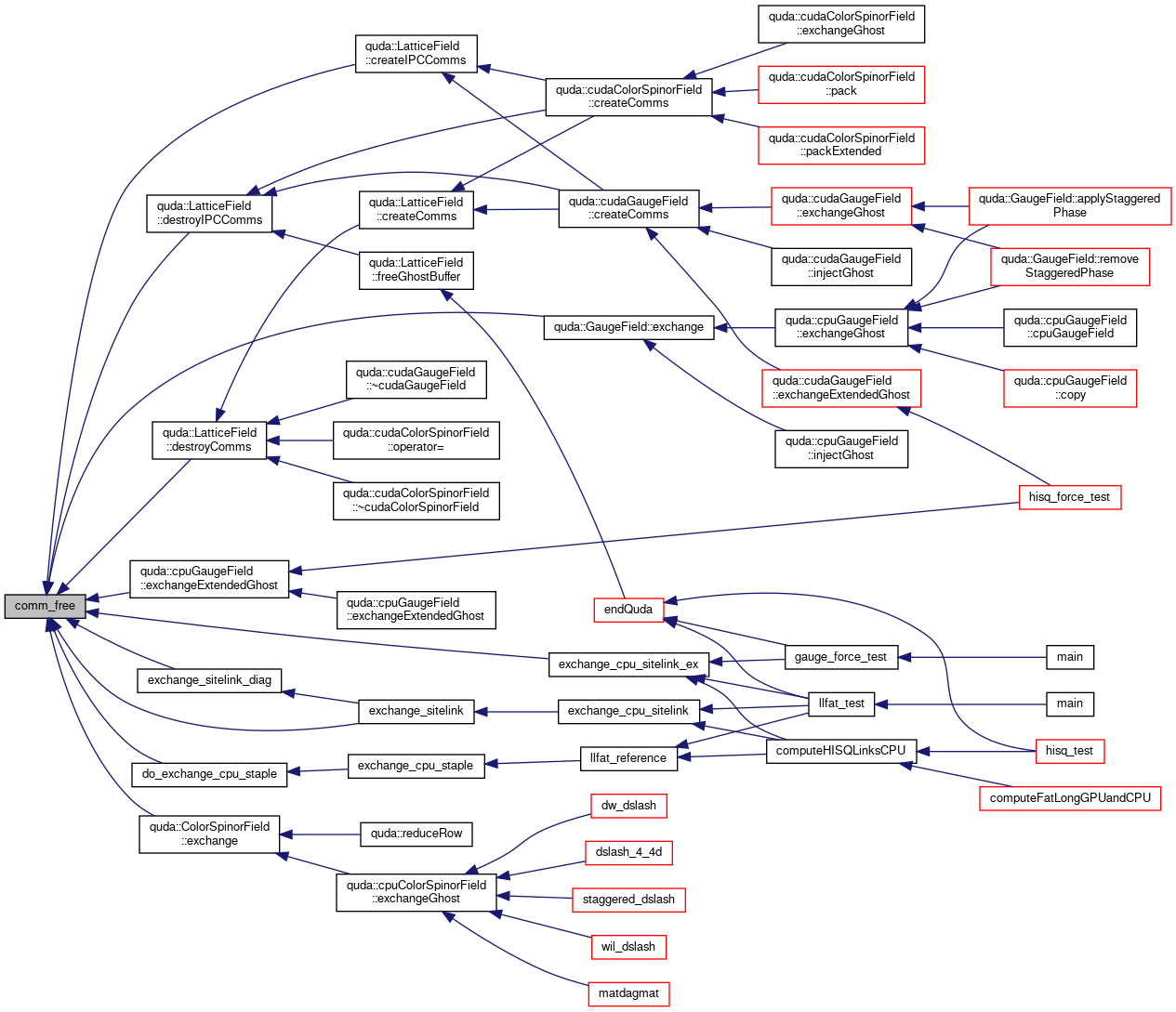

◆ comm_free()

| void comm_free | ( | MsgHandle *& | mh | ) |

Definition at line 207 of file comm_mpi.cpp.

References MsgHandle_s::custom, MsgHandle_s::datatype, MsgHandle_s::handle, host_free, MsgHandle_s::mem, MPI_CHECK, and MsgHandle_s::request.

Referenced by quda::LatticeField::createIPCComms(), quda::LatticeField::destroyComms(), quda::LatticeField::destroyIPCComms(), do_exchange_cpu_staple(), quda::GaugeField::exchange(), quda::ColorSpinorField::exchange(), exchange_cpu_sitelink_ex(), exchange_sitelink(), exchange_sitelink_diag(), and quda::cpuGaugeField::exchangeExtendedGhost().

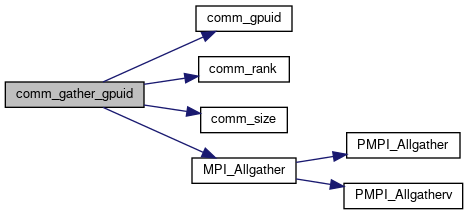

◆ comm_gather_gpuid()

| void comm_gather_gpuid | ( | int * | gpuid_recv_buf | ) |

Gather all GPU ids.

- Parameters

- [out] gpuid_recv_buf: int array of length comm_size() that will be filled with the GPU ids of all processes (in rank order)

Definition at line 53 of file comm_mpi.cpp.

References comm_gpuid(), comm_rank(), comm_size(), gpuid, MPI_Allgather(), and MPI_CHECK.

Referenced by comm_peer2peer_init().

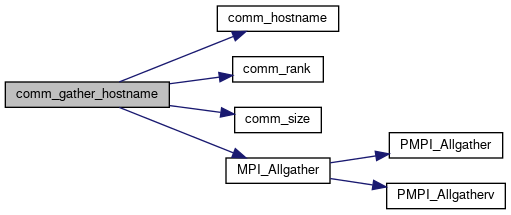

◆ comm_gather_hostname()

| void comm_gather_hostname | ( | char * | hostname_recv_buf | ) |

Gather all hostnames.

- Parameters

- [out] hostname_recv_buf: char array of length 128*comm_size() that will be filled with the hostnames of all processes. Hostnames are stored in rank order, with 128 bytes for each.

Definition at line 47 of file comm_mpi.cpp.

References comm_hostname(), comm_rank(), comm_size(), MPI_Allgather(), and MPI_CHECK.

Referenced by comm_init_common().

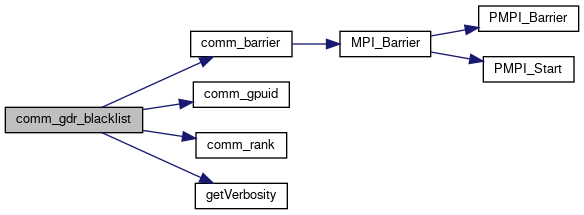

◆ comm_gdr_blacklist()

| bool comm_gdr_blacklist | ( | ) |

Query if GPU Direct RDMA communication is blacklisted for this GPU.

Definition at line 665 of file comm_common.cpp.

References comm_barrier(), comm_gpuid(), comm_rank(), errorQuda, getVerbosity(), and QUDA_SILENT.

Referenced by comm_peer2peer_init(), and quda::dslash::DslashFactory< Dslash >::create().

◆ comm_gdr_enabled()

| bool comm_gdr_enabled | ( | ) |

Query if GPU Direct RDMA communication is enabled (global setting).

Definition at line 649 of file comm_common.cpp.

Referenced by comm_config_string(), comm_peer2peer_init(), quda::cudaGaugeField::commsComplete(), quda::cudaColorSpinorField::commsQuery(), quda::cudaColorSpinorField::commsWait(), quda::LatticeField::createComms(), quda::DslashCoarsePolicyTune::DslashCoarsePolicyTune(), quda::dslash::DslashPolicyTune< Dslash >::DslashPolicyTune(), quda::cudaGaugeField::exchangeExtendedGhost(), quda::cudaGaugeField::exchangeGhost(), quda::cudaColorSpinorField::exchangeGhost(), quda::cudaGaugeField::injectGhost(), quda::cudaGaugeField::recvStart(), quda::cudaColorSpinorField::recvStart(), quda::cudaGaugeField::sendStart(), and quda::cudaColorSpinorField::sendStart().

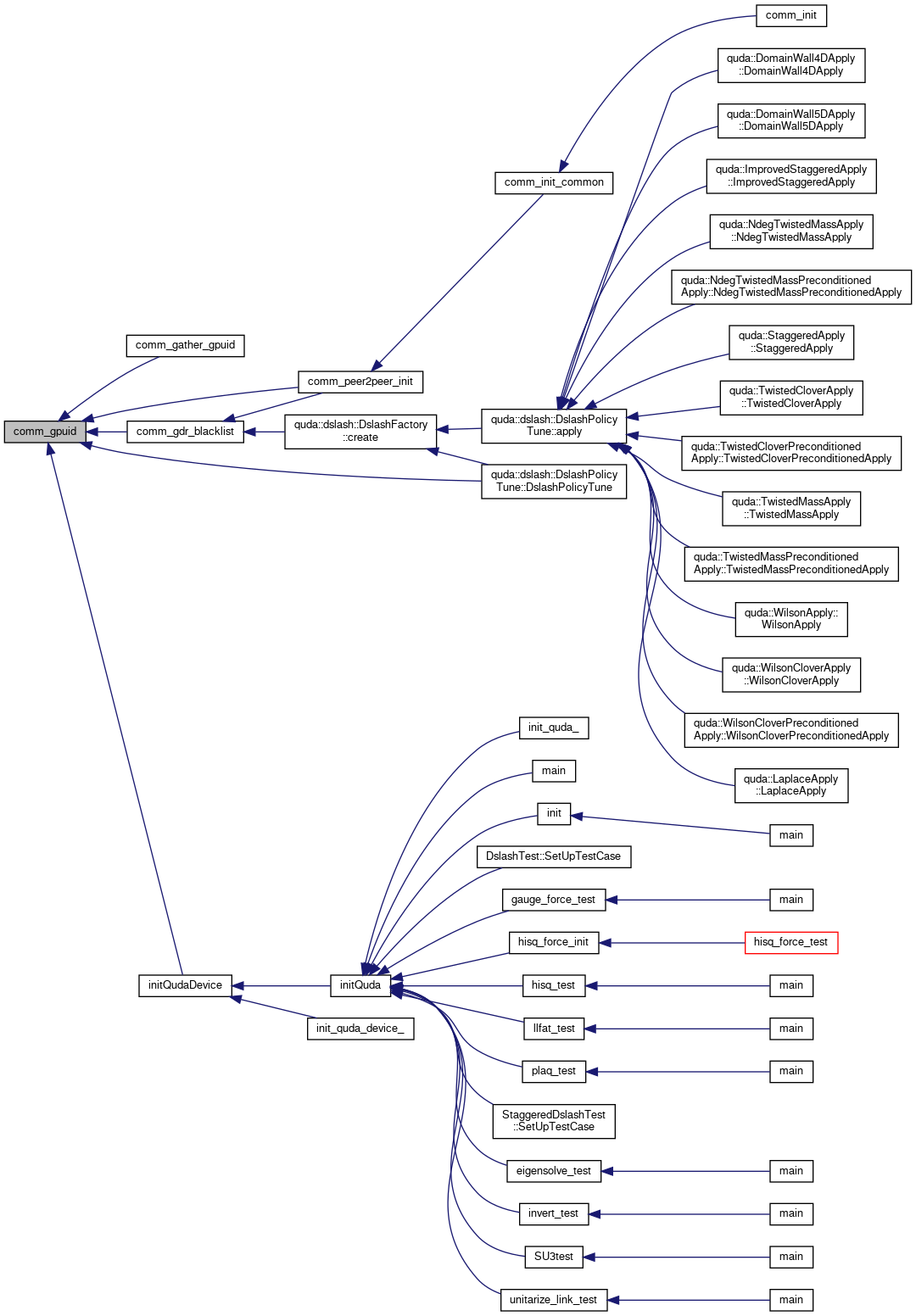

◆ comm_gpuid()

| int comm_gpuid | ( | void | ) |

- Returns

- GPU id associated with this process

Definition at line 146 of file comm_common.cpp.

References gpuid.

Referenced by comm_gather_gpuid(), comm_gdr_blacklist(), comm_peer2peer_init(), quda::dslash::DslashPolicyTune< Dslash >::DslashPolicyTune(), and initQudaDevice().

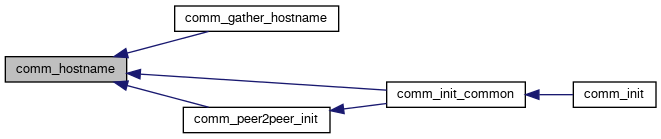

◆ comm_hostname()

| char* comm_hostname | ( | void | ) |

Definition at line 58 of file comm_common.cpp.

Referenced by comm_gather_hostname(), comm_init_common(), and comm_peer2peer_init().

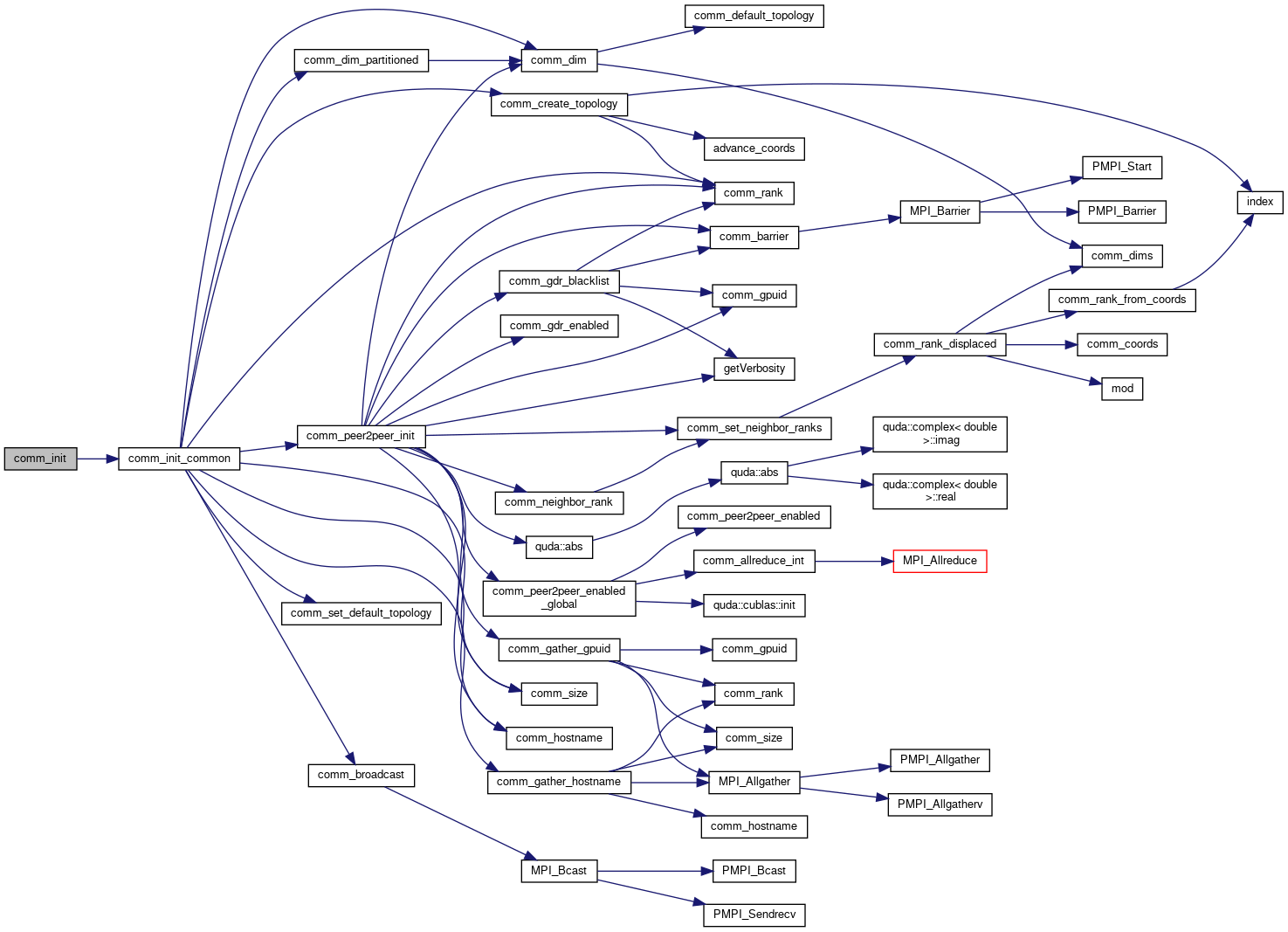

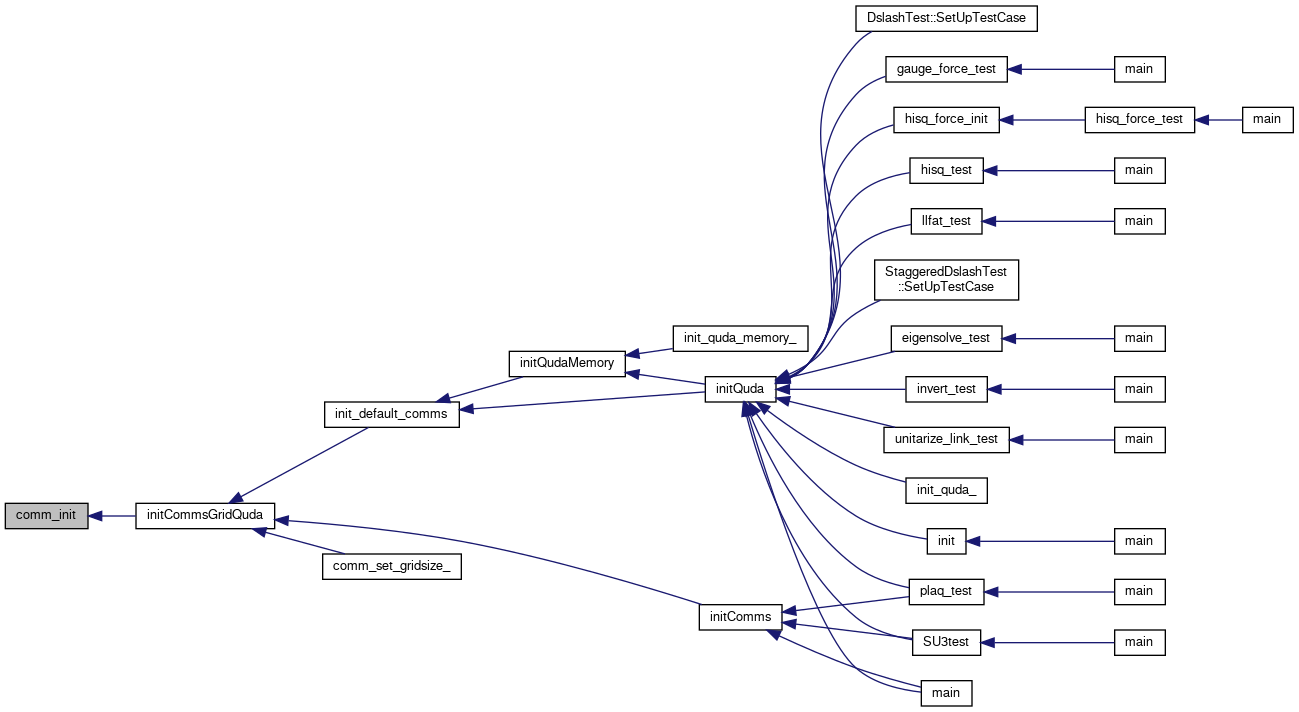

◆ comm_init()

| void comm_init | ( | int | ndim, |

| const int * | dims, | ||

| QudaCommsMap | rank_from_coords, | ||

| void * | map_data | ||

| ) |

Initialize the communications. Implemented in comm_single.cpp, comm_qmp.cpp, and comm_mpi.cpp; the comm_single.cpp variant is a dummy communications layer for the single-GPU backend.

Definition at line 58 of file comm_mpi.cpp.

References comm_init_common(), errorQuda, initialized, MPI_CHECK, ndim, rank, and size.

Referenced by initCommsGridQuda().

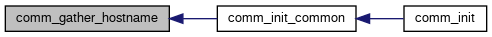

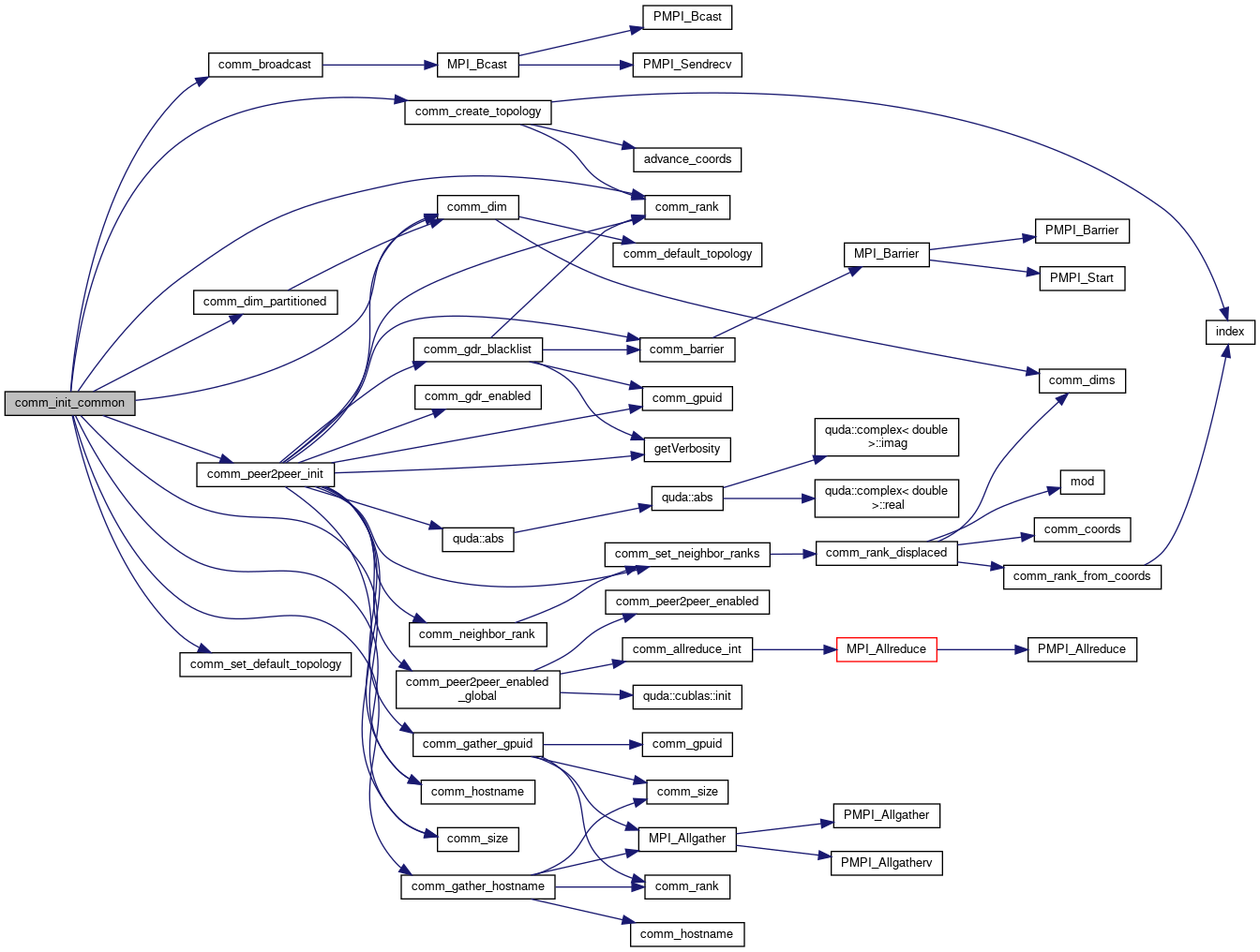
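The `rank_from_coords` argument is a `QudaCommsMap` callback, and `map_data` is handed to it as the opaque `fdata` pointer. As a hedged illustration, the sketch below shows one possible callback: a lexicographic mapping in which the last dimension runs fastest. The `GridInfo` struct and `lex_rank_from_coords` function are hypothetical names invented for this example, and the typedef is repeated only so the snippet compiles on its own.

```cpp
// Repeated from the header so the sketch is self-contained.
typedef int (*QudaCommsMap)(const int *coords, void *fdata);

// Hypothetical payload passed through the opaque map_data / fdata pointer.
struct GridInfo {
  int ndim;        // number of grid dimensions
  const int *dims; // extent of the process grid in each dimension
};

// Example callback: lexicographic rank with the last dimension running fastest.
// This ordering is an assumption chosen for illustration; any consistent
// mapping of coordinates to ranks would fit the QudaCommsMap signature.
int lex_rank_from_coords(const int *coords, void *fdata)
{
  const GridInfo *grid = static_cast<const GridInfo *>(fdata);
  int rank = coords[0];
  for (int i = 1; i < grid->ndim; i++) rank = grid->dims[i] * rank + coords[i];
  return rank;
}

// The callback type-checks against the typedef.
QudaCommsMap example_map = lex_rank_from_coords;
```

With a `GridInfo grid` describing a four-dimensional process grid, such a callback would be passed as `comm_init(4, dims, lex_rank_from_coords, &grid)`.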

◆ comm_init_common()

| void comm_init_common | ( | int | ndim, |

| const int * | dims, | ||

| QudaCommsMap | rank_from_coords, | ||

| void * | map_data | ||

| ) |

Initialize the communications common to all communications abstractions.

Definition at line 700 of file comm_common.cpp.

References comm_broadcast(), comm_create_topology(), comm_dim(), comm_dim_partitioned(), comm_gather_hostname(), comm_hostname(), comm_peer2peer_init(), comm_rank(), comm_set_default_topology(), comm_size(), deterministic_reduce, device, errorQuda, gpuid, host_free, partition_string, safe_malloc, and topology_string.

Referenced by comm_init().

◆ comm_intranode_enabled()

| bool comm_intranode_enabled | ( | int | dir, |

| int | dim | ||

| ) |

Query if intra-node (non-peer-to-peer) communication is enabled in a given dimension and direction.

- Parameters

- dir: Direction (0 = backwards, 1 = forwards)

- dim: Dimension (0-3)

- Returns

- Whether intra-node communication is enabled

Definition at line 314 of file comm_common.cpp.

References enable_intranode, and intranode_enabled.

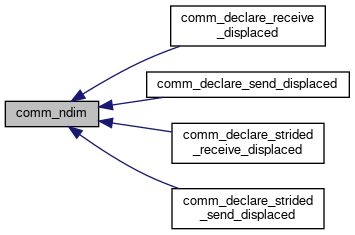

◆ comm_ndim()

| int comm_ndim | ( | const Topology * | topo | ) |

Definition at line 322 of file comm_common.cpp.

References Topology_s::ndim.

Referenced by comm_declare_receive_displaced(), comm_declare_send_displaced(), comm_declare_strided_receive_displaced(), and comm_declare_strided_send_displaced().

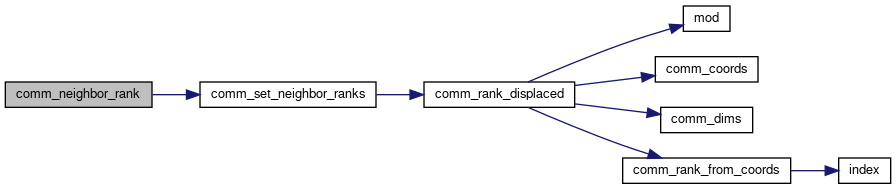

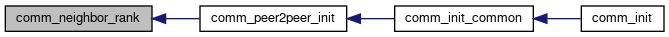

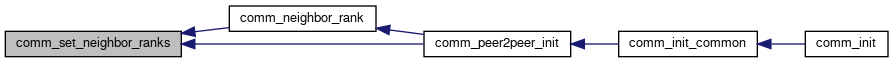

◆ comm_neighbor_rank()

| int comm_neighbor_rank | ( | int | dir, |

| int | dim | ||

| ) |

Definition at line 416 of file comm_common.cpp.

References comm_set_neighbor_ranks(), neighbor_rank, and neighbors_cached.

Referenced by comm_peer2peer_init().

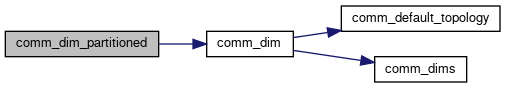

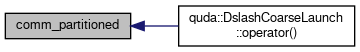

◆ comm_partitioned()

| int comm_partitioned | ( | ) |

Loop over comm_dim_partitioned(dim) for all comms dimensions.

- Returns

- Whether any communications dimensions are partitioned

Definition at line 640 of file comm_common.cpp.

References comm_dim_partitioned().

Referenced by quda::DslashCoarseLaunch::operator()().
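The description above amounts to an "any of" reduction over the per-dimension query. A minimal sketch of that loop follows; it uses a stub array and a stub query function (`partitioned_stub`, `comm_dim_partitioned_stub`, both hypothetical) in place of the real comm_dim_partitioned(), and assumes four communication dimensions.

```cpp
// Stand-in for the per-dimension partitioning state. In QUDA this would be
// queried through comm_dim_partitioned(dim); the stub keeps the sketch runnable.
static int partitioned_stub[4] = {0, 0, 0, 0};

int comm_dim_partitioned_stub(int dim) { return partitioned_stub[dim]; }

// Returns 1 if any dimension is partitioned, 0 otherwise.
// Assumption: ndim defaults to 4 communication dimensions.
int any_dim_partitioned(int ndim = 4)
{
  int partitioned = 0;
  for (int dim = 0; dim < ndim; dim++) {
    partitioned = partitioned || comm_dim_partitioned_stub(dim);
  }
  return partitioned;
}
```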

◆ comm_peer2peer_enabled()

| bool comm_peer2peer_enabled | ( | int | dir, |

| int | dim | ||

| ) |

Query if peer-to-peer communication is enabled in a given dimension and direction.

- Parameters

- dir: Direction (0 = backwards, 1 = forwards)

- dim: Dimension (0-3)

- Returns

- Whether peer-to-peer is enabled

Definition at line 285 of file comm_common.cpp.

References enable_p2p, and peer2peer_enabled.

Referenced by comm_peer2peer_enabled_global(), quda::dslash::commsComplete(), quda::cudaGaugeField::commsComplete(), quda::cudaColorSpinorField::commsQuery(), quda::cudaColorSpinorField::commsWait(), quda::dslash::completeDslash(), quda::LatticeField::createIPCComms(), quda::LatticeField::destroyIPCComms(), quda::cudaGaugeField::exchangeExtendedGhost(), quda::cudaGaugeField::exchangeGhost(), quda::cudaColorSpinorField::exchangeGhost(), quda::cudaColorSpinorField::gather(), quda::dslash::getStreamIndex(), quda::cudaGaugeField::injectGhost(), quda::dslash::issueGather(), quda::dslash::issuePack(), quda::dslash::DslashBasic< Dslash >::operator()(), quda::dslash::DslashFusedExterior< Dslash >::operator()(), quda::dslash::DslashGDR< Dslash >::operator()(), quda::dslash::DslashFusedGDR< Dslash >::operator()(), quda::dslash::DslashGDRRecv< Dslash >::operator()(), quda::dslash::DslashFusedGDRRecv< Dslash >::operator()(), quda::dslash::DslashZeroCopyPack< Dslash >::operator()(), quda::dslash::DslashFusedZeroCopyPack< Dslash >::operator()(), quda::dslash::DslashZeroCopyPackGDRRecv< Dslash >::operator()(), quda::dslash::DslashFusedZeroCopyPackGDRRecv< Dslash >::operator()(), quda::dslash::DslashZeroCopy< Dslash >::operator()(), quda::dslash::DslashFusedZeroCopy< Dslash >::operator()(), quda::cudaGaugeField::recvStart(), quda::cudaColorSpinorField::recvStart(), quda::cudaColorSpinorField::scatter(), quda::cudaGaugeField::sendStart(), quda::cudaColorSpinorField::sendStart(), quda::Dslash< Float >::setParam(), and DslashCuda::setParam().

◆ comm_peer2peer_enabled_global()

| int comm_peer2peer_enabled_global | ( | ) |

Query which peer-to-peer communication modes are enabled globally.

- Returns

- 2-bit number reporting 1 for copy engine, 2 for remote writes

Definition at line 289 of file comm_common.cpp.

References comm_allreduce_int(), comm_peer2peer_enabled(), enable_p2p, enable_peer_to_peer, and quda::cublas::init().

Referenced by quda::dslash::DslashPolicyTune< Dslash >::apply(), comm_config_string(), comm_peer2peer_init(), quda::dslash::DslashPolicyTune< Dslash >::DslashPolicyTune(), quda::cudaColorSpinorField::exchangeGhost(), quda::Pack< Float, nColor, spin_project >::fillAux(), and quda::dslash::setMappedGhost().

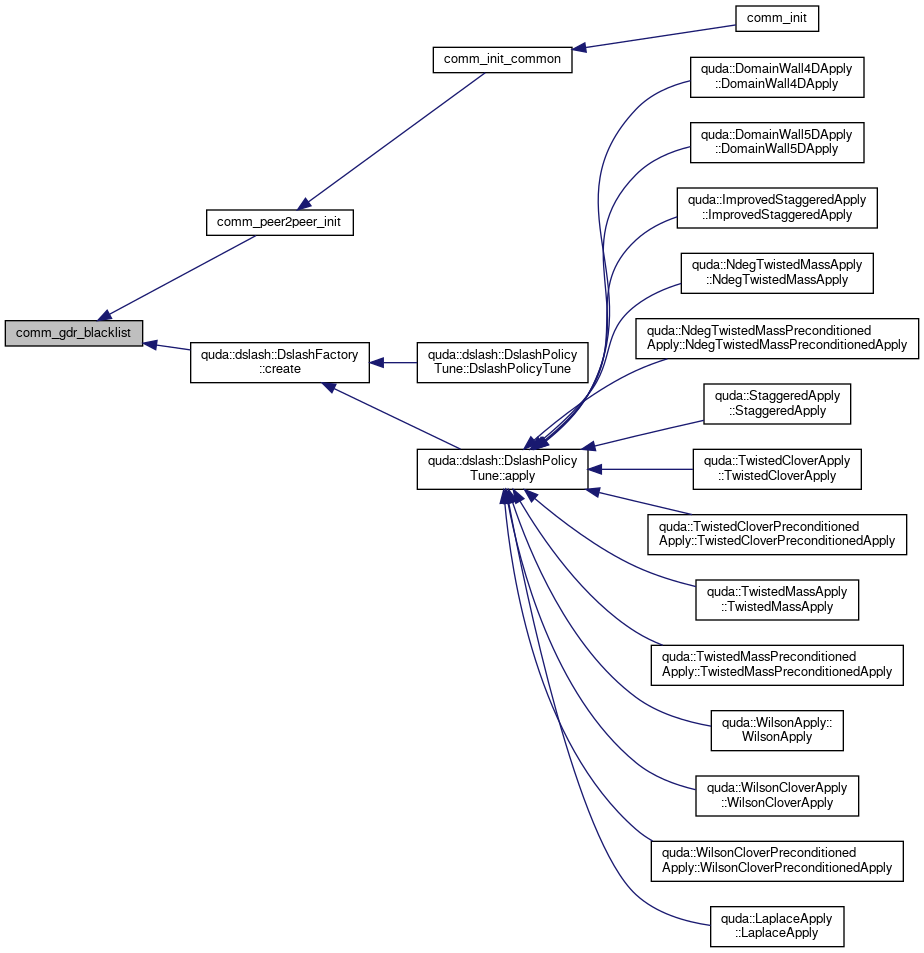

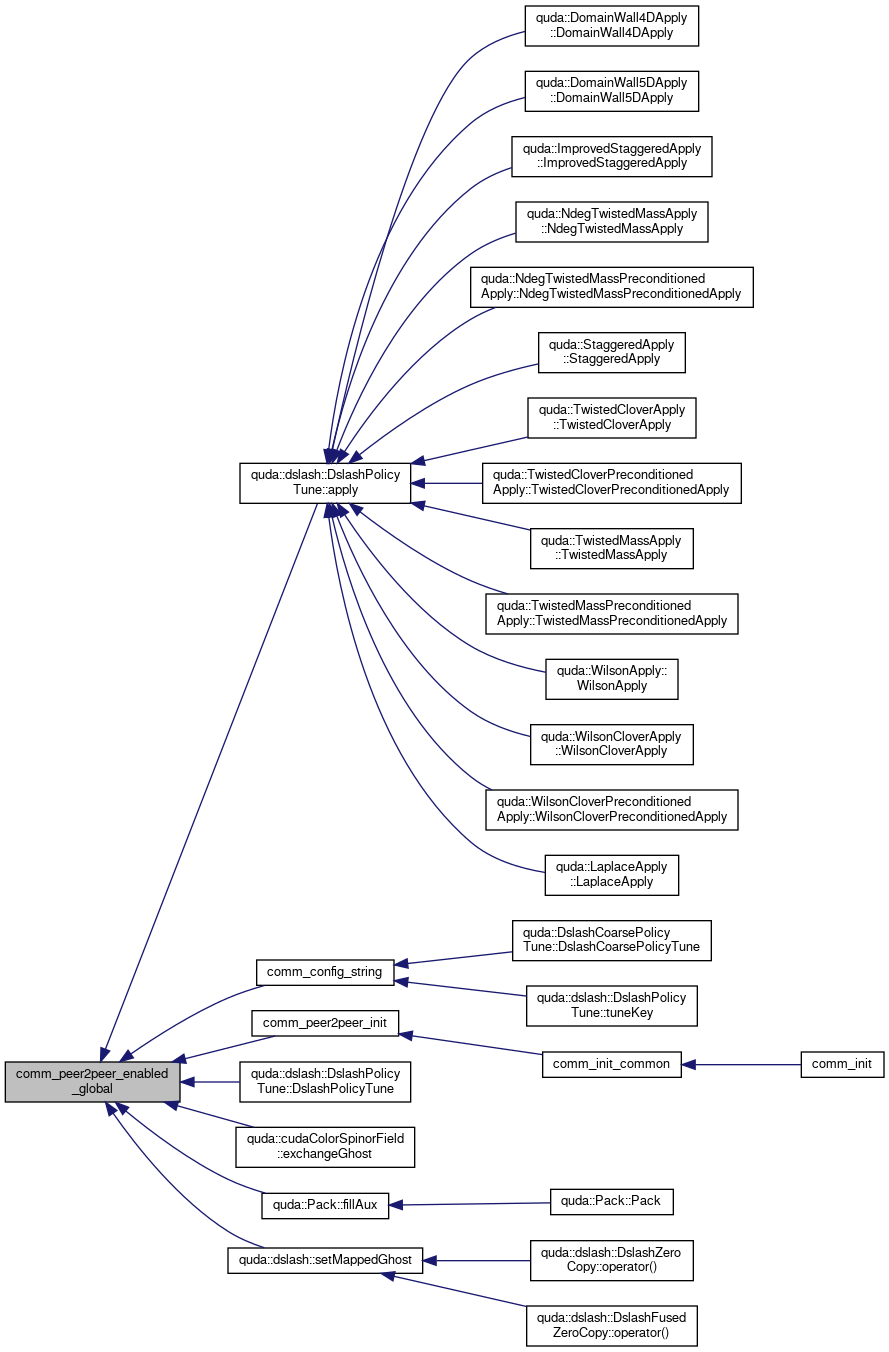

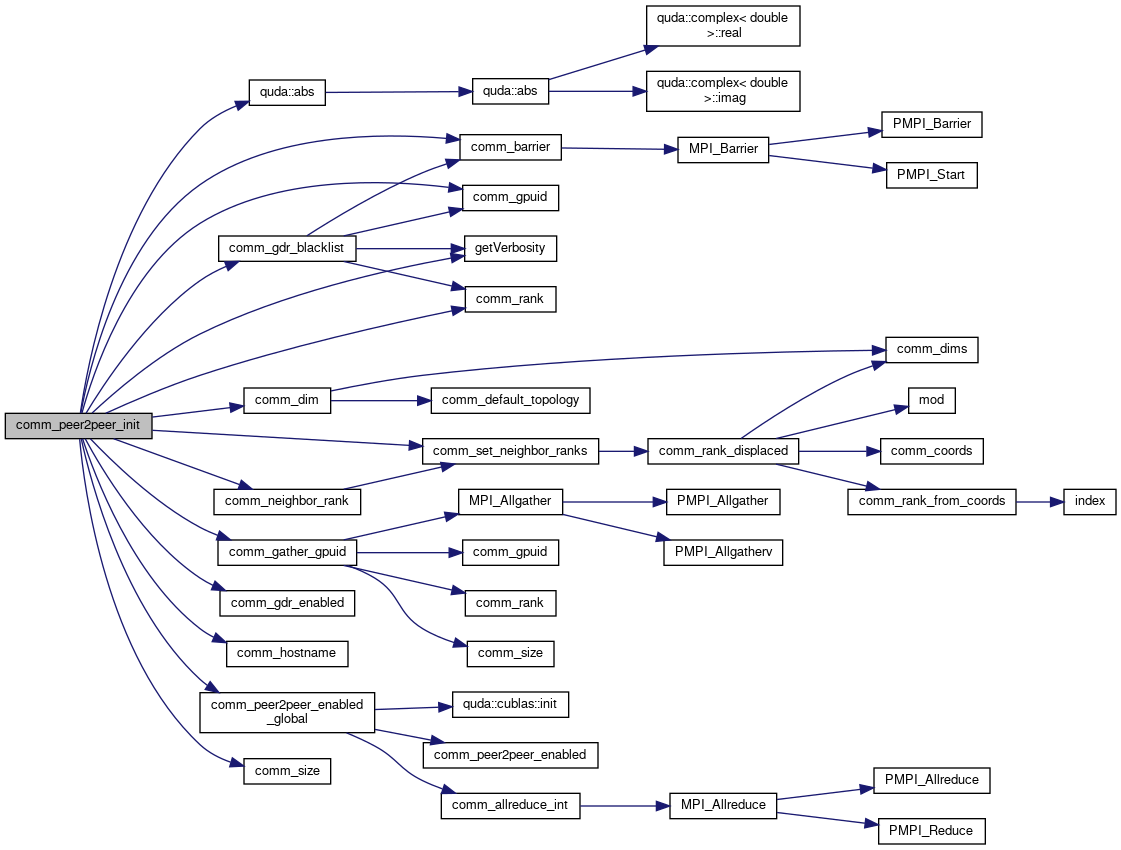
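Since comm_peer2peer_enabled_global() returns a 2-bit number (1 for copy engine, 2 for remote writes), callers can test each bit separately. The sketch below shows that decoding; `P2PCapabilities` and `decode_p2p_global` are hypothetical names introduced for illustration, not part of the documented interface.

```cpp
// Hypothetical decoded view of the 2-bit value.
struct P2PCapabilities {
  bool copy_engine;  // bit value 1
  bool remote_write; // bit value 2
};

// Split the 2-bit flag value into its two components.
P2PCapabilities decode_p2p_global(int flags)
{
  return P2PCapabilities{(flags & 1) != 0, (flags & 2) != 0};
}
```

For example, a return value of 3 would mean both copy-engine transfers and remote writes are enabled, while 0 would mean peer-to-peer is not enabled at all.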

◆ comm_peer2peer_init()

| void comm_peer2peer_init | ( | const char * | hostname_recv_buf | ) |

Enable peer-to-peer communication.

- Parameters

- hostname_recv_buf: Array that holds all process hostnames

Definition at line 163 of file comm_common.cpp.

References quda::abs(), checkCudaErrorNoSync, comm_barrier(), comm_dim(), comm_gather_gpuid(), comm_gdr_blacklist(), comm_gdr_enabled(), comm_gpuid(), comm_hostname(), comm_neighbor_rank(), comm_peer2peer_enabled_global(), comm_rank(), comm_set_neighbor_ranks(), comm_size(), enable_peer_to_peer, errorQuda, getVerbosity(), gpuid, host_free, intranode_enabled, neighbor_rank, peer2peer_enabled, peer2peer_init, peer2peer_present, printfQuda, QUDA_SILENT, and safe_malloc.

Referenced by comm_init_common().

◆ comm_peer2peer_present()

| bool comm_peer2peer_present | ( | ) |

Returns true if any peer-to-peer capability is present on this system (regardless of whether it has been disabled or not). We use this, for example, to determine if we need to allocate pinned device memory or not.

Definition at line 281 of file comm_common.cpp.

References peer2peer_present.

Referenced by quda::device_pinned_free_(), and quda::device_pinned_malloc_().

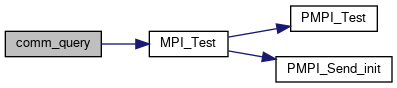

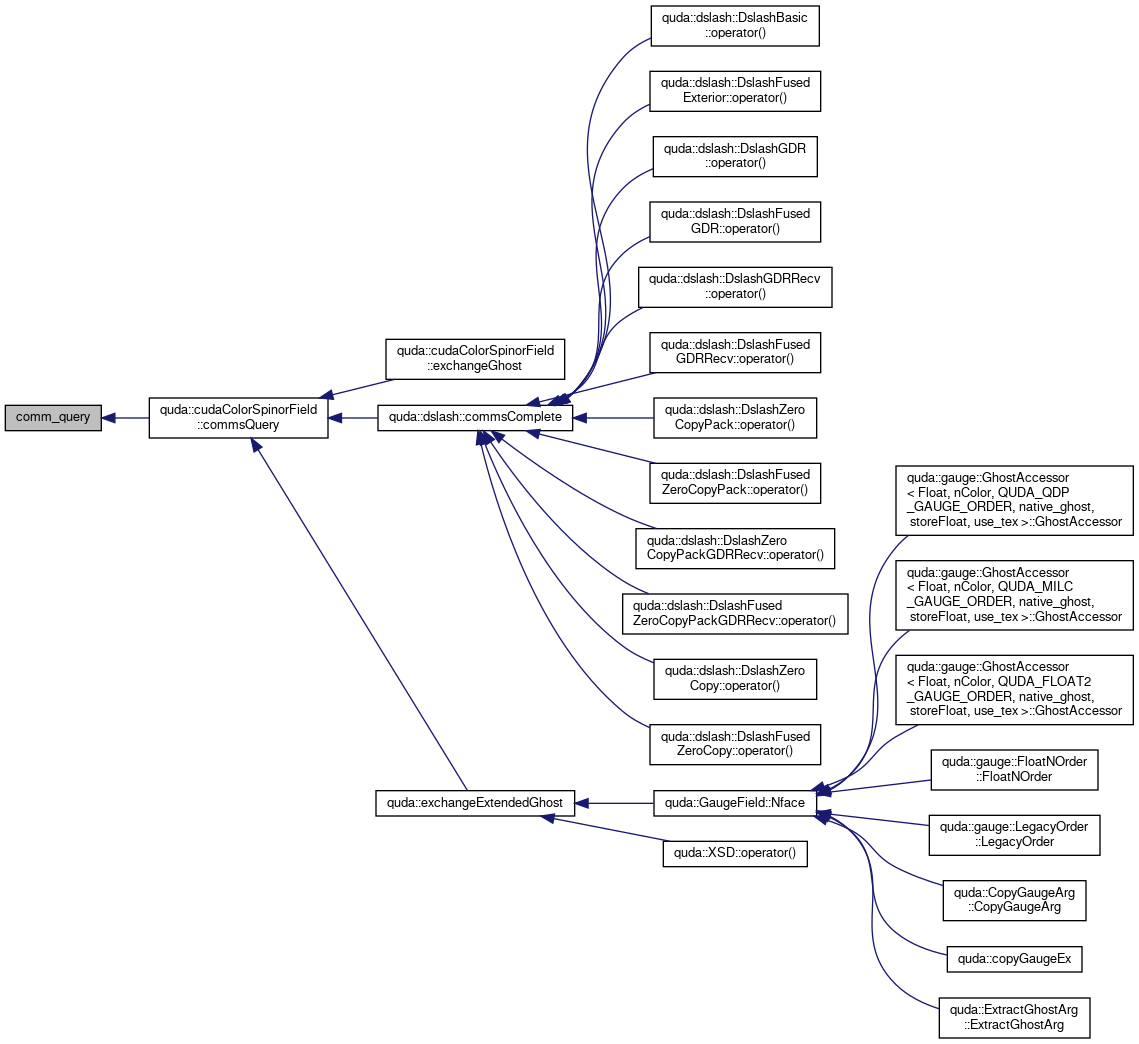

◆ comm_query()

| int comm_query | ( | MsgHandle * | mh | ) |

Definition at line 228 of file comm_mpi.cpp.

References MsgHandle_s::handle, MPI_CHECK, MPI_Test(), and MsgHandle_s::request.

Referenced by quda::cudaColorSpinorField::commsQuery().

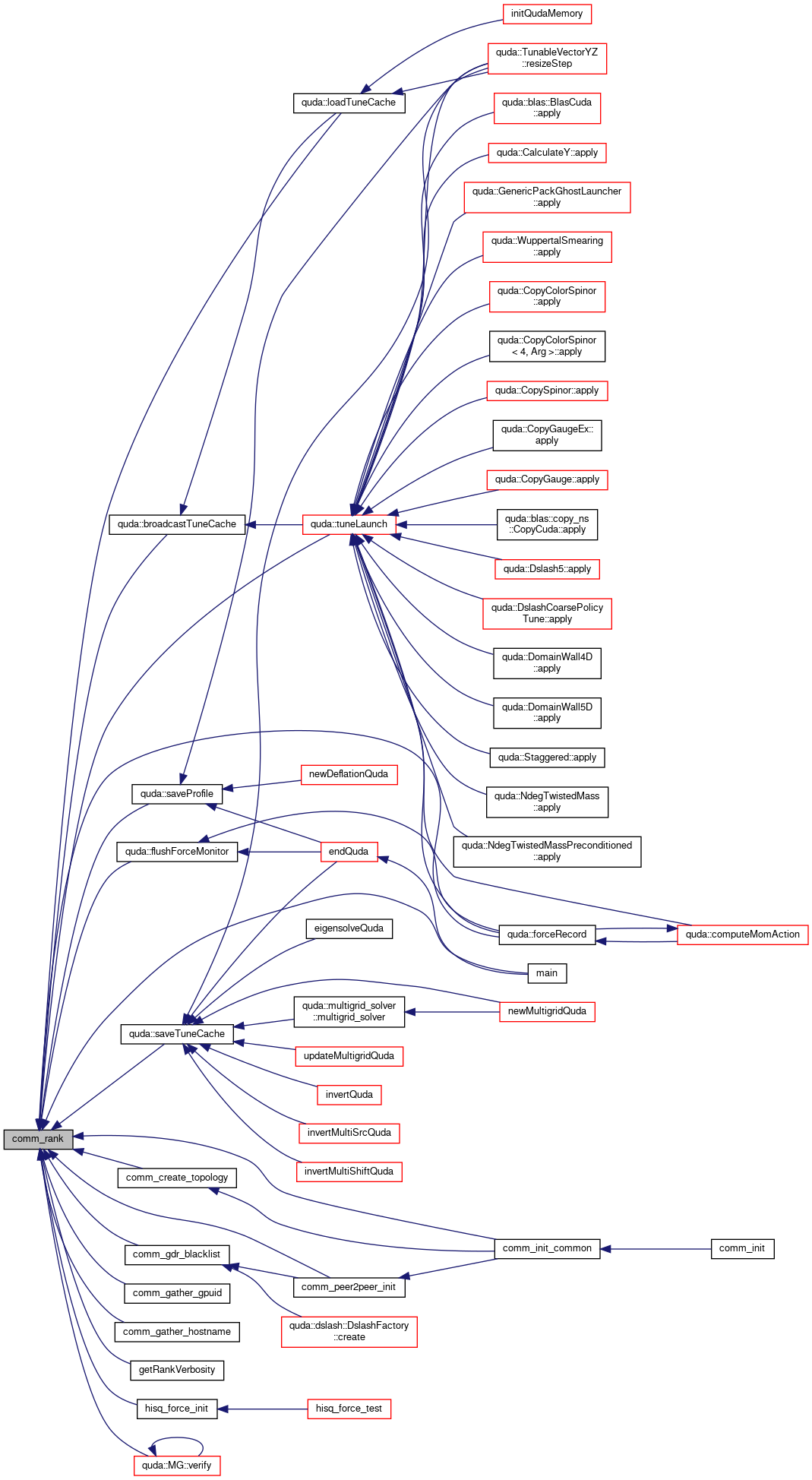

◆ comm_rank()

| int comm_rank | ( | void | ) |

- Returns

- Rank id of this process

Definition at line 82 of file comm_mpi.cpp.

References rank.

Referenced by quda::broadcastTuneCache(), comm_create_topology(), comm_gather_gpuid(), comm_gather_hostname(), comm_gdr_blacklist(), comm_init_common(), comm_peer2peer_init(), quda::flushForceMonitor(), quda::forceRecord(), getRankVerbosity(), hisq_force_init(), quda::loadTuneCache(), main(), quda::saveProfile(), quda::saveTuneCache(), quda::tuneLaunch(), and quda::MG::verify().

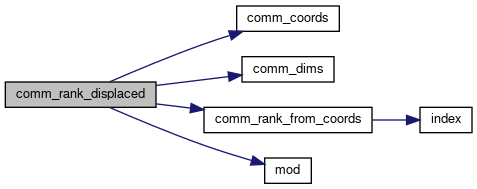

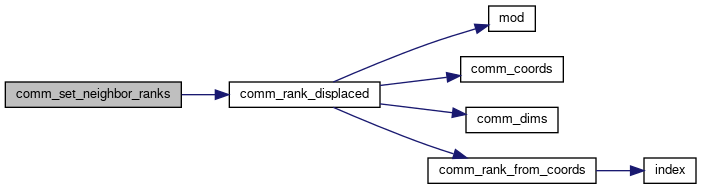

◆ comm_rank_displaced()

| int comm_rank_displaced | ( | const Topology * | topo, |

| const int | displacement[] | ||

| ) |

Definition at line 357 of file comm_common.cpp.

References comm_coords(), comm_dims(), comm_rank_from_coords(), Topology_s::coords, mod(), Topology_s::ndim, and QUDA_MAX_DIM.

Referenced by comm_declare_receive_displaced(), comm_declare_send_displaced(), comm_declare_strided_receive_displaced(), comm_declare_strided_send_displaced(), and comm_set_neighbor_ranks().

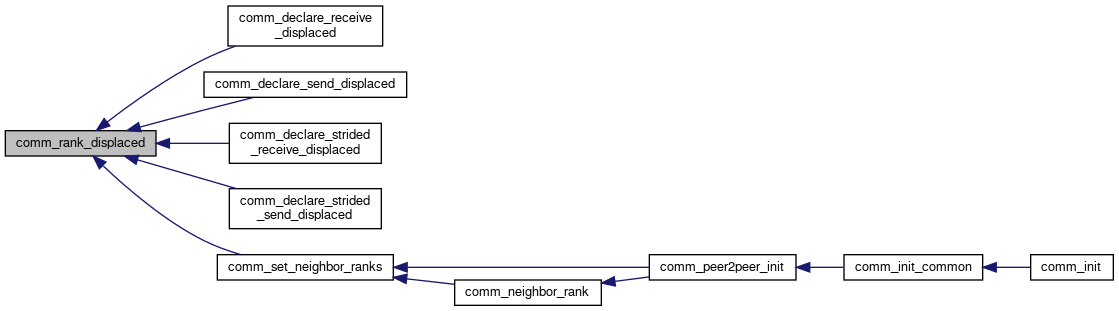
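The references to mod(), comm_coords() and comm_dims() suggest that the displaced rank is found by adding the displacement to this process's grid coordinates and wrapping each component around the grid extent. The sketch below illustrates that coordinate step only, under the assumption of periodic wrap-around; `wrap` and `displaced_coords` are hypothetical names, and the actual implementation in comm_common.cpp may differ.

```cpp
#include <cstddef>
#include <vector>

// Non-negative modulo, so that negative displacements wrap to the far side of the grid.
inline int wrap(int a, int b) { return ((a % b) + b) % b; }

// Assumed behaviour: each coordinate is shifted by the displacement and
// wrapped periodically into the range [0, dims[i]).
std::vector<int> displaced_coords(const std::vector<int> &dims, const std::vector<int> &coords,
                                  const std::vector<int> &displacement)
{
  std::vector<int> result(dims.size());
  for (std::size_t i = 0; i < dims.size(); i++) {
    result[i] = wrap(coords[i] + displacement[i], dims[i]);
  }
  return result;
}
```

The wrapped coordinates would then be converted to a rank, which is the role comm_rank_from_coords() plays in the reference list above.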

◆ comm_rank_from_coords()

| int comm_rank_from_coords | ( | const Topology * | topo, |

| const int * | coords | ||

| ) |

Definition at line 346 of file comm_common.cpp.

References Topology_s::dims, index(), Topology_s::ndim, and Topology_s::ranks.

Referenced by comm_rank_displaced().

◆ comm_set_default_topology()

| void comm_set_default_topology | ( | Topology * | topo | ) |

Definition at line 375 of file comm_common.cpp.

Referenced by comm_finalize(), and comm_init_common().

◆ comm_set_neighbor_ranks()

| void comm_set_neighbor_ranks | ( | Topology * | topo = NULL | ) |

Definition at line 394 of file comm_common.cpp.

References comm_rank_displaced(), default_topo, errorQuda, neighbor_rank, neighbors_cached, and QUDA_MAX_DIM.

Referenced by comm_neighbor_rank(), and comm_peer2peer_init().

◆ comm_set_tunekey_string()

| void comm_set_tunekey_string | ( | ) |

Create the topology and partition strings that are used in tuneKeys.

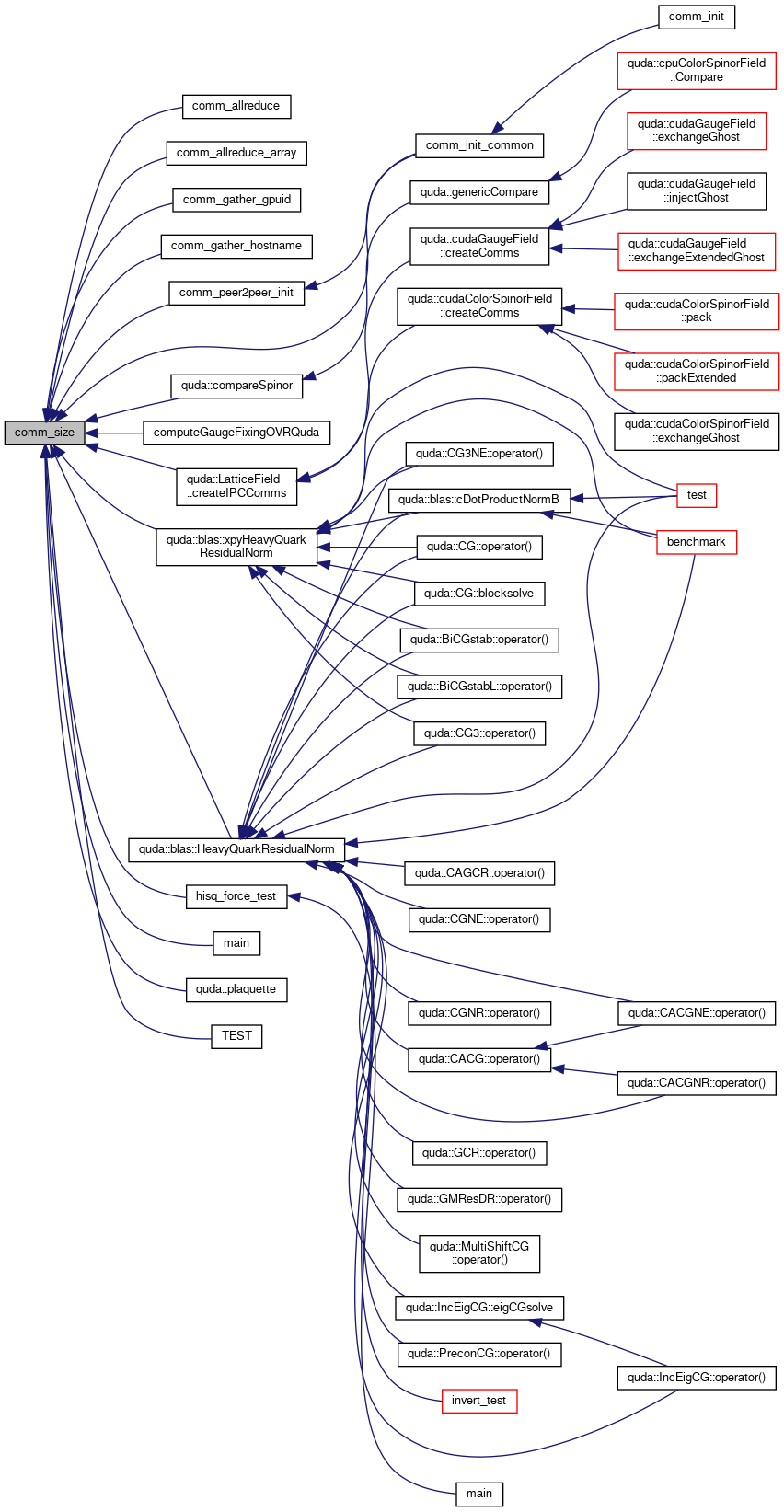

◆ comm_size()

| int comm_size | ( | void | ) |

- Returns

- Number of processes

Definition at line 88 of file comm_mpi.cpp.

References size.

Referenced by comm_allreduce(), comm_allreduce_array(), comm_gather_gpuid(), comm_gather_hostname(), comm_init_common(), comm_peer2peer_init(), quda::compareSpinor(), computeGaugeFixingOVRQuda(), quda::LatticeField::createIPCComms(), quda::blas::HeavyQuarkResidualNorm(), hisq_force_test(), main(), quda::plaquette(), TEST(), and quda::blas::xpyHeavyQuarkResidualNorm().

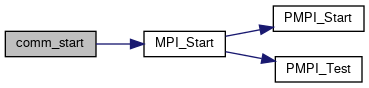

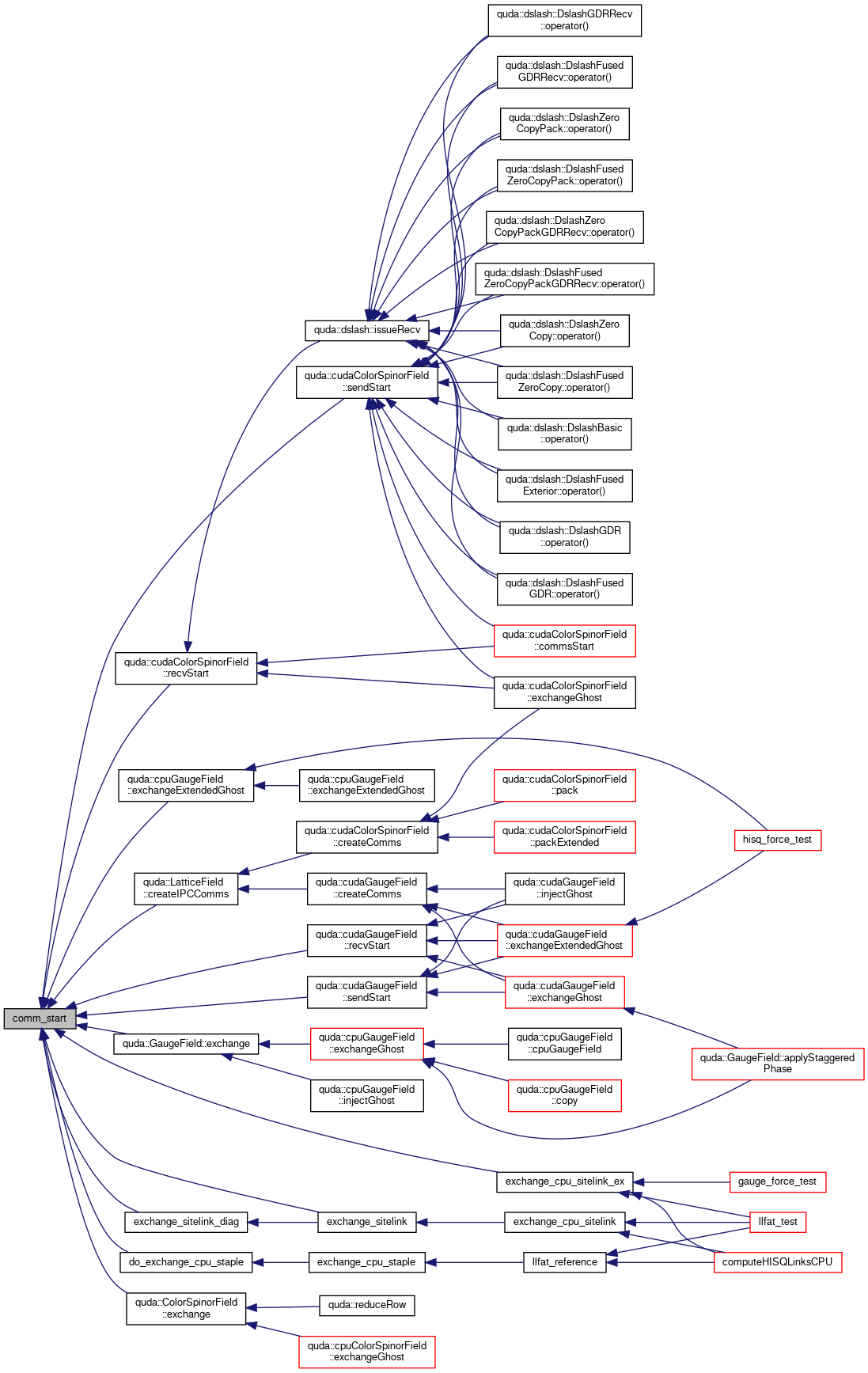

◆ comm_start()

| void comm_start | ( | MsgHandle * | mh | ) |

Definition at line 216 of file comm_mpi.cpp.

References MsgHandle_s::handle, MPI_CHECK, MPI_Start(), QMP_CHECK, and MsgHandle_s::request.

Referenced by quda::LatticeField::createIPCComms(), do_exchange_cpu_staple(), quda::GaugeField::exchange(), quda::ColorSpinorField::exchange(), exchange_cpu_sitelink_ex(), exchange_sitelink(), exchange_sitelink_diag(), quda::cpuGaugeField::exchangeExtendedGhost(), quda::cudaGaugeField::recvStart(), quda::cudaColorSpinorField::recvStart(), quda::cudaGaugeField::sendStart(), and quda::cudaColorSpinorField::sendStart().

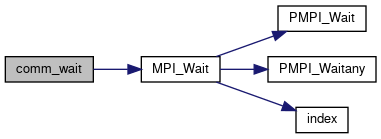

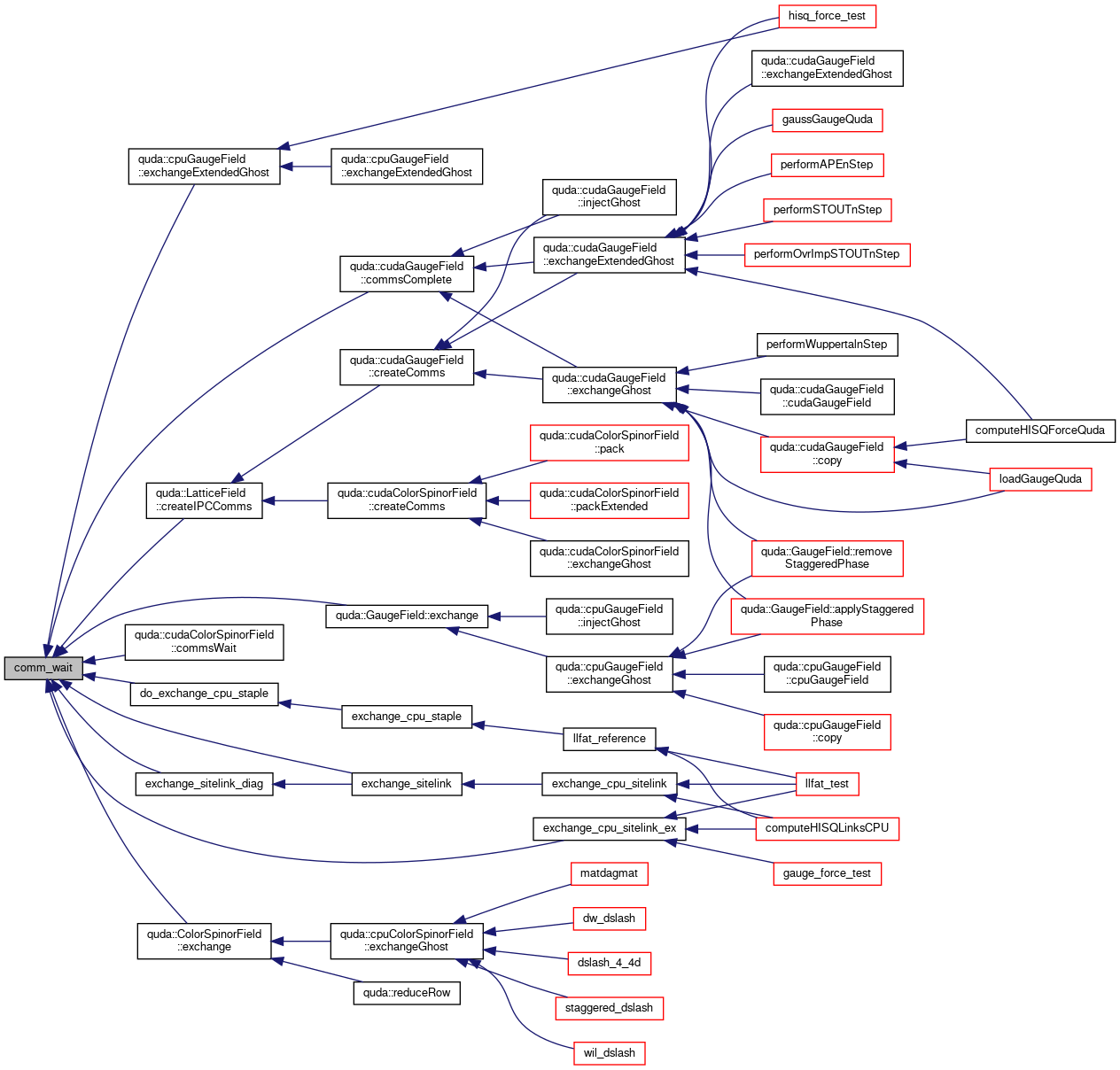

◆ comm_wait()

| void comm_wait | ( | MsgHandle * | mh | ) |

Definition at line 222 of file comm_mpi.cpp.

References MsgHandle_s::handle, MPI_CHECK, MPI_Wait(), QMP_CHECK, and MsgHandle_s::request.

Referenced by quda::cudaGaugeField::commsComplete(), quda::cudaColorSpinorField::commsWait(), quda::LatticeField::createIPCComms(), do_exchange_cpu_staple(), quda::GaugeField::exchange(), quda::ColorSpinorField::exchange(), exchange_cpu_sitelink_ex(), exchange_sitelink(), exchange_sitelink_diag(), and quda::cpuGaugeField::exchangeExtendedGhost().

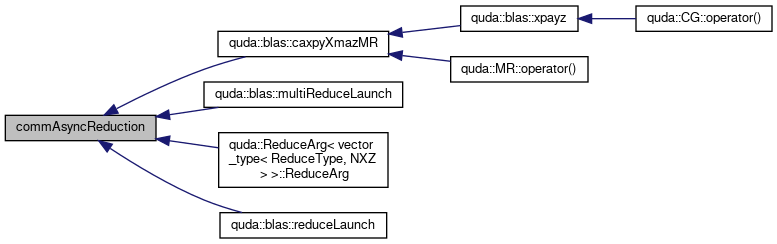

◆ commAsyncReduction()

| bool commAsyncReduction | ( | ) |

Definition at line 825 of file comm_common.cpp.

References asyncReduce.

Referenced by quda::blas::caxpyXmazMR(), quda::blas::multiReduceLaunch(), quda::ReduceArg< vector_type< ReduceType, NXZ > >::ReduceArg(), and quda::blas::reduceLaunch().

◆ commAsyncReductionSet()

| void commAsyncReductionSet | ( | bool | global_reduce | ) |

Definition at line 827 of file comm_common.cpp.

References asyncReduce.

Referenced by quda::MR::operator()().

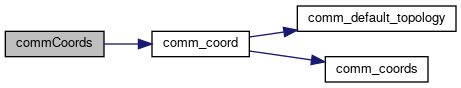

◆ commCoords()

| int commCoords | ( | int | ) |

Definition at line 813 of file comm_common.cpp.

References comm_coord().

Referenced by last_node_in_t(), and quda::Solver::Solver().

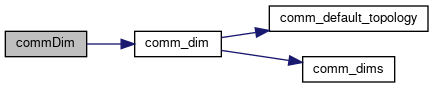

◆ commDim()

| int commDim | ( | int | ) |

Definition at line 811 of file comm_common.cpp.

References comm_dim().

◆ commDimPartitioned()

| int commDimPartitioned | ( | int | dir | ) |

Definition at line 815 of file comm_common.cpp.

References comm_dim_partitioned().

Referenced by quda::cudaColorSpinorField::commsQuery(), quda::cudaColorSpinorField::commsWait(), createCloverQuda(), quda::LatticeField::createComms(), quda::cudaColorSpinorField::createComms(), exchange_cpu_sitelink_ex(), exchange_sitelink(), exchange_sitelink_diag(), initQudaMemory(), loadGaugeQuda(), quda::cudaColorSpinorField::recvStart(), quda::cudaColorSpinorField::scatter(), quda::cudaColorSpinorField::scatterExtended(), quda::cudaColorSpinorField::sendStart(), quda::shiftColorSpinorField(), quda::ShiftColorSpinorFieldArg< Output, Input >::ShiftColorSpinorFieldArg(), and updateR().

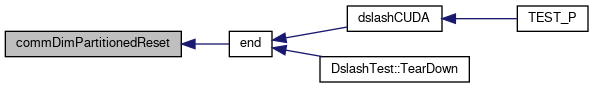

◆ commDimPartitionedReset()

| void commDimPartitionedReset | ( | ) |

Reset the comm dim partitioned array to zero.

This should only be needed for automated testing when different partitioning is applied within a single run.

Definition at line 819 of file comm_common.cpp.

References comm_dim_partitioned_reset().

Referenced by end().

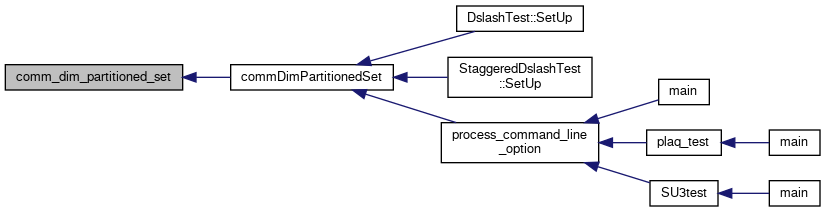

◆ commDimPartitionedSet()

| void commDimPartitionedSet | ( | int | dir | ) |

Definition at line 817 of file comm_common.cpp.

References comm_dim_partitioned_set().

Referenced by process_command_line_option(), StaggeredDslashTest::SetUp(), and DslashTest::SetUp().

◆ commGlobalReduction()

| bool commGlobalReduction | ( | ) |

Definition at line 821 of file comm_common.cpp.

References globalReduce.

Referenced by quda::tuneLaunch().

◆ commGlobalReductionSet()

| void commGlobalReductionSet | ( | bool | global_reduce | ) |

Definition at line 823 of file comm_common.cpp.

References globalReduce.

Referenced by quda::IncEigCG::eigCGsolve(), quda::PreconCG::operator()(), quda::MR::operator()(), and quda::SD::operator()().

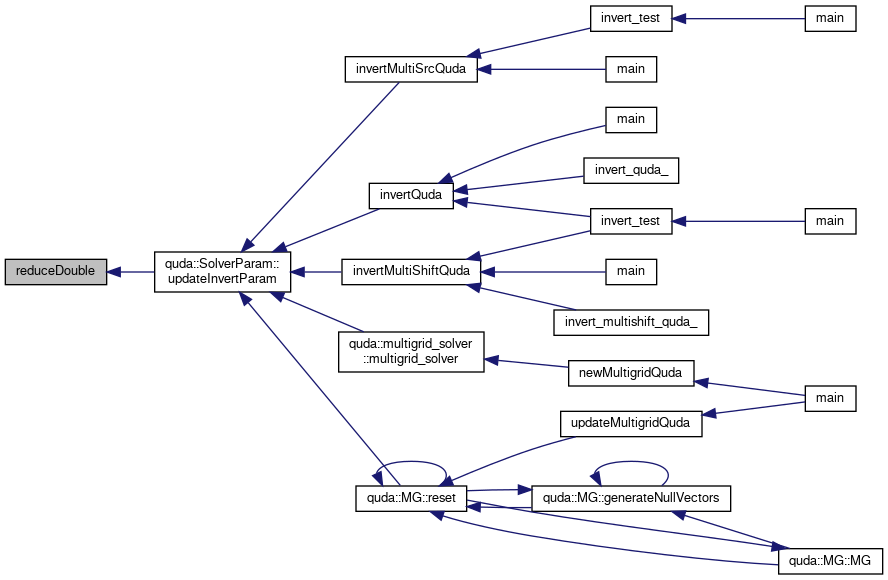
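commGlobalReduction() and commGlobalReductionSet() form a getter/setter pair for a global flag, and the setter is referenced from several solver operator() functions. One way a caller might toggle such a flag safely is a scope guard that restores the previous value on exit. The sketch below is an assumption about usage, not QUDA code: the `...Stub` functions stand in for the real pair, and `ScopedGlobalReduction` is a hypothetical class.

```cpp
// Stubs standing in for commGlobalReduction() / commGlobalReductionSet(),
// so that the sketch compiles without QUDA.
static bool global_reduce_stub = true;
bool commGlobalReductionStub() { return global_reduce_stub; }
void commGlobalReductionSetStub(bool enable) { global_reduce_stub = enable; }

// Hypothetical RAII guard: sets the flag for the lifetime of the object
// and restores the previous value when it goes out of scope.
class ScopedGlobalReduction
{
  bool previous_;

public:
  explicit ScopedGlobalReduction(bool enable) : previous_(commGlobalReductionStub())
  {
    commGlobalReductionSetStub(enable);
  }
  ~ScopedGlobalReduction() { commGlobalReductionSetStub(previous_); }

  // Non-copyable: copying would restore the flag twice.
  ScopedGlobalReduction(const ScopedGlobalReduction &) = delete;
  ScopedGlobalReduction &operator=(const ScopedGlobalReduction &) = delete;
};
```

A guard of this kind keeps the flag consistent even if the enclosed code returns early or throws.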

◆ reduceDouble()

| void reduceDouble | ( | double & | ) |

Definition at line 806 of file comm_common.cpp.

References comm_allreduce(), and globalReduce.

Referenced by quda::SolverParam::updateInvertParam().

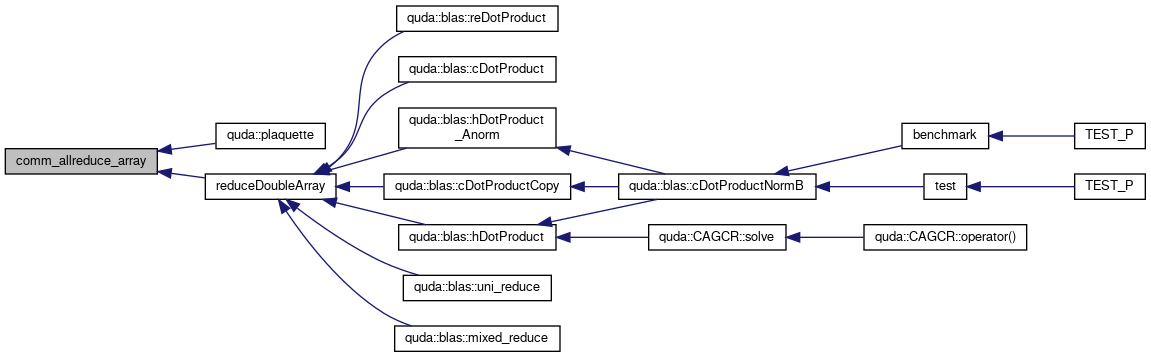

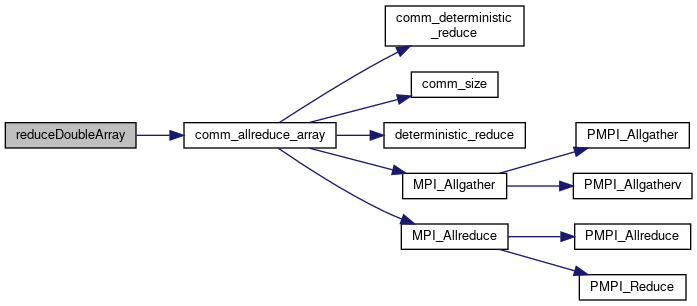

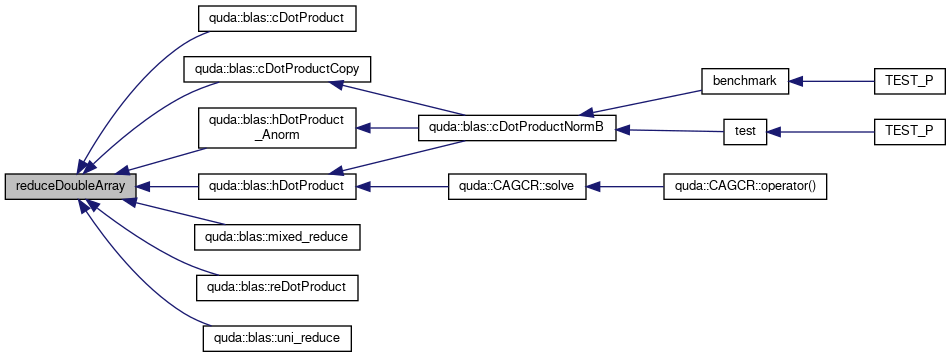

◆ reduceDoubleArray()

| void reduceDoubleArray | ( | double * | , |

| const int | len | ||

| ) |

Definition at line 808 of file comm_common.cpp.

References comm_allreduce_array(), and globalReduce.

Referenced by quda::blas::cDotProduct(), quda::blas::cDotProductCopy(), quda::blas::hDotProduct(), quda::blas::hDotProduct_Anorm(), quda::blas::mixed_reduce(), quda::blas::reDotProduct(), and quda::blas::uni_reduce().

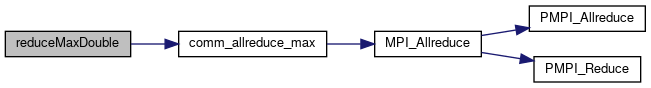

◆ reduceMaxDouble()

| void reduceMaxDouble | ( | double & | ) |

Definition at line 804 of file comm_common.cpp.

References comm_allreduce_max().
